1. Introduction

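Continuous integration and continuous deployment (CI/CD) are central to modern software development. Every code change is built and tested automatically, and changes that pass are released without manual steps. This shortens feedback loops and makes releases faster and more reliable.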

AWS CodePipeline is a fully managed CI/CD service from Amazon Web Services (AWS) that automates these release steps.

2. Purpose

The purpose of this article is to guide readers through setting up and using AWS CodePipeline effectively.

3. What are AWS CodePipeline, CodeCommit, CodeBuild, and CodeDeploy?

CodePipeline:

- What it does: CodePipeline is a continuous integration and continuous delivery (CI/CD) service that automates the build, test, and deployment phases of your software release process.

- Key Benefit: It helps you streamline and visualize the entire release process, from source code changes to production deployment, making it easier to manage and improve software delivery.

CodeCommit:

- What it does: CodeCommit is a secure and scalable source code management service that hosts Git repositories.

- Key Benefit: It provides a reliable and efficient way to store and manage your application's source code, making collaboration among developers smoother, and integrates seamlessly with other AWS CI/CD tools.

CodeBuild:

- What it does: CodeBuild is a fully managed build service that compiles source code, runs tests, and produces deployable software artifacts.

- Key Benefit: It automates the building process, ensuring consistent and reliable software builds, and integrates well with other CI/CD services for a seamless development pipeline.

CodeDeploy:

- What it does: CodeDeploy automates application deployments to compute services such as Amazon EC2 instances, on-premises servers, AWS Lambda functions, and Amazon ECS services.

- Key Benefit: It ensures your application updates are smoothly deployed across various environments, offers deployment strategies, and can automatically roll back if issues arise, resulting in reliable and efficient deployments.
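The following toy Python model shows how CodePipeline chains these services. It uses plain functions and makes no AWS calls. Each stage receives the previous stage's output artifact, and a failing stage stops the release before anything is deployed. The stage functions and the my-app image name are hypothetical stand-ins, not real CodeCommit, CodeBuild, or CodeDeploy APIs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# An artifact is modeled as a plain dict; a stage maps one artifact to the next.
Artifact = Dict[str, str]
Stage = Callable[[Artifact], Artifact]


@dataclass
class RunResult:
    completed: List[str] = field(default_factory=list)
    failed_stage: Optional[str] = None
    artifact: Artifact = field(default_factory=dict)


def run_pipeline(stages: List[Tuple[str, Stage]], trigger: Artifact) -> RunResult:
    """Run stages in order, passing each one the previous stage's output."""
    result = RunResult(artifact=dict(trigger))
    for name, stage in stages:
        try:
            result.artifact = stage(result.artifact)
        except Exception:
            # A failed stage stops the run: later stages never execute.
            result.failed_stage = name
            break
        result.completed.append(name)
    return result


# Hypothetical stand-ins for the CodeCommit, CodeBuild, and CodeDeploy actions.
def source(artifact: Artifact) -> Artifact:
    return {**artifact, "revision": artifact["commit"]}


def build(artifact: Artifact) -> Artifact:
    return {**artifact, "image": "my-app:" + artifact["revision"][:7]}


def deploy(artifact: Artifact) -> Artifact:
    return {**artifact, "deployed": artifact["image"]}


if __name__ == "__main__":
    run = run_pipeline(
        [("Source", source), ("Build", build), ("Deploy", deploy)],
        {"commit": "a1b2c3d4e5f6"},
    )
    print(run.completed, run.artifact["deployed"])
```

Suppose build raises an exception, for example because unit tests fail. Then the run records Build as the failed stage and only Source as completed, so nothing reaches the deploy stage.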

4. Creating AWS CodePipeline with the AWS Console

Estimated Time:

- With DevOps knowledge: 6-8 hours

- Without DevOps knowledge: 2-3 days

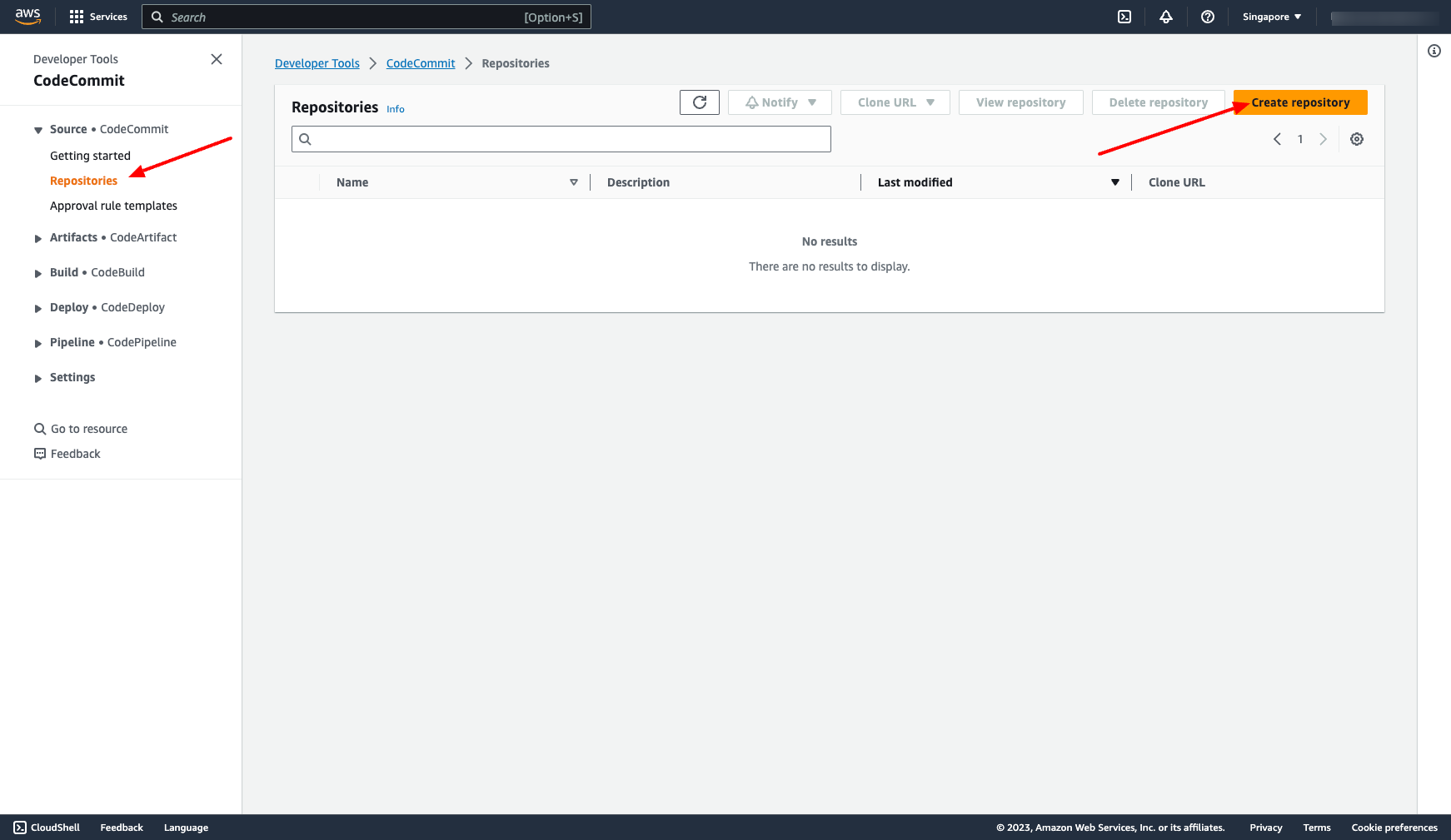

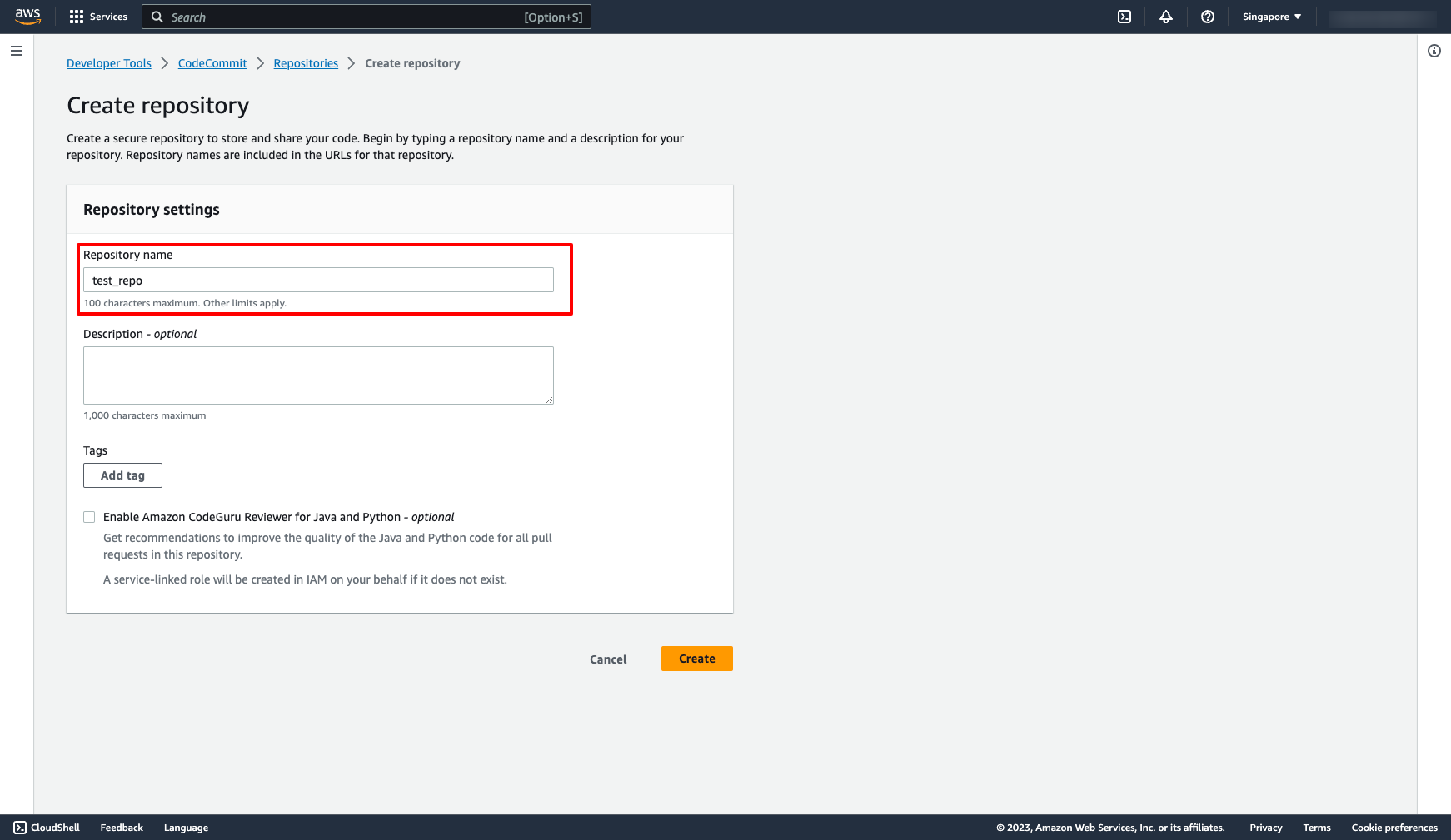

Suppose we want a CI/CD pipeline with three steps. First, it automatically builds the code in a CodeCommit repository. Next, it pushes the resulting container image to Amazon ECR. Finally, it deploys that image to Amazon ECS.

Creating CodeCommit repository

Go to CodeCommit → Click “Create repository” → Enter a name for the repository:

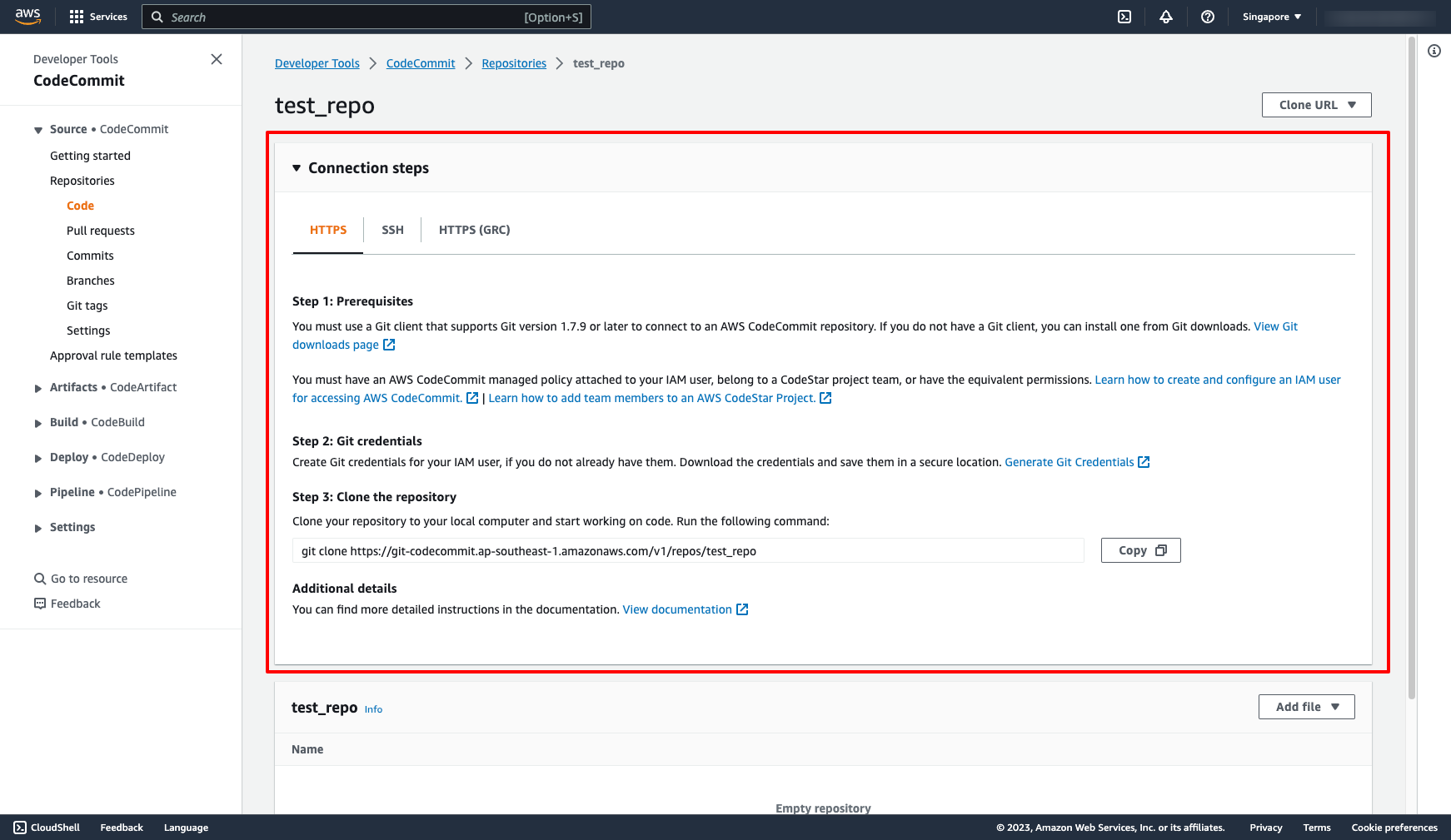

Follow the connection steps shown in the console to commit and push code to the repository:


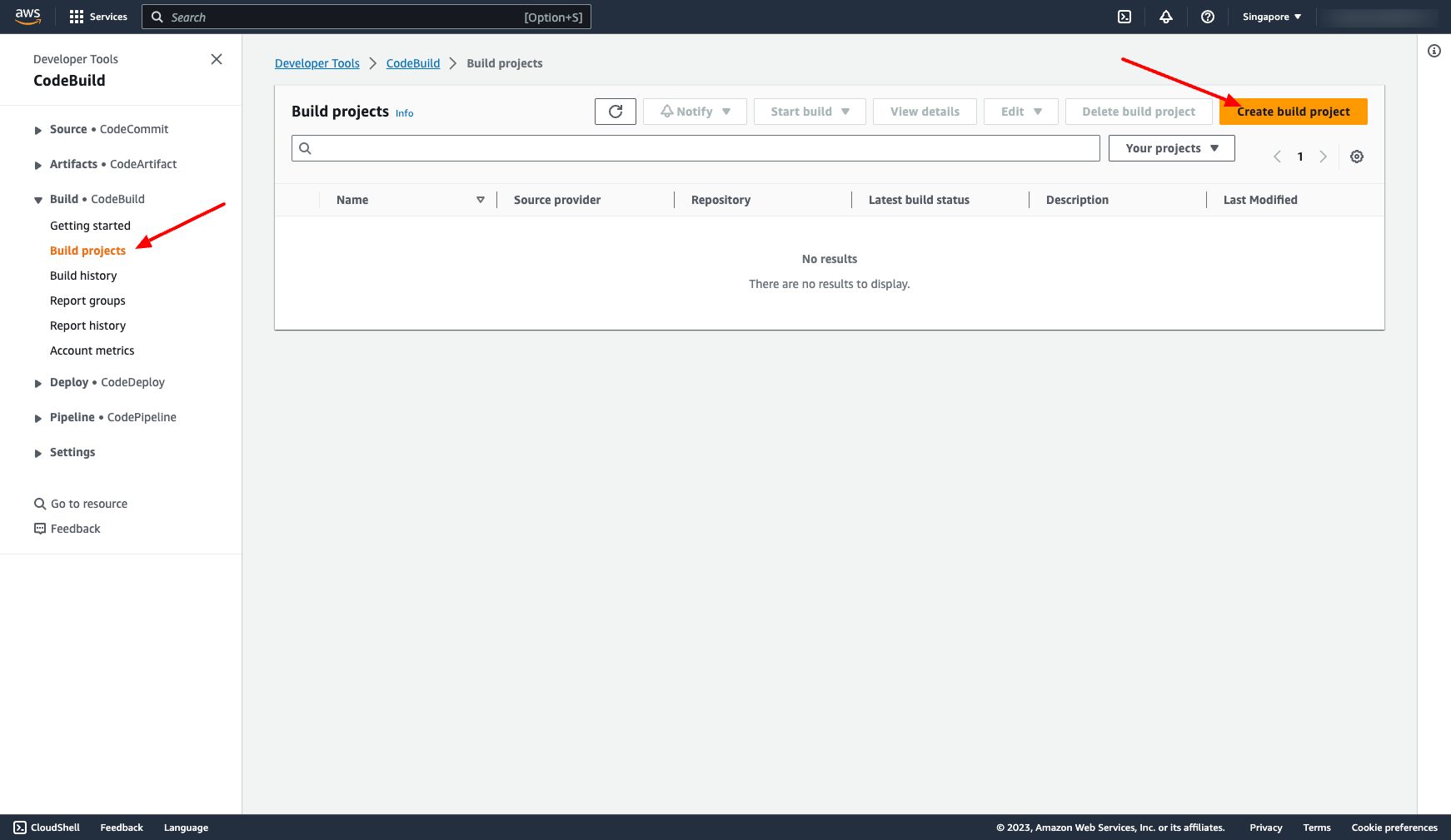
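The connection steps come down to cloning the repository with one of the clone URLs that CodeCommit displays. After that, you commit and push as with any Git remote. These URLs follow a fixed pattern. The small helper below rebuilds them for a given region and repository name. The helper is hypothetical and not part of any AWS SDK, and us-east-1 and my-app-repo are example values.

```python
from typing import Dict


def codecommit_clone_urls(region: str, repo_name: str) -> Dict[str, str]:
    """Build the clone URLs that CodeCommit lists for a repository."""
    host = f"git-codecommit.{region}.amazonaws.com"
    return {
        # HTTPS, e.g. with Git credentials generated for an IAM user.
        "https": f"https://{host}/v1/repos/{repo_name}",
        # SSH, with a public key uploaded to the IAM user.
        "ssh": f"ssh://{host}/v1/repos/{repo_name}",
        # HTTPS through the git-remote-codecommit (GRC) helper.
        "https_grc": f"codecommit::{region}://{repo_name}",
    }


if __name__ == "__main__":
    for kind, url in codecommit_clone_urls("us-east-1", "my-app-repo").items():
        print(f"{kind:10} git clone {url}")
```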

Creating CodeBuild project

Go to CodeBuild projects → Choose “Create build project” → Enter the necessary information:


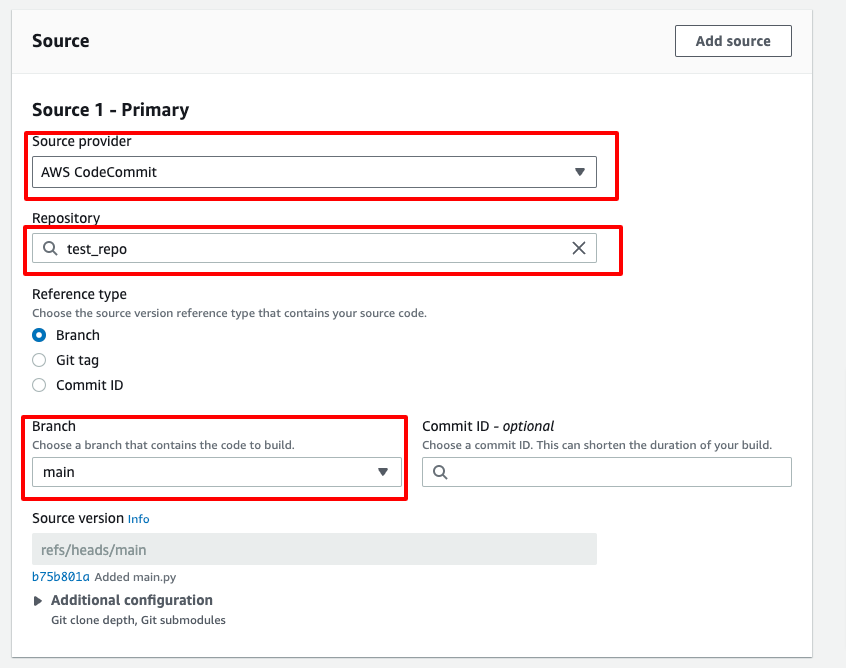

Choose CodeCommit as the source provider and select the repository created in the previous step:


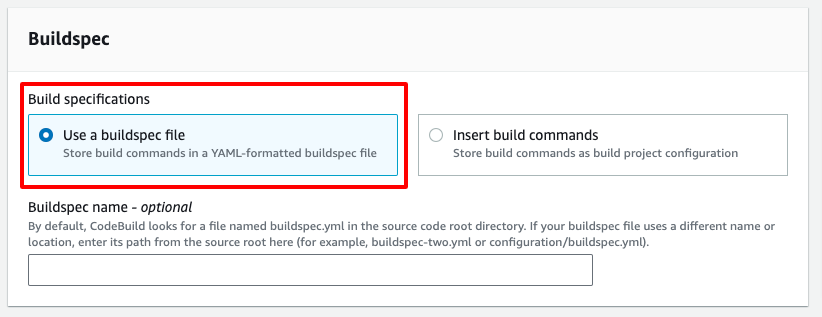

Choose “Use a buildspec file”. This means a buildspec.yml file must be pushed to the CodeCommit repository:


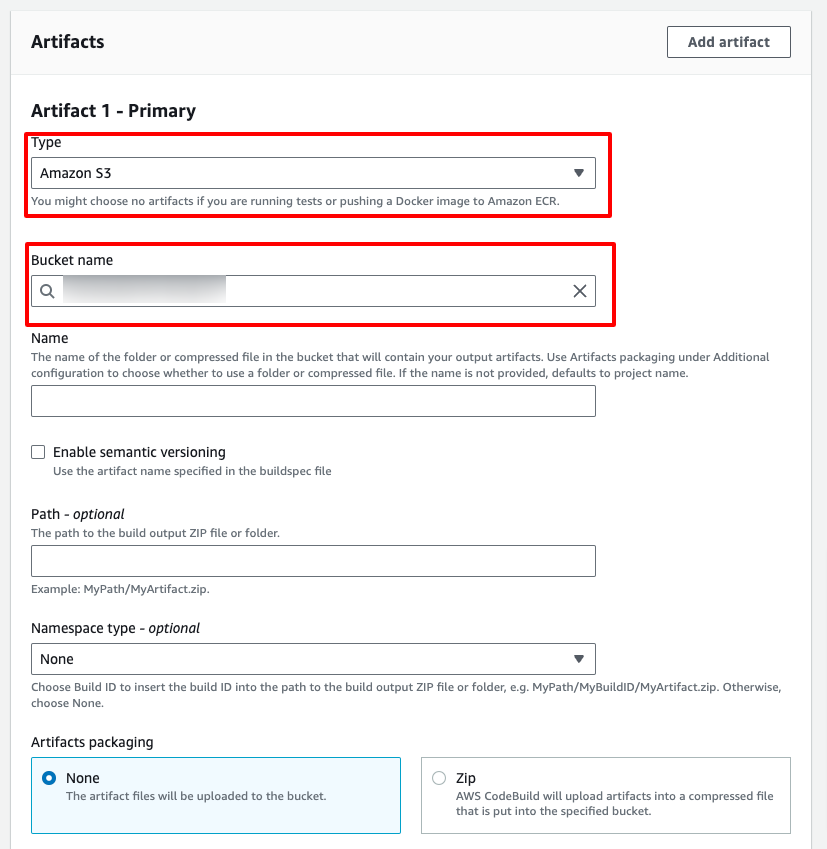

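For artifacts, choose Amazon S3. The container image itself is pushed to ECR by the commands in buildspec.yml. The artifact contains the build output files listed under artifacts in buildspec.yml: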
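Conceptually, the build's output artifact is a zip archive of the files listed under artifacts: files: in buildspec.yml. When the project runs inside CodePipeline, that archive goes to the pipeline's S3 artifact store and is handed to the deploy stage. The Python sketch below mimics the packaging step with made-up file contents. It illustrates the idea only and is not how CodeBuild is implemented. The choice to raise an error for a missing file is this sketch's own.

```python
import io
import zipfile
from typing import Dict, List


def package_artifacts(workspace: Dict[str, str], files: List[str]) -> bytes:
    """Zip the files listed under artifacts.files into an in-memory archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for name in files:
            if name not in workspace:
                # Design choice for this sketch: fail loudly on a missing file.
                raise FileNotFoundError(f"build did not produce {name}")
            archive.writestr(name, workspace[name])
    return buffer.getvalue()


if __name__ == "__main__":
    # Made-up build output; files not listed in artifacts.files are left out.
    workspace = {
        "imagedefinitions.json": '[{"name":"hello-world","imageUri":"repo:abc1234"}]',
        "appspec.yml": "version: 0.0\n",
        "taskdef.json": "{}\n",
        "node_modules/ignored.js": "",
    }
    data = package_artifacts(workspace, ["imagedefinitions.json", "appspec.yml", "taskdef.json"])
    print(zipfile.ZipFile(io.BytesIO(data)).namelist())
```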


Creating CodeDeploy application

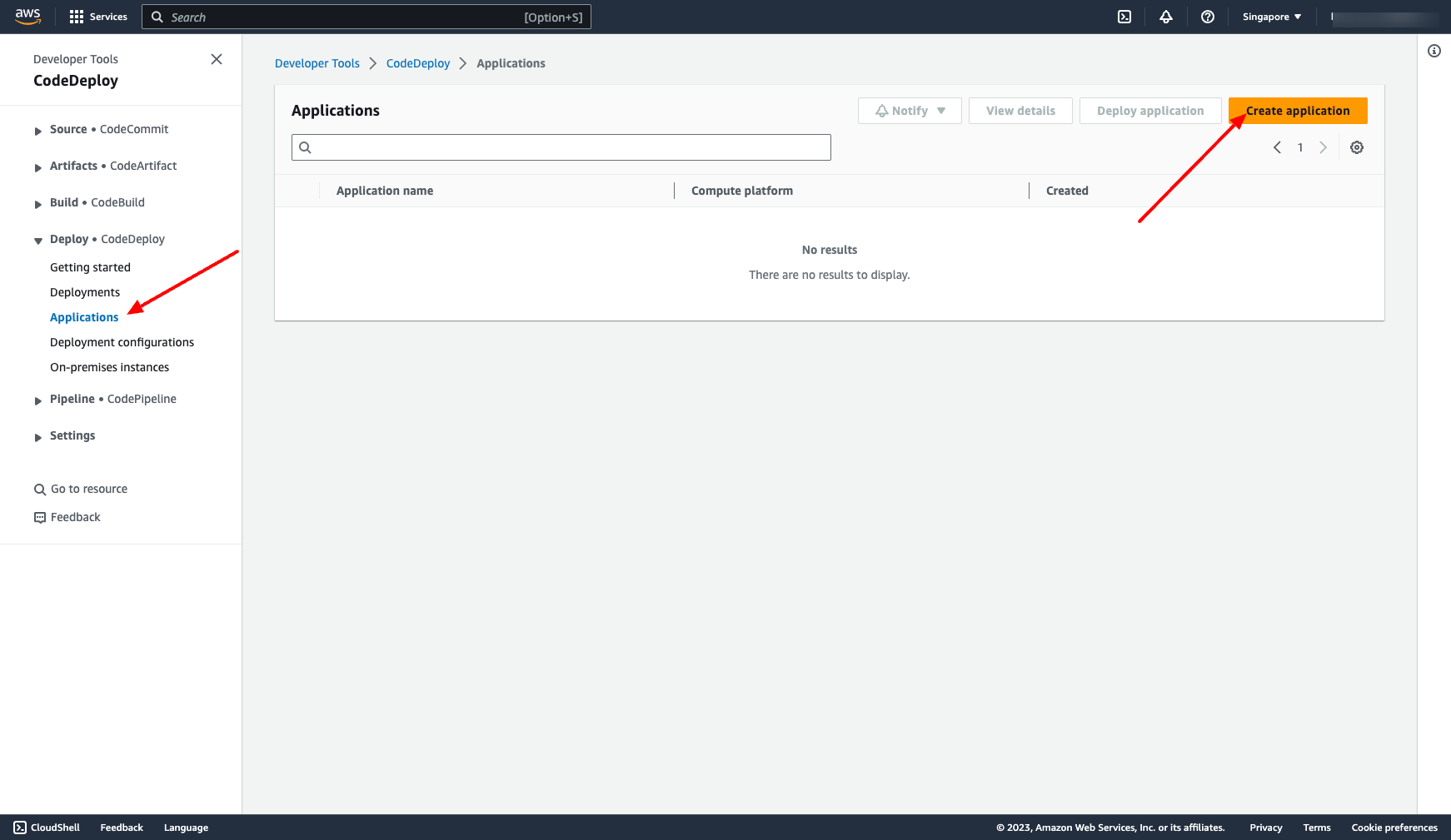

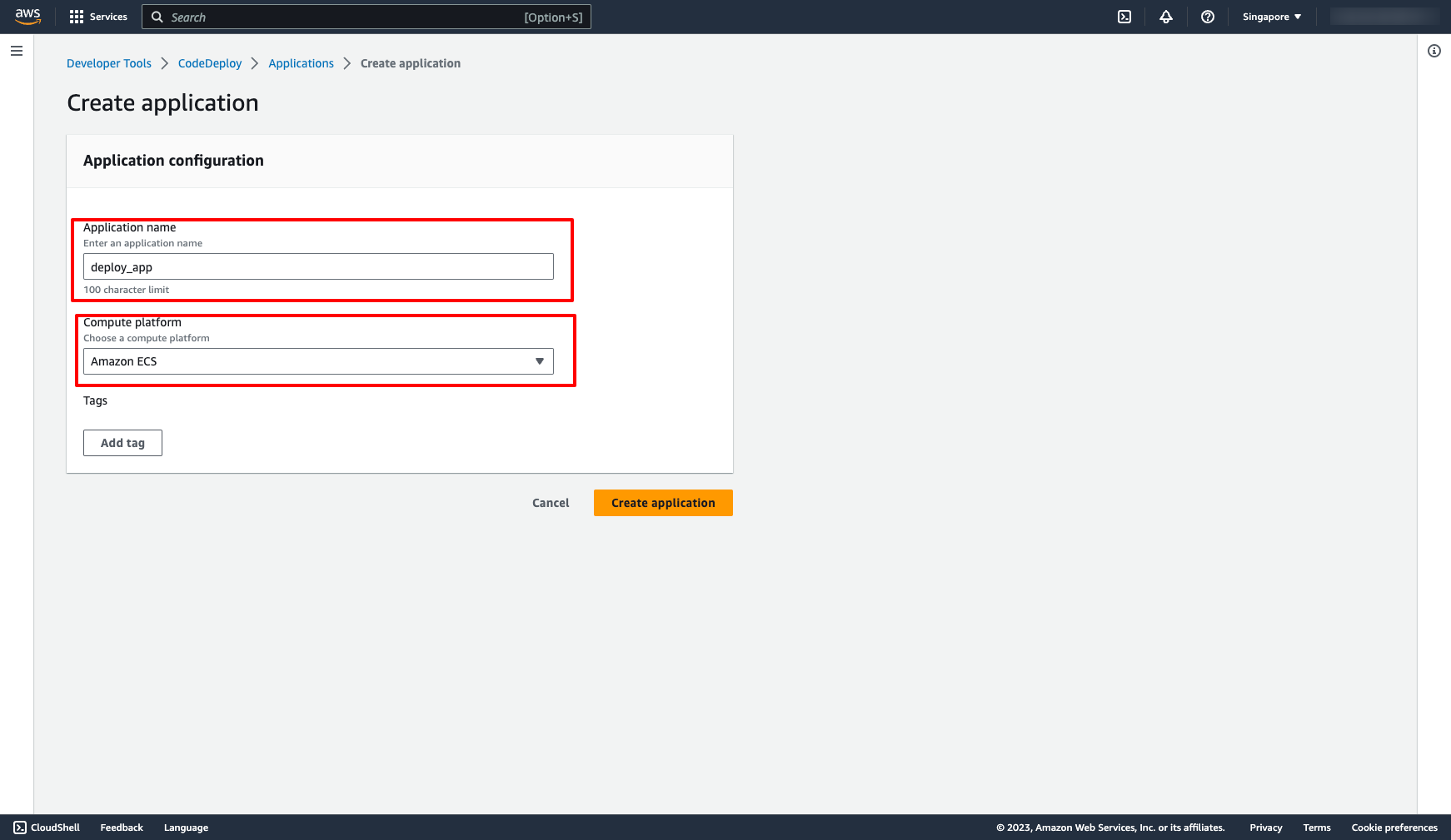

Go to Applications → Click “Create application” → Enter a name and choose the compute platform:


We can choose EC2/On-premises, AWS Lambda, or Amazon ECS. In this case, we choose Amazon ECS.

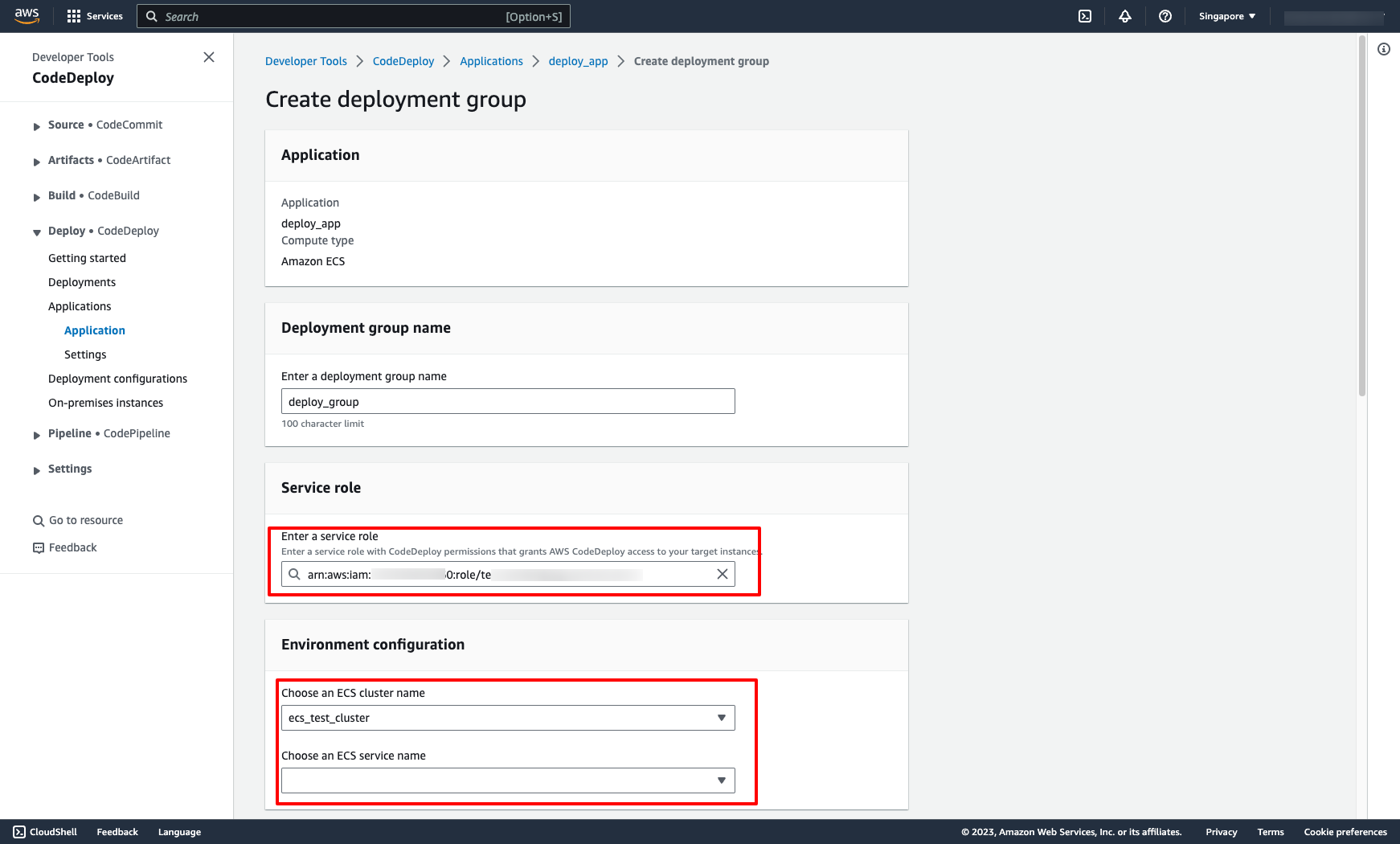

Next, create a deployment group in the CodeDeploy application.

Open the application → Choose “Create deployment group”:

Choose the service role for CodeDeploy.

Choose the ECS cluster and ECS service. This assumes the cluster and service already exist and that the service uses the CodeDeploy (blue/green) deployment controller.


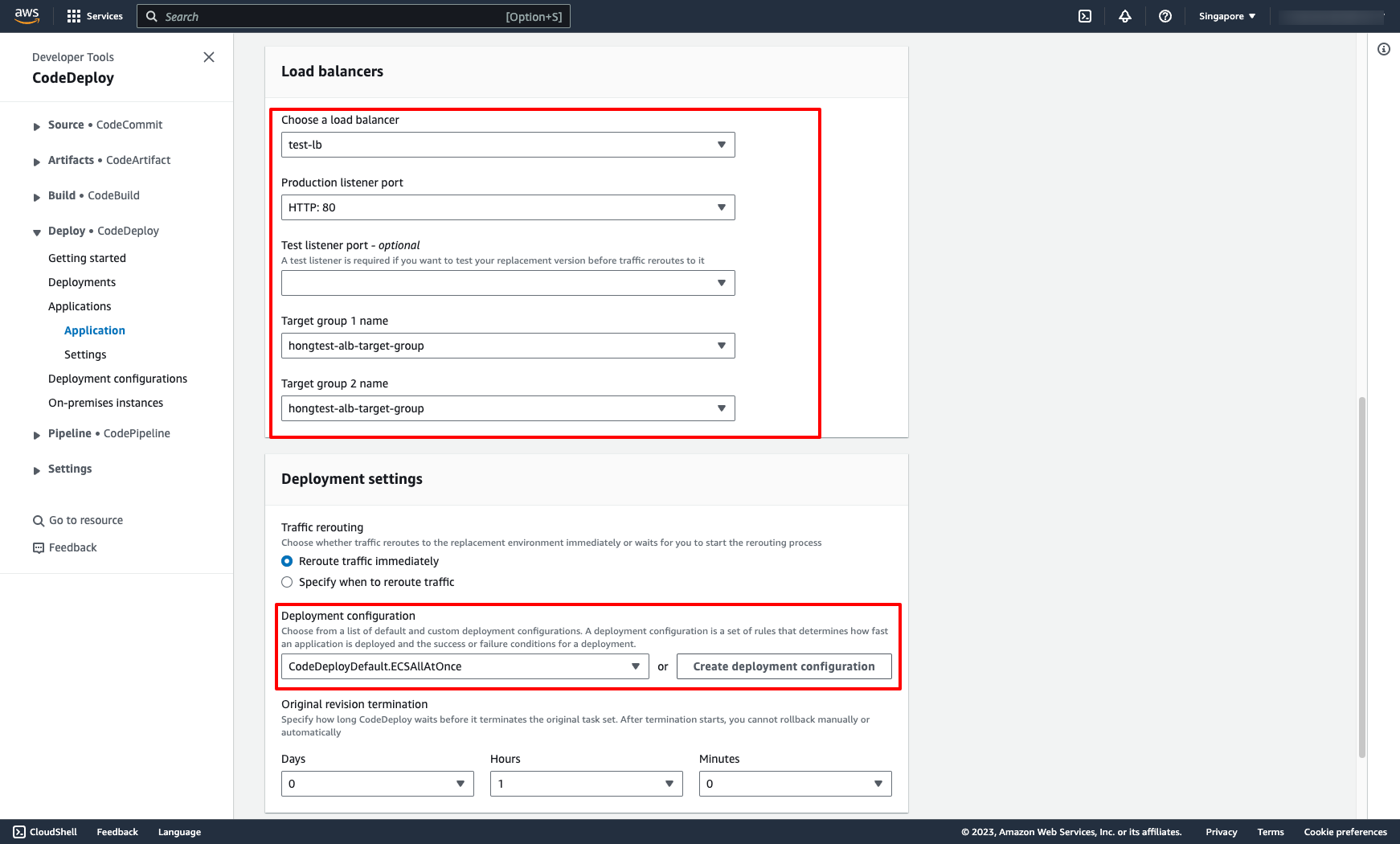

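Choose the load balancer and target groups. A blue/green deployment uses two target groups: one for the current (blue) tasks and one for the replacement (green) tasks: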
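During a blue/green deployment, CodeDeploy starts the new (green) task set behind the second target group. It then reroutes production traffic according to the deployment configuration chosen in the deployment group. Examples include CodeDeployDefault.ECSAllAtOnce, CodeDeployDefault.ECSLinear10PercentEvery1Minutes, and CodeDeployDefault.ECSCanary10Percent5Minutes.

The Python sketch below is a simplified model of those shift patterns and does not call AWS. It assumes the first increment happens immediately, and it ignores wait and termination settings.

```python
from typing import List, Tuple

Schedule = List[Tuple[int, int]]  # (minutes after start, % of traffic on green)


def all_at_once() -> Schedule:
    return [(0, 100)]


def linear(step_percent: int, interval_minutes: int) -> Schedule:
    """Shift equal increments, one per interval, until green has 100%."""
    if step_percent <= 0:
        raise ValueError("step_percent must be positive")
    schedule: Schedule = []
    minute, percent = 0, 0
    while percent < 100:
        percent = min(100, percent + step_percent)
        schedule.append((minute, percent))
        minute += interval_minutes
    return schedule


def canary(first_percent: int, wait_minutes: int) -> Schedule:
    """Shift a first slice, wait, then shift the remainder in one step."""
    return [(0, first_percent), (wait_minutes, 100)]


if __name__ == "__main__":
    print("ECSLinear10PercentEvery1Minutes:", linear(10, 1))
    print("ECSCanary10Percent5Minutes:", canary(10, 5))
```

A canary exposes only a small slice of users to the new version until the wait ends. A linear configuration trades a longer rollout for a gradual ramp.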


Creating CodePipeline

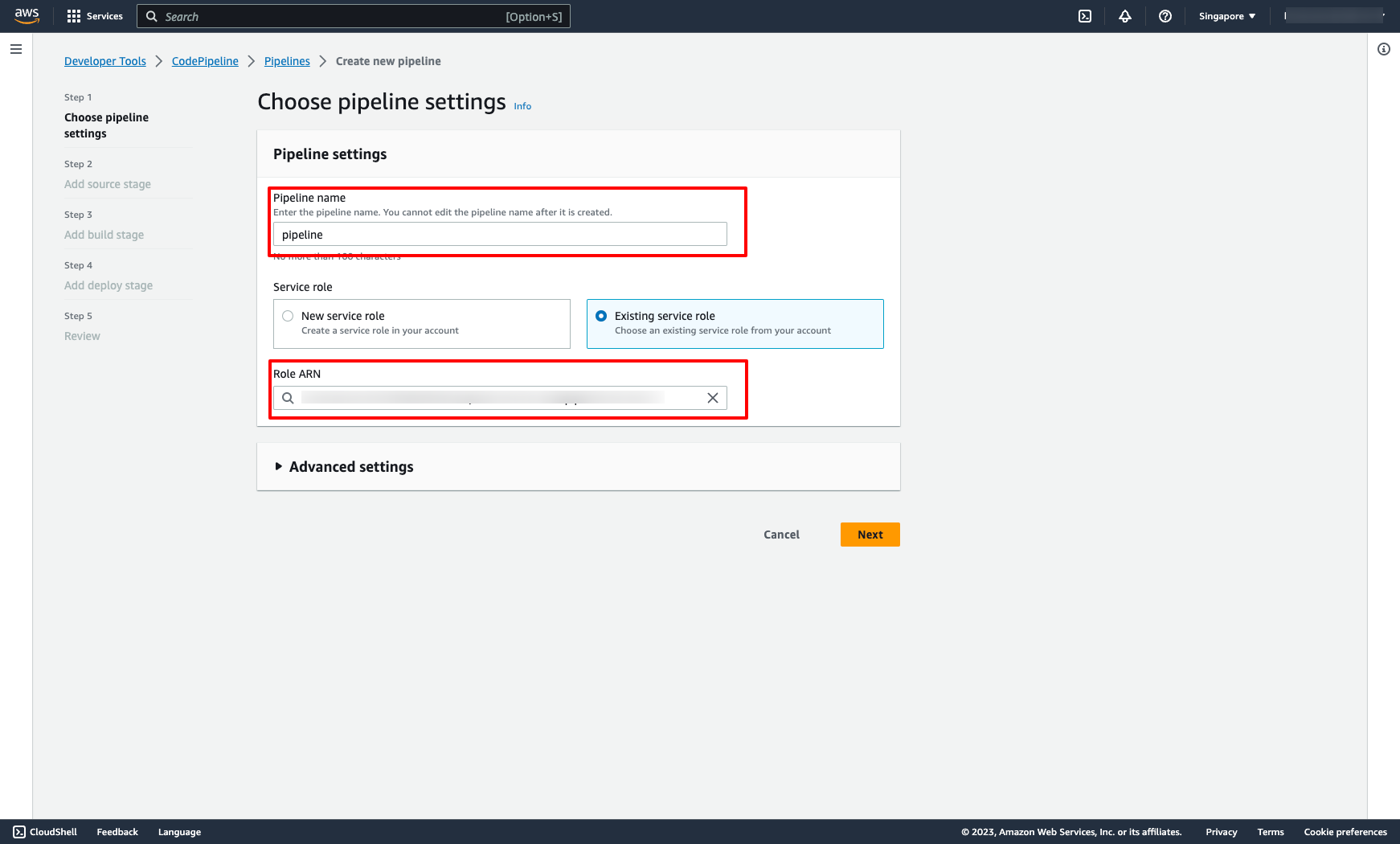

Go to Pipelines → Click “Create pipeline” and follow the steps on screen:


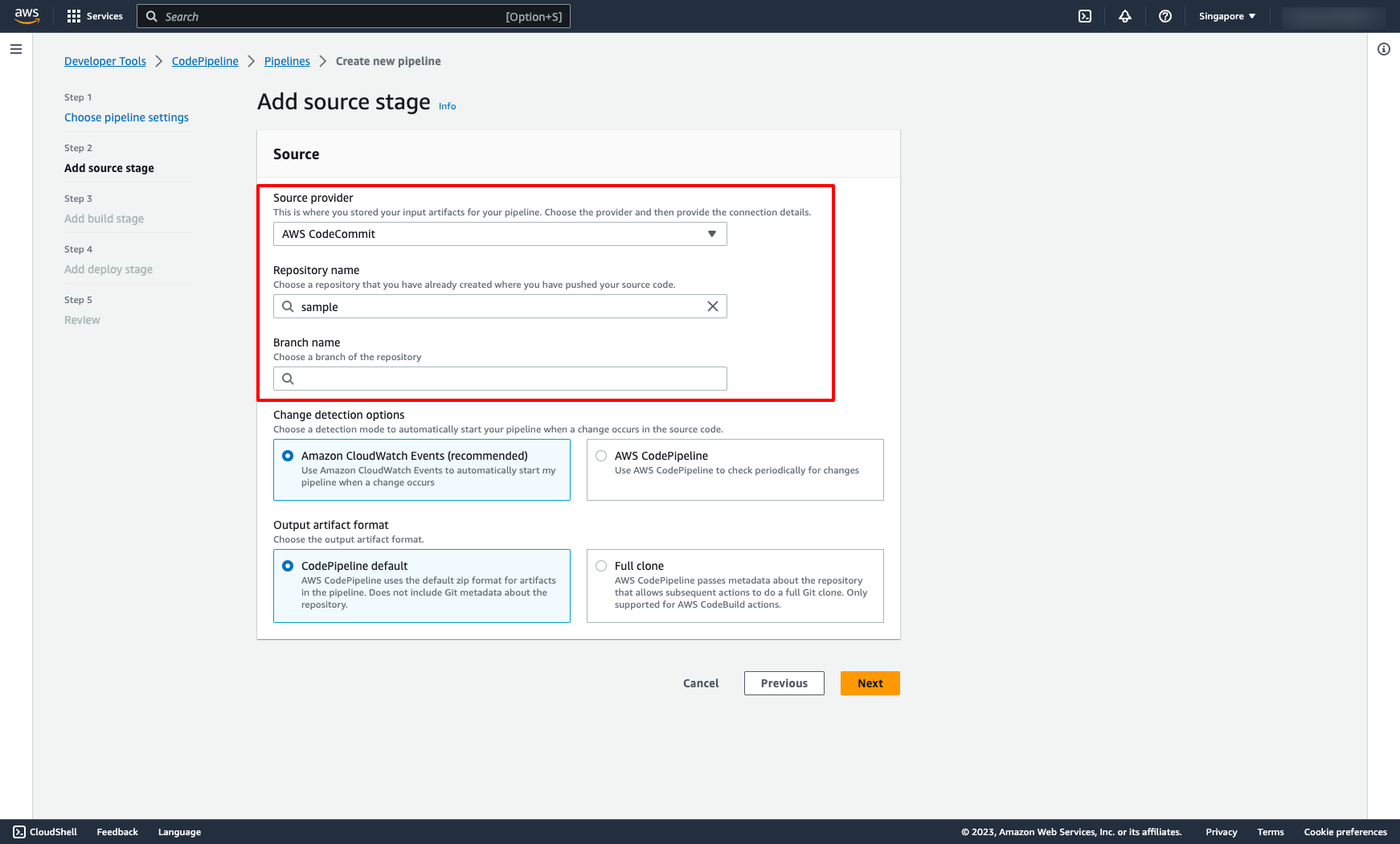

Choose the source provider you want:


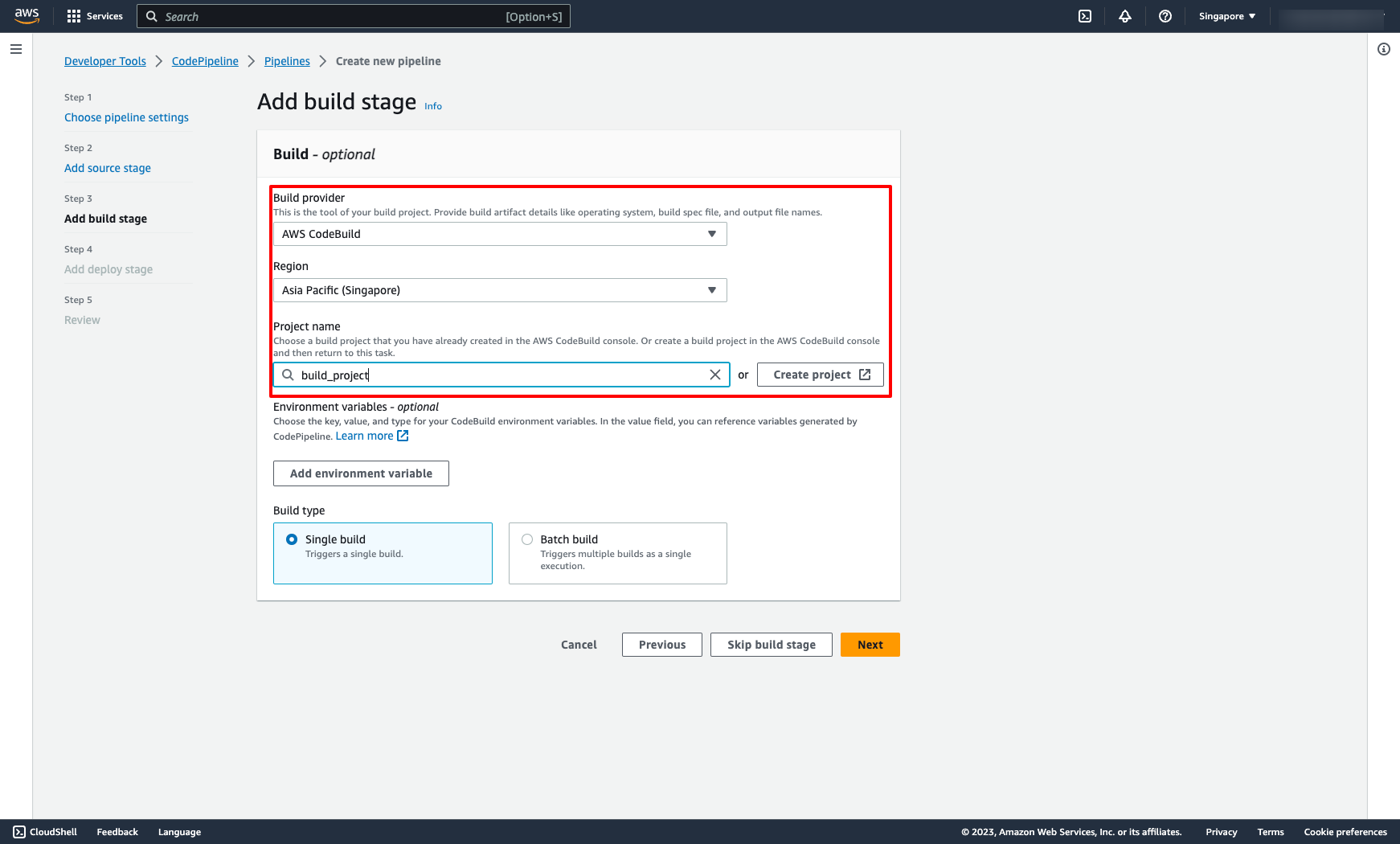

Choose the build provider and build project:


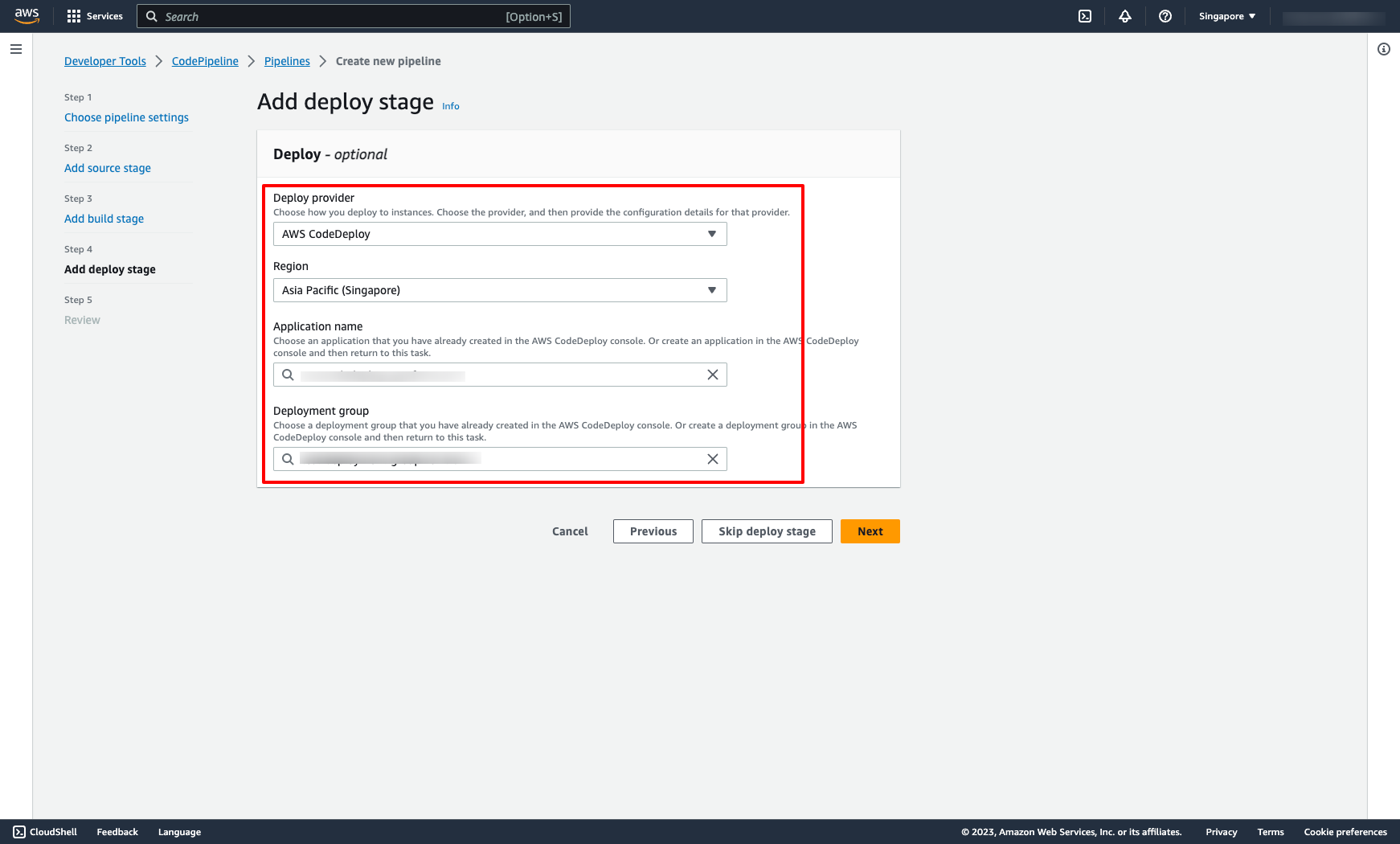

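Choose the deploy provider and application. For this setup, the provider is Amazon ECS (Blue/Green), which uses the CodeDeploy application and deployment group created earlier: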


After preparing the CI/CD pipeline on AWS, the next step is to commit the Dockerfile, buildspec.yml, appspec.yml, and taskdef.json files to your CodeCommit repository.

The Dockerfile can be written to suit your application's requirements.

Here are a sample buildspec.yml for CodeBuild and a sample appspec.yml for CodeDeploy:


```yaml
# buildspec.yml
# Assumes ECR_REPOSITORY_URI is defined as an environment variable on the CodeBuild project.
version: 0.2

phases:
  install:
    runtime-versions:
      nodejs: 14 # must be a version supported by the selected CodeBuild image
  pre_build:
    commands:
      - echo Logging in to Amazon ECR...
      - aws --version
      - aws ecr get-login-password --region $AWS_DEFAULT_REGION | docker login --username AWS --password-stdin ${ECR_REPOSITORY_URI}
      - COMMIT_HASH=$(echo $CODEBUILD_RESOLVED_SOURCE_VERSION | cut -c 1-7)
      - IMAGE_TAG=${COMMIT_HASH:=latest}
  build:
    commands:
      - echo Build started on `date`
      - echo Building the Docker image...
      - docker build -t ${ECR_REPOSITORY_URI}:latest .
      - docker tag ${ECR_REPOSITORY_URI}:latest ${ECR_REPOSITORY_URI}:$IMAGE_TAG
  post_build:
    commands:
      - echo Build completed on `date`
      - echo Pushing the Docker images...
      - docker push ${ECR_REPOSITORY_URI}:latest
      - docker push ${ECR_REPOSITORY_URI}:$IMAGE_TAG
      - echo Writing image definitions file...
      - printf '[{"name":"hello-world","imageUri":"%s"}]' ${ECR_REPOSITORY_URI}:$IMAGE_TAG > imagedefinitions.json
      - sed -i 's#<IMAGE_NAME>#'"$ECR_REPOSITORY_URI:$IMAGE_TAG"'#g' taskdef.json

artifacts:
  files:
    - imagedefinitions.json
    - appspec.yml
    - taskdef.json
```
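The tagging logic in pre_build and the two file-writing commands in post_build are easy to misread. Below is a Python transcription that can be checked locally. It only models the shell commands; CodeBuild runs the commands themselves, not this code. CODEBUILD_RESOLVED_SOURCE_VERSION is the commit ID that CodeBuild provides for a CodeCommit source. The repository URI in the example is made up.

```python
import json
from typing import Optional


def image_tag(resolved_source_version: Optional[str]) -> str:
    """COMMIT_HASH=$(... | cut -c 1-7) followed by IMAGE_TAG=${COMMIT_HASH:=latest}."""
    commit_hash = (resolved_source_version or "")[:7]
    # ${VAR:=latest} falls back to "latest" when the hash is empty or unset.
    return commit_hash or "latest"


def image_definitions(container_name: str, image_uri: str) -> str:
    """The compact JSON that the printf command writes to imagedefinitions.json."""
    return json.dumps([{"name": container_name, "imageUri": image_uri}], separators=(",", ":"))


def fill_image(taskdef_text: str, image_uri: str) -> str:
    """The sed substitution: replace every <IMAGE_NAME> in taskdef.json."""
    return taskdef_text.replace("<IMAGE_NAME>", image_uri)


if __name__ == "__main__":
    repo_uri = "123456789012.dkr.ecr.us-east-1.amazonaws.com/hello-world"  # made-up value
    tag = image_tag("a1b2c3d4e5f60718293a4b5c6d7e8f9012345678")
    print(image_definitions("hello-world", f"{repo_uri}:{tag}"))
    print(fill_image('"image": "<IMAGE_NAME>"', f"{repo_uri}:{tag}"))
```

imagedefinitions.json is the file format read by CodePipeline's standard Amazon ECS deploy action. Its name value (hello-world in the sample) should match the container name in the task definition.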

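And here is the sample appspec.yml:

```yaml
version: 0.0
Resources:
  - TargetService:
      Type: AWS::ECS::Service
      Properties:
        TaskDefinition: <TASK_DEFINITION>
        LoadBalancerInfo:
          ContainerName: <CONTAINER_NAME>
          ContainerPort: 80
```

Please make sure to replace all placeholder values with your own information.

The exception is `<TASK_DEFINITION>`. In a CodePipeline ECS blue/green deployment, leave it as-is, because the pipeline fills it in with the task definition it registers from taskdef.json.

ContainerName must match the container name in taskdef.json.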
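A leftover placeholder usually surfaces only as a failed deployment, so a small pre-commit check can help. The hypothetical helper below scans a file's text for upper-case tokens in angle brackets. It ignores the two tokens this setup fills in automatically: `<TASK_DEFINITION>` (filled by CodePipeline) and `<IMAGE_NAME>` (filled by the sed command in buildspec.yml).

```python
import re
from typing import FrozenSet, List

PLACEHOLDER = re.compile(r"<[A-Z][A-Z0-9_]*>")

# Filled in automatically in this article's setup: <TASK_DEFINITION> by CodePipeline,
# <IMAGE_NAME> by the sed command in buildspec.yml.
FILLED_BY_PIPELINE: FrozenSet[str] = frozenset({"<TASK_DEFINITION>", "<IMAGE_NAME>"})


def unreplaced_placeholders(text: str,
                            allowed: FrozenSet[str] = FILLED_BY_PIPELINE) -> List[str]:
    """Return the placeholders in text that still need a real value."""
    return sorted(set(PLACEHOLDER.findall(text)) - allowed)


if __name__ == "__main__":
    appspec = "TaskDefinition: <TASK_DEFINITION>\nContainerName: <CONTAINER_NAME>\n"
    print(unreplaced_placeholders(appspec))
```

Running it over appspec.yml and taskdef.json before each commit catches a forgotten `<CONTAINER_NAME>` or `<FAMILY_NAME>` before the pipeline does. A Git pre-commit hook is one place to run it.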

And here is a sample taskdef.json:

//taskdef.json

{

"containerDefinitions": [

{

"name": "<CONTAINER_NAME>",

"image": "<IMAGE_NAME>",

"cpu": 256,

"memory": 512,

"portMappings": [

{

"containerPort": 80,

"hostPort": 80,

"protocol": "tcp"

}

],

"essential": true,

"entryPoint": [],

"environment": [],

"mountPoints": [],

"volumesFrom": []

}

],

"family": "<FAMILY_NAME>",

"taskRoleArn": "arn:aws:iam::942XXXXX:role/test-iam-for-ecs-task",

"executionRoleArn": "arn:aws:iam::942XXXXX:role/test-iam-for-ecs-task",

"networkMode": "awsvpc",

"volumes": [],

"status": "ACTIVE",

"placementConstraints": [],

"compatibilities": [

"EC2",

"FARGATE"

],

"requiresCompatibilities": [

"FARGATE"

],

"cpu": "256",

"memory": "512",

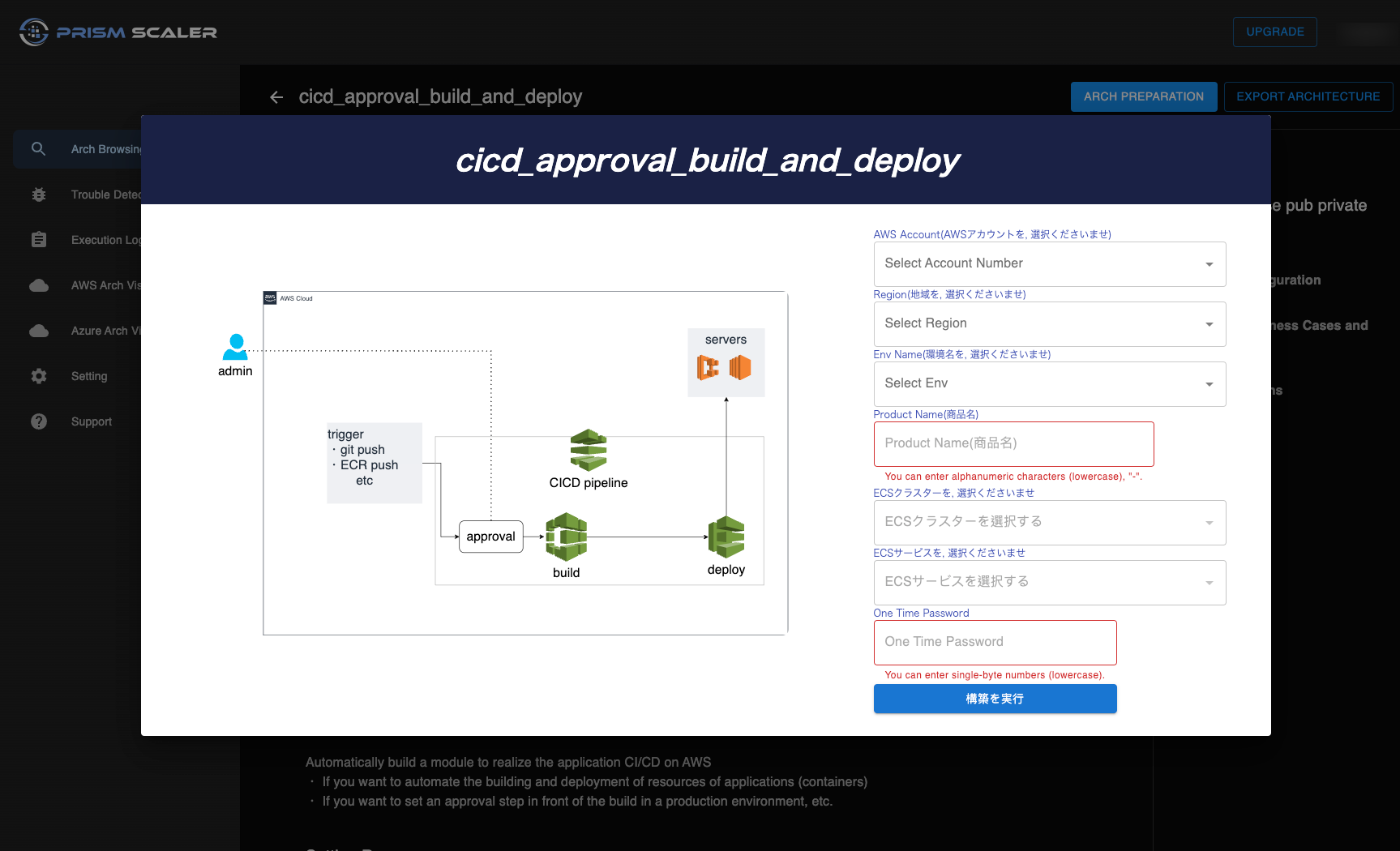

} 5. Creating AWS CodePipeline with PrismScaler

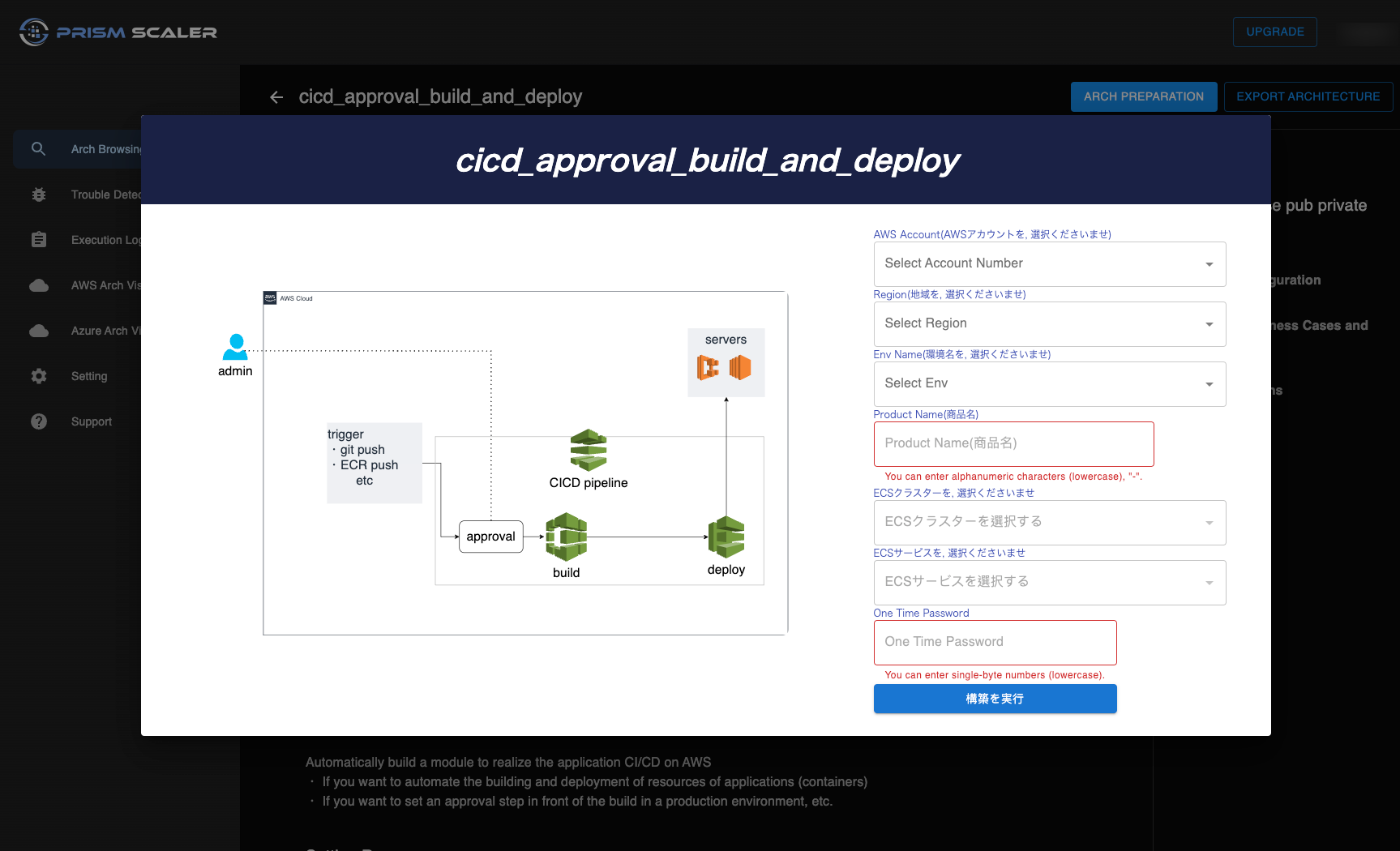

As the previous section shows, building a CI/CD pipeline on AWS involves quite a few steps that must be done manually in the AWS Console.

With PrismScaler, the same process is reduced to a single form.

All we need to do is fill out the fields in this form and apply it, and we have a complete pipeline.

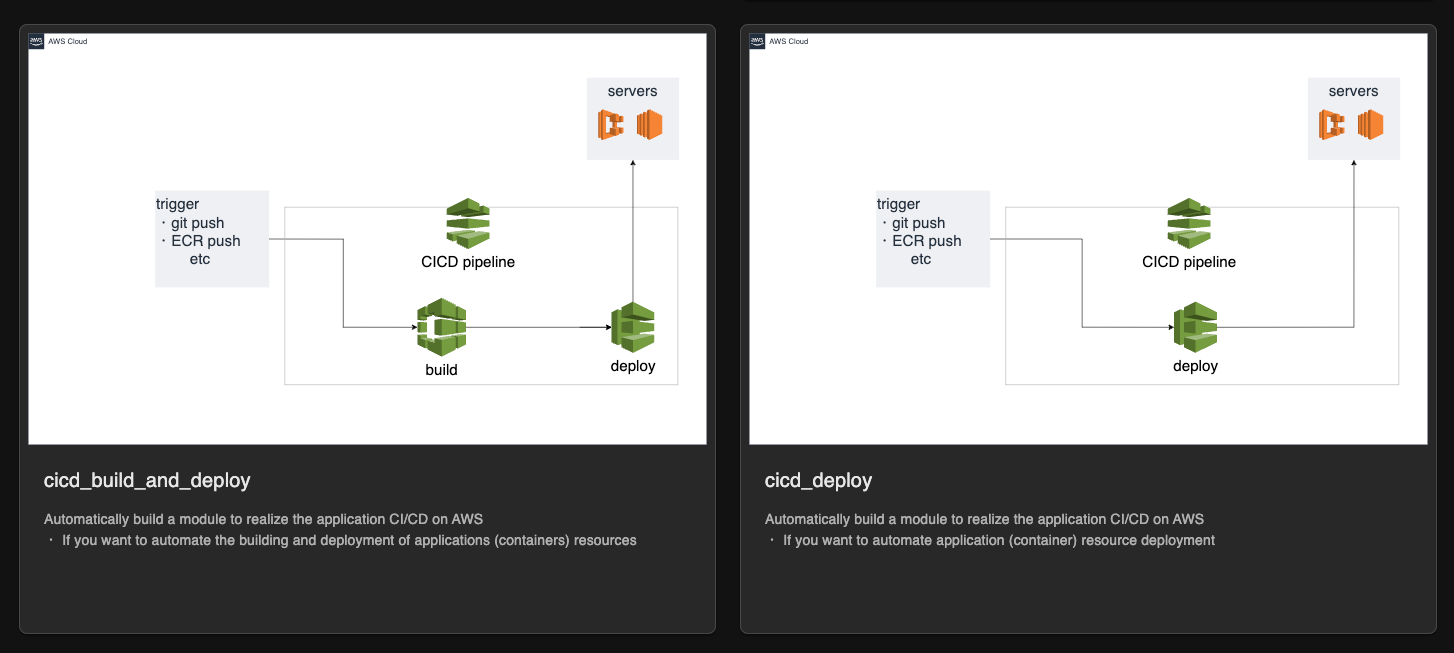

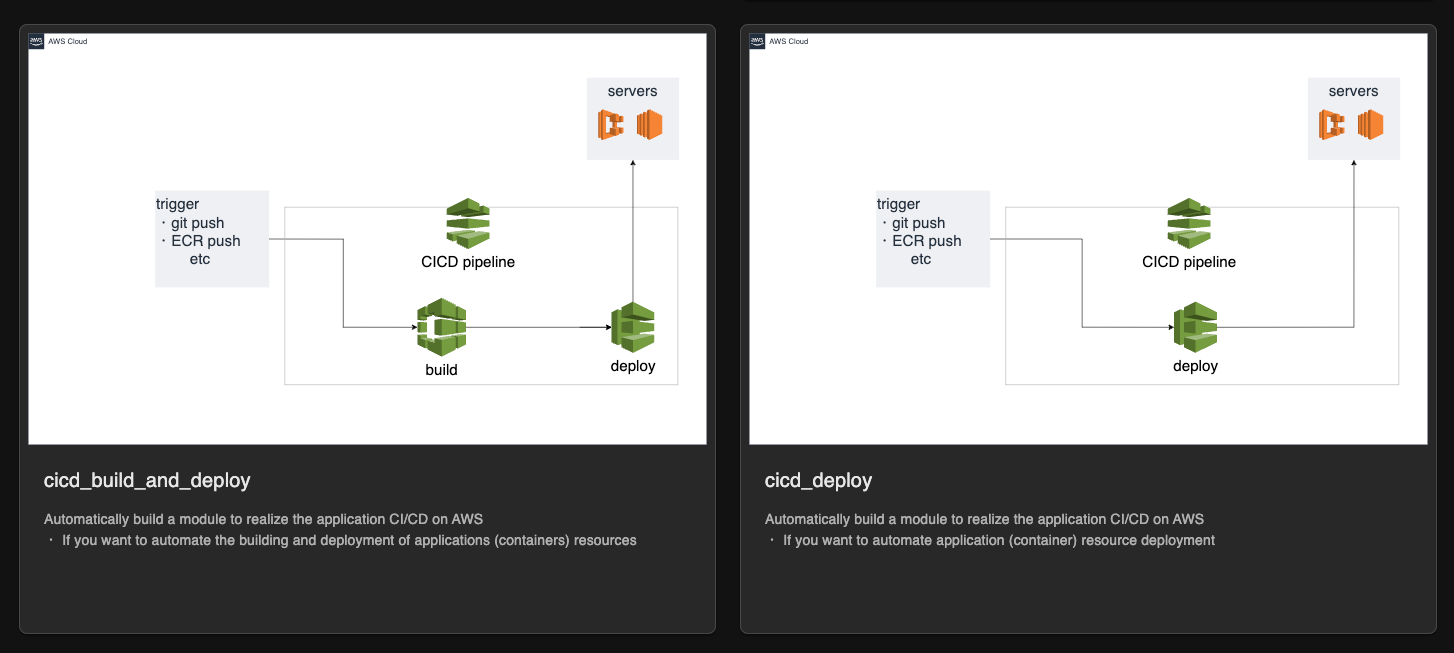

PrismScaler automatically builds a module that implements application CI/CD on AWS. It is useful when:

・ You want to automate the building and deployment of application resources (containers)

・ You want to add an approval step before the build in a production environment, etc.

PrismScaler also supports many kinds of pipelines on AWS, such as:


Estimated Time: 5-10 minutes (without DevOps knowledge)

6. ECR and ECS basics

Amazon Elastic Container Registry (ECR) is a fully managed container registry service provided by AWS. It stores and manages Docker container images, making it easier to build and deploy containerized applications.

Image Storage:

- ECR provides a secure and scalable repository to store your Docker container images. Each repository can hold multiple versions of your images.

- Images are stored within ECR repositories, and you can organize repositories based on your application's needs.

Access Control:

- ECR integrates seamlessly with AWS Identity and Access Management (IAM) for fine-grained access control. You can define who has permission to push or pull images from each repository.

- You can set up resource-based policies to control access at the repository level, ensuring that only authorized users and services can interact with the images.

Pushing and Pulling Images:

- You can use the standard Docker CLI to push (upload) your container images to ECR and pull (download) them from ECR.

- ECR provides a unique URI for each repository that you can use as the destination when pushing images from your local development environment or a CI/CD pipeline.

Lifecycle Policies:

- ECR allows you to define lifecycle policies to automate the cleanup of older images. You can set rules that keep only a specific number of images, or that expire images a given number of days after they were pushed. Rules can optionally be filtered by tag status or tag prefix.

- This ensures that you don't accumulate unnecessary or outdated images, helping you manage storage costs.

Integration with ECS and Kubernetes:

- ECR seamlessly integrates with Amazon ECS (Elastic Container Service) and Kubernetes, making it a natural choice when deploying containerized applications using these orchestration platforms.

- This integration simplifies deployment: ECS tasks or Kubernetes pods can pull images directly from ECR. This requires that the IAM role they use (for example, the ECS task execution role) has permission to pull from the repository.

Image Scanning:

- ECR offers image scanning, run on push or on demand, that analyzes images for software vulnerabilities. This helps you identify security issues before deploying images into production.

- If vulnerabilities are detected, you can take appropriate actions to address them.

High Availability:

- ECR is built with high availability in mind, ensuring that your container images are accessible and reliable.

- Images are replicated across multiple availability zones, and ECR handles the underlying infrastructure, so you don't need to worry about server maintenance.
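ECR lifecycle policies are JSON documents. The Python sketch below builds a policy with a single rule that keeps only the newest N images. This is the JSON you would paste into the ECR console or pass to aws ecr put-lifecycle-policy. The sketch also includes a simplified local simulation of which images that rule would expire. The tags and push timestamps are made up, and the simulation treats every entry as one image.

```python
import json
from typing import Dict, List


def keep_last_n_policy(count: int) -> dict:
    """ECR lifecycle policy with one rule: expire all but the newest `count` images."""
    return {
        "rules": [
            {
                "rulePriority": 1,
                "description": f"Keep only the last {count} images",
                "selection": {
                    "tagStatus": "any",
                    "countType": "imageCountMoreThan",
                    "countNumber": count,
                },
                "action": {"type": "expire"},
            }
        ]
    }


def images_to_expire(pushed_at: Dict[str, int], count: int) -> List[str]:
    """Local simulation of the rule: everything older than the newest `count` pushes."""
    newest_first = sorted(pushed_at, key=lambda tag: pushed_at[tag], reverse=True)
    return newest_first[count:]


if __name__ == "__main__":
    print(json.dumps(keep_last_n_policy(10), indent=2))
    # Made-up tags mapped to push timestamps (larger = newer).
    print(images_to_expire({"v1": 100, "v2": 200, "v3": 300, "v4": 400}, 2))
```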

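Amazon Elastic Container Service (ECS) is a fully managed container orchestration service provided by AWS.

Container Orchestration:

- ECS helps you orchestrate and manage the execution of Docker containers. It handles the complexities of deploying, scaling, and maintaining containers, allowing you to focus on your application logic.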

- ECS ensures that your containers are placed on appropriate resources based on their requirements and availability.

Scalability:

- ECS enables you to scale your application easily by adjusting the number of tasks running in a service.

- You can use auto-scaling policies to dynamically adjust the number of running containers based on resource utilization, traffic, or other metrics.

Resource Efficiency:

- ECS helps optimize resource utilization by efficiently packing containers on a cluster of EC2 instances or using AWS Fargate, a serverless compute engine for containers.

- This reduces infrastructure costs by maximizing the utilization of your underlying compute resources.

Load Balancing:

- ECS seamlessly integrates with Elastic Load Balancing (ELB) services, allowing you to distribute incoming traffic across multiple containers or instances.

- This ensures high availability and improved performance for your applications.

Service Discovery:

- ECS supports service discovery, making it easier for your containers to discover and communicate with each other. This is essential for microservices architecture.

- You can use Route 53 for DNS-based service discovery or integrate with other service discovery mechanisms.

Deployment Strategies:

- ECS provides rolling updates and blue/green deployments, allowing you to release new versions of your application without causing downtime or disruptions.

- This helps maintain the availability and stability of your application.

Cost:

- ECS supports different pricing models, including On-Demand EC2 instances and serverless Fargate tasks.

- Fargate, in particular, allows you to run containers without managing the underlying infrastructure, which can lead to cost savings by eliminating the need to provision and manage EC2 instances.

Integration with AWS Services:

- ECS seamlessly integrates with other AWS services, such as Amazon ECR (Elastic Container Registry), Amazon CloudWatch for monitoring, AWS Identity and Access Management (IAM) for security, and more.
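Rolling updates on an ECS service are governed by two deployment settings, minimumHealthyPercent and maximumPercent. ECS computes the lower limit on running tasks as desiredCount × minimumHealthyPercent / 100, rounded up. It computes the upper limit as desiredCount × maximumPercent / 100, rounded down. The small Python helper below performs that calculation locally and is not an AWS API call. It shows what the percentages mean in task counts.

```python
from typing import Tuple


def rolling_update_bounds(desired_count: int,
                          minimum_healthy_percent: int = 100,
                          maximum_percent: int = 200) -> Tuple[int, int]:
    """Min and max running tasks an ECS rolling update may use."""
    # Lower limit: desired * minimumHealthyPercent / 100, rounded up.
    lower = -(-desired_count * minimum_healthy_percent // 100)
    # Upper limit: desired * maximumPercent / 100, rounded down.
    upper = desired_count * maximum_percent // 100
    return lower, upper


if __name__ == "__main__":
    for settings in [(4, 100, 200), (4, 50, 200), (3, 50, 150)]:
        print(settings, "->", rolling_update_bounds(*settings))
```

With the defaults (100 and 200), ECS can start a full set of new tasks before stopping old ones. Lowering minimumHealthyPercent lets it stop old tasks first, which helps when the cluster has no spare capacity.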