1. Introduction

This article is a comprehensive guide to AWS load balancing for Amazon ECS (Elastic Container Service). Load balancing is essential for keeping containerized applications highly available and scalable.

2. Purpose

The purpose of this article is to explain AWS load balancing concepts and to demonstrate how to set up load balancing for ECS services. It also highlights the differences between the EC2 and Fargate launch types and explains how to scale containers with auto scaling.

3. What is an AWS load balancer?

Amazon Web Services (AWS) provides several types of load balancers to distribute incoming traffic across multiple targets, such as Amazon EC2 instances, containers, and IP addresses, to ensure high availability, fault tolerance, and efficient resource utilization. Load balancers play a crucial role in improving the performance and reliability of applications hosted in the cloud.

Here are the main types of AWS load balancers:

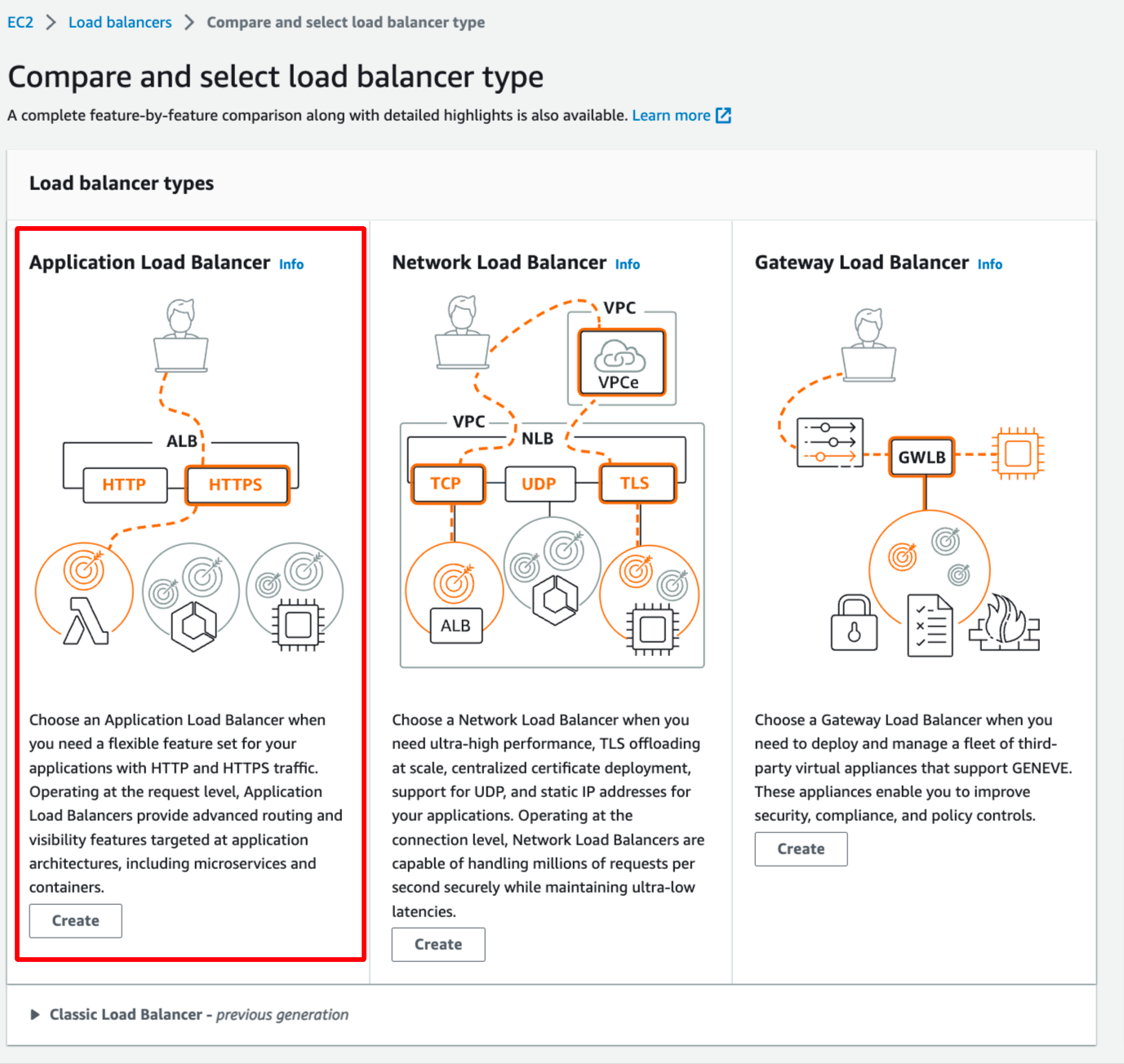

- Application Load Balancer (ALB):

  - ALB operates at the application layer (Layer 7) and is best suited for routing HTTP/HTTPS traffic.

  - It supports content-based routing, for example by URL path or host header, using listeners and rules.

  - ALB can distribute traffic to multiple targets, such as EC2 instances, ECS tasks, and Lambda functions.

  - It can be integrated with AWS WAF (Web Application Firewall) for security enhancements.

- Network Load Balancer (NLB):

  - NLB operates at the transport layer (Layer 4) and is designed to handle very high volumes of traffic efficiently.

  - It's suited for TCP/UDP-based traffic and provides ultra-low latency.

  - NLB can distribute traffic to targets within the same or different Availability Zones.

- Gateway Load Balancer (GWLB):

  - GWLB is used for routing traffic through third-party virtual appliances, such as firewalls and intrusion detection and prevention systems.

  - It operates at the network layer (Layer 3): it forwards IP packets to the appliances using the GENEVE protocol instead of applying Layer 7 routing policies.

Key benefits of AWS load balancers:

- High Availability: Load balancers route traffic only to healthy targets, so your applications remain available even if some targets fail.

- Auto Scaling: Load balancers integrate with Auto Scaling groups and ECS Service Auto Scaling, so targets are registered and deregistered automatically as your application scales up or down.

- Security: ALB and NLB can terminate SSL/TLS, which centralizes certificate management (for example with AWS Certificate Manager) and offloads encryption and decryption work from your targets.

- Health Checks: Load balancers perform regular health checks on targets to make sure they are responsive and healthy.

- Efficient Traffic Distribution: Load balancers spread requests across healthy targets to achieve balanced resource utilization and performance.

- Centralized Management: Elastic Load Balancing is a managed service, which reduces the operational overhead of configuring and maintaining complex load balancing setups.
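As a minimal sketch of the ALB rule-based routing described above, the Python below builds keyword arguments in the shape of boto3's `elbv2.create_rule()` and pairs them with a toy evaluator that mimics priority ordering: the lowest priority number is checked first, and the listener's default action applies when nothing matches. The listener ARN and target group names are hypothetical placeholders, and `fnmatchcase` only approximates ALB's case-sensitive `*` and `?` wildcards.

```python
from fnmatch import fnmatchcase


def build_path_rule(listener_arn: str, priority: int, pattern: str, target_group_arn: str) -> dict:
    """Keyword arguments shaped for boto3's elbv2.create_rule(**params)."""
    return {
        "ListenerArn": listener_arn,
        "Priority": priority,  # lower numbers are evaluated first
        "Conditions": [
            {"Field": "path-pattern", "PathPatternConfig": {"Values": [pattern]}}
        ],
        "Actions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }


def route(path: str, rules: list, default_target: str) -> str:
    """Toy model: return the target group of the first matching rule by priority."""
    for rule in sorted(rules, key=lambda r: r["Priority"]):
        patterns = rule["Conditions"][0]["PathPatternConfig"]["Values"]
        # fnmatchcase approximates ALB's case-sensitive wildcard matching
        if any(fnmatchcase(path, p) for p in patterns):
            return rule["Actions"][0]["TargetGroupArn"]
    return default_target  # the listener's default action


# Hypothetical rules: API traffic and static files go to different target groups.
rules = [
    build_path_rule("listener-arn", 10, "/api/*", "api-tg"),
    build_path_rule("listener-arn", 20, "/static/*", "web-tg"),
]
print(route("/api/users", rules, "web-tg"))   # api-tg
print(route("/index.html", rules, "web-tg"))  # web-tg
```

In a real deployment, the listener itself performs this evaluation; the toy `route()` function only makes the priority-then-default behavior visible.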

4. What is ECS?

Amazon Elastic Container Service (ECS) is a fully managed container orchestration service provided by Amazon Web Services (AWS). It enables you to easily run, scale, and manage containerized applications using Docker containers. ECS simplifies the deployment and management of containers by handling the underlying infrastructure, allowing you to focus on your application logic.

Key features of AWS ECS include:

- Container Management: ECS allows you to define and run Docker containers without having to manage the underlying infrastructure. You can create and manage container clusters where your applications' containers are deployed.

- Scalability: ECS makes it easy to scale your applications up or down based on demand. You can use Auto Scaling to automatically adjust the number of containers running in response to changes in traffic.

- Integration: It integrates seamlessly with other AWS services like Elastic Load Balancing, Amazon VPC (Virtual Private Cloud), IAM (Identity and Access Management), and CloudWatch for monitoring and logging.

- Task Definitions: You define your application's containers and their configurations using task definitions. These definitions specify which Docker images to use, CPU and memory requirements, and networking settings such as the network mode and port mappings.

- Scheduling: ECS supports different scheduling strategies, including the ability to place containers on specific instances or let ECS choose where to place them based on available resources and constraints.

- Service Discovery: ECS integrates with AWS Cloud Map, which can manage DNS records in Amazon Route 53, making it easier for containers to discover and communicate with each other.

- Security: ECS integrates with AWS Identity and Access Management (IAM) for fine-grained access control to your containers and resources.

- Managed Container Registry: AWS provides Amazon Elastic Container Registry (ECR) for storing and managing Docker images securely. ECS can easily pull images from ECR for container deployments.

- Compatibility: Tooling such as the Amazon ECS CLI can deploy applications described in Docker Compose files, which can ease migrating existing Docker-based applications to AWS ECS.

- EC2 Launch Type: In this mode, ECS manages containers on a cluster of EC2 instances that you provision and manage. You need to set up and maintain the EC2 instances yourself.

- Fargate Launch Type: With Fargate, you don't need to worry about the underlying EC2 instances. AWS takes care of provisioning and managing them for you. You can focus solely on your containers.

The two launch types compare as follows:

- Resource Management:

  - EC2 Launch Type:

    - Requires you to provision and manage the EC2 instances your containers run on.

    - You have full control over the EC2 instances, including instance types, scaling policies, and OS configuration.

    - Well suited for applications that require specific instance types or configurations, or when you want to use existing EC2 infrastructure.

  - Fargate Launch Type:

    - AWS manages the underlying infrastructure for you, and you don't have direct access to the servers that run your tasks.

    - Ideal when you want to abstract away infrastructure details and focus solely on running containers.

    - Simplifies deployment by eliminating instance management, which makes it a good fit for serverless and microservices architectures.

- Scaling:

  - EC2 Launch Type:

    - In addition to scaling the number of tasks, you scale the EC2 instances themselves, for example with Amazon EC2 Auto Scaling.

    - You have granular control over the instance scaling policies and can configure the scaling rules yourself.

  - Fargate Launch Type:

    - Fargate abstracts away the underlying servers, so there is no instance capacity for you to scale.

    - Instead, you define Service Auto Scaling policies that automatically adjust the number of tasks in the service.

- Pricing:

  - EC2 Launch Type:

    - You pay for the EC2 instances you provision, as well as any other AWS resources you use (e.g., load balancers).

    - Pricing depends on the instance types, Region, and usage.

  - Fargate Launch Type:

    - Pricing is based on the vCPU and memory resources allocated to your tasks, with no separate charges for EC2 instances.

    - Fargate offers a more predictable and granular pricing model.

- Isolation:

  - EC2 Launch Type:

    - Containers on the same EC2 instance share the underlying hardware resources.

    - You can use ECS task placement strategies and constraints to control which instances your tasks are placed on.

  - Fargate Launch Type:

    - Each Fargate task runs in its own isolation boundary and does not share the kernel, CPU, or memory with other tasks.

    - This isolation strengthens security and reduces the "noisy neighbor" problem.

- Complexity and Control:

  - EC2 Launch Type:

    - Offers more control over the underlying infrastructure but requires additional management work.

    - Suitable when you have specific infrastructure requirements or want to run other applications alongside ECS containers.

  - Fargate Launch Type:

    - Simplifies infrastructure management but may be less suitable for complex or specialized setups.

    - Ideal for a serverless, hands-off container deployment experience.
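To illustrate how the launch type shapes a task definition, here is a sketch that returns keyword arguments in the shape of boto3's `ecs.register_task_definition()`. The family name `web-app`, the container name `web`, and the sizes are hypothetical. A real Fargate definition usually also needs an `executionRoleArn` if it pulls images from a private ECR repository or sends logs to CloudWatch.

```python
def build_task_definition(launch_type: str, image: str, container_port: int = 80) -> dict:
    """Hypothetical task definition parameters for register_task_definition(**params)."""
    if launch_type == "FARGATE":
        # Fargate requires the awsvpc network mode and task-level CPU/memory,
        # which the API expects as strings.
        return {
            "family": "web-app",
            "requiresCompatibilities": ["FARGATE"],
            "networkMode": "awsvpc",
            "cpu": "256",     # 0.25 vCPU
            "memory": "512",  # MiB
            "containerDefinitions": [{
                "name": "web",
                "image": image,
                "essential": True,
                # In awsvpc mode the task has its own ENI, so no host port remapping.
                "portMappings": [{"containerPort": container_port, "protocol": "tcp"}],
            }],
        }
    if launch_type == "EC2":
        # bridge mode with hostPort 0 lets Docker pick a free host port
        # (dynamic port mapping), so several copies can share one instance.
        return {
            "family": "web-app",
            "requiresCompatibilities": ["EC2"],
            "networkMode": "bridge",
            "containerDefinitions": [{
                "name": "web",
                "image": image,
                "essential": True,
                "memory": 512,  # hard memory limit in MiB for this container
                "portMappings": [
                    {"containerPort": container_port, "hostPort": 0, "protocol": "tcp"}
                ],
            }],
        }
    raise ValueError(f"unsupported launch type: {launch_type}")
```

The `hostPort: 0` in the EC2 variant relies on dynamic port mapping. ECS registers each task as an instance ID plus port, which is why bridge-mode services use the Instances target type.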

5. Creating AWS load balancing for ECS with the AWS console

This section provides a step-by-step guide on how to set up AWS load balancing for ECS services using the AWS Management Console. It covers creating load balancers, defining target groups, and integrating them with ECS services.

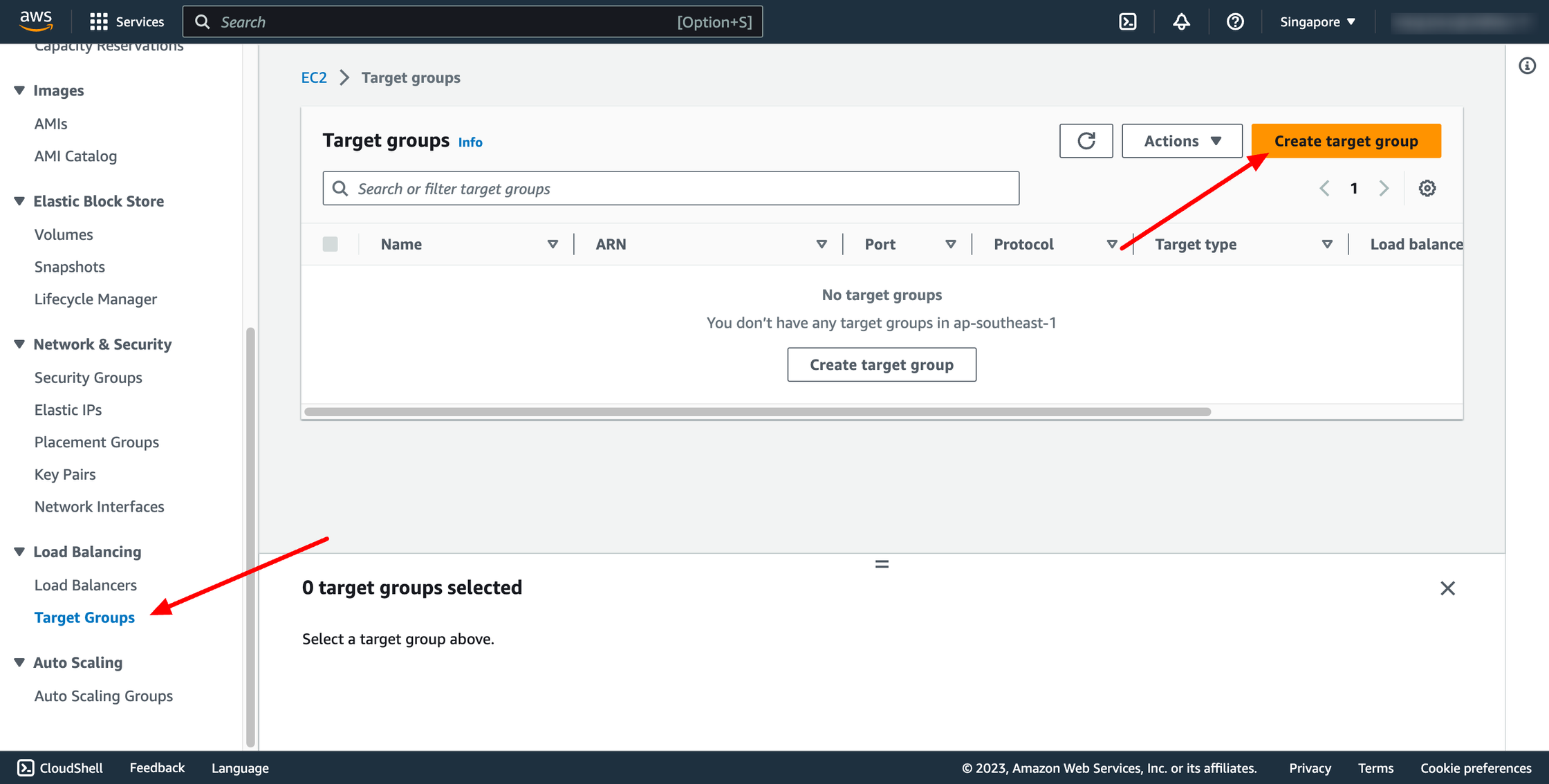

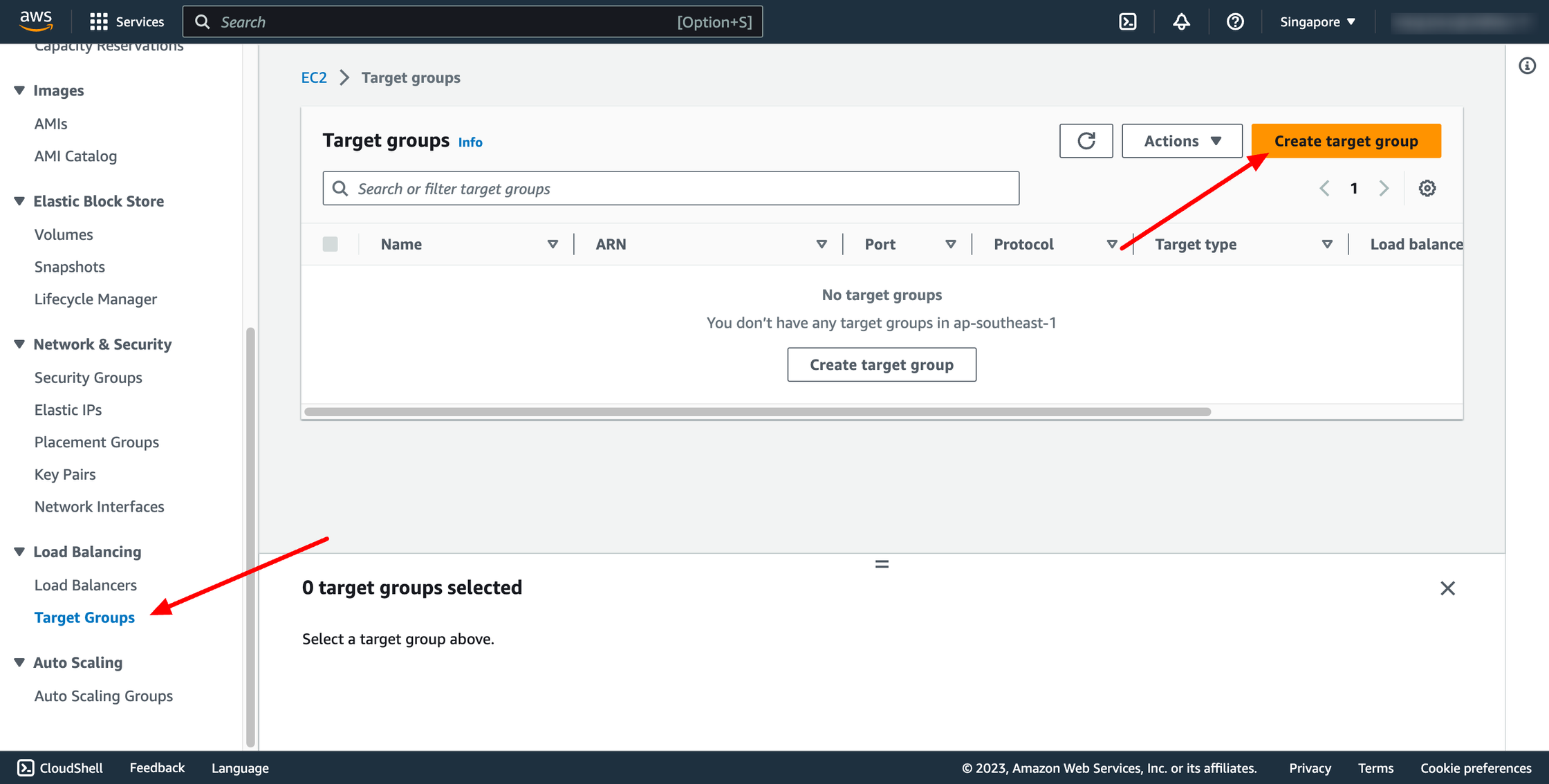

Step 1. Create target group

Each target group is used to route requests to one or more registered targets. When a rule condition is met, traffic is forwarded to the corresponding target group.

In the EC2 console, go to Load Balancing → Target Groups → click Create target group:

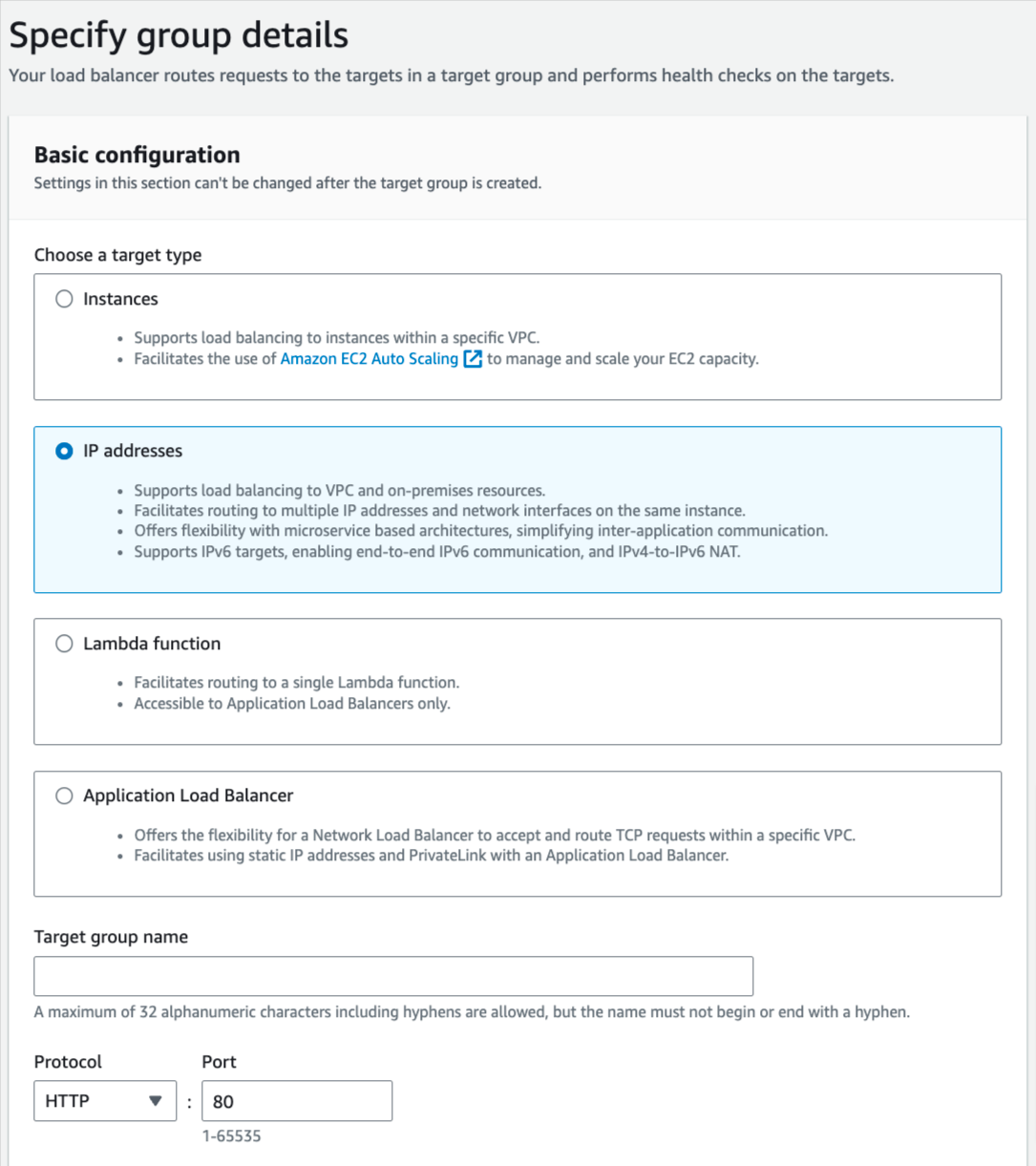

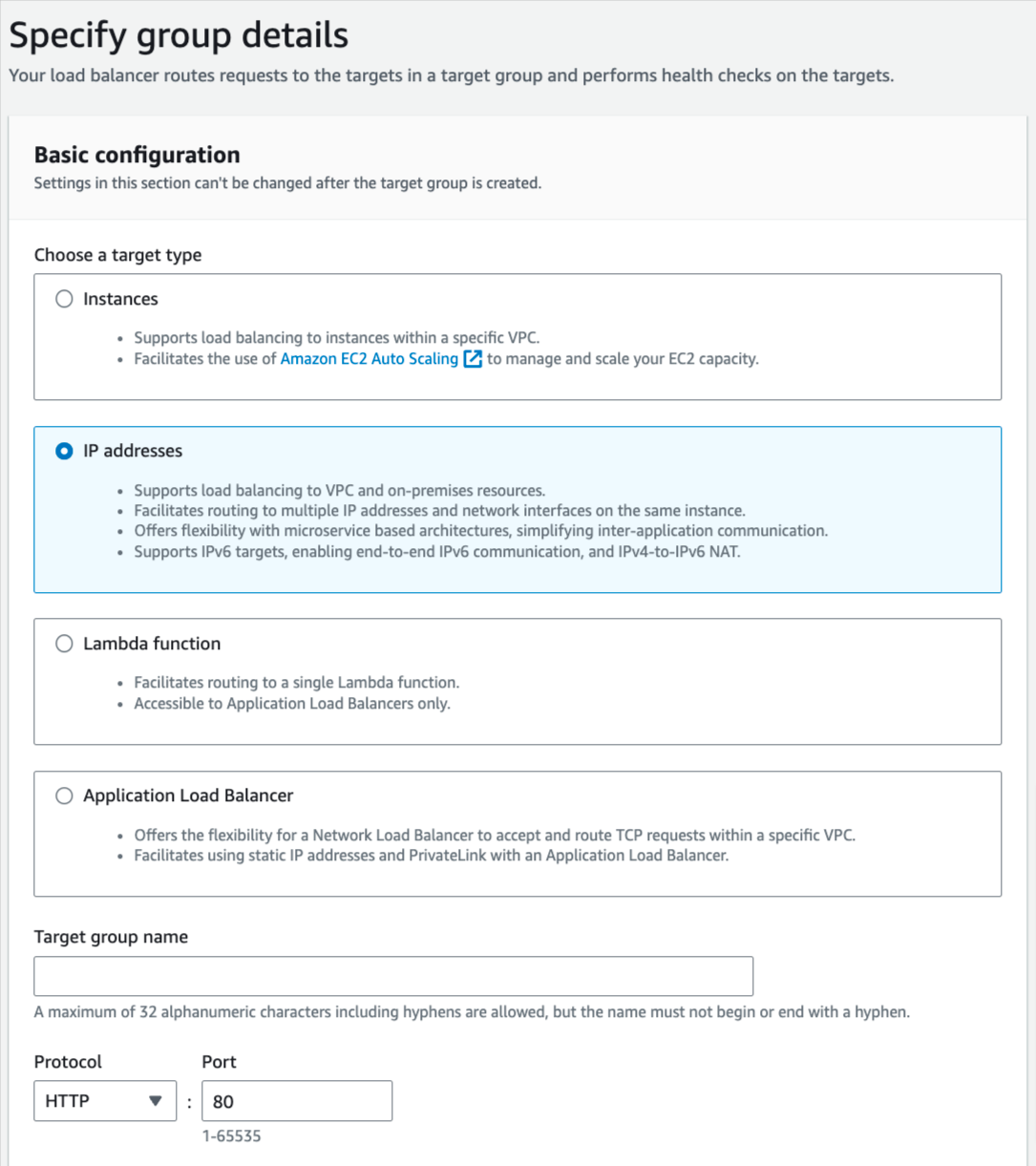

For Choose a target type, choose Instances to register targets by instance ID, IP addresses to register targets by IP address, or Lambda function to register a Lambda function as a target.

If your service's task definition uses the awsvpc network mode (which is required for the Fargate launch type), you must choose IP addresses as the target type. This is because tasks that use the awsvpc network mode are associated with an elastic network interface, not an Amazon EC2 instance.

If the ECS service uses the EC2 launch type with the bridge or host network mode, choose Instances.

If the ECS service uses the Fargate launch type, choose IP addresses.


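You can skip registering targets for now: ECS registers the service's tasks in the target group automatically once the service is attached to it in Step 3. Then, create the target group.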

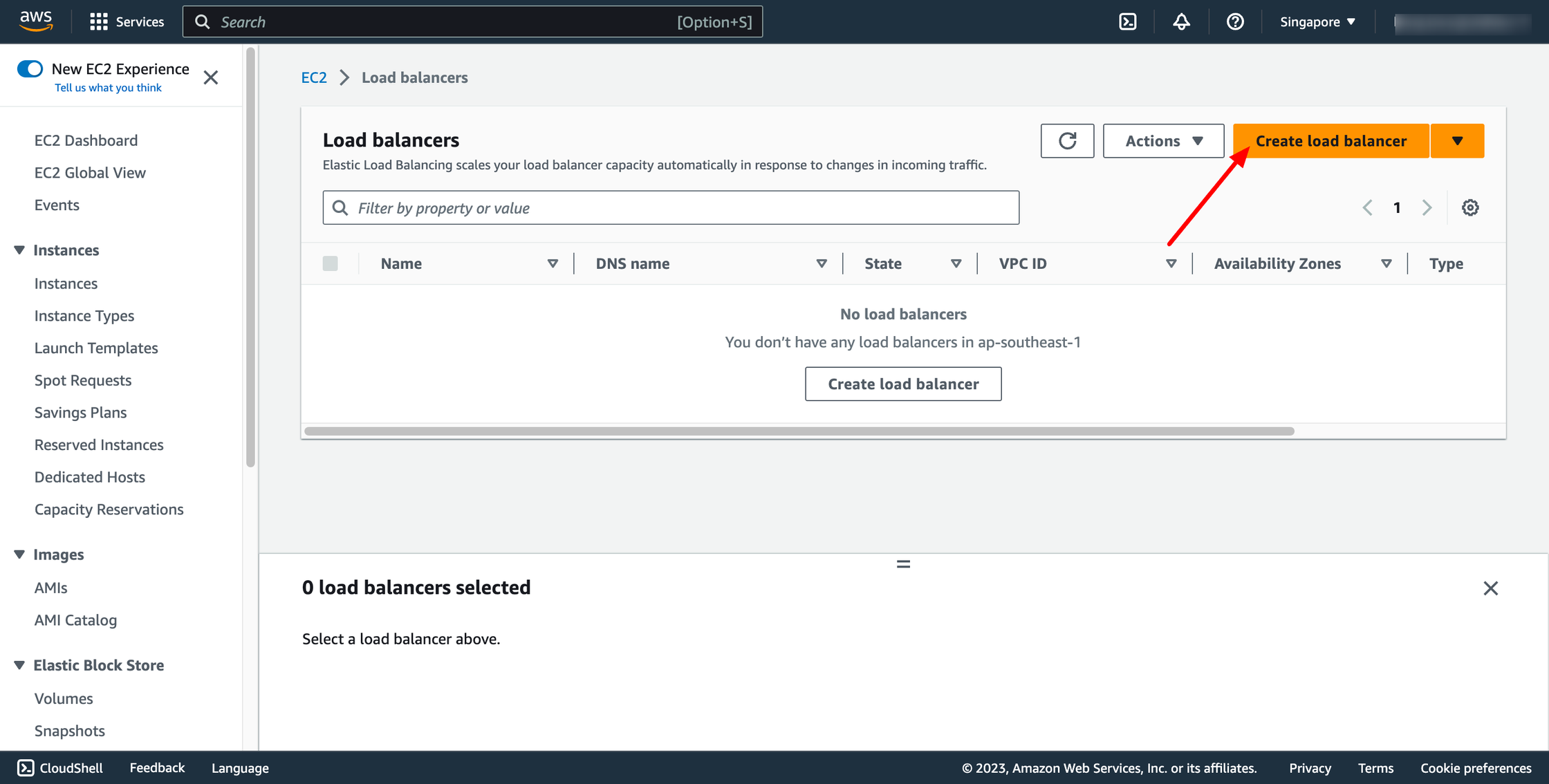
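The target-type rule in Step 1 can be sketched in Python as below. The function returns keyword arguments in the shape of boto3's `elbv2.create_target_group()`; the name, VPC ID, and health check values are hypothetical, so adjust them to your application.

```python
def target_type_for(network_mode: str) -> str:
    """Map an ECS network mode to a target group TargetType."""
    if network_mode == "awsvpc":
        # awsvpc tasks get their own elastic network interface -> register by IP
        return "ip"
    if network_mode in ("bridge", "host"):
        # tasks share the instance's network -> register by instance ID
        return "instance"
    raise ValueError(f"network mode {network_mode!r} cannot be load balanced")


def build_target_group(name: str, vpc_id: str, network_mode: str, port: int = 80) -> dict:
    """Keyword arguments shaped for boto3's elbv2.create_target_group(**params)."""
    return {
        "Name": name,
        "Protocol": "HTTP",
        "Port": port,
        "VpcId": vpc_id,
        "TargetType": target_type_for(network_mode),
        "HealthCheckPath": "/",  # hypothetical: point this at your app's health endpoint
        "HealthCheckIntervalSeconds": 30,
        "HealthyThresholdCount": 2,
        "UnhealthyThresholdCount": 3,
    }


print(build_target_group("web-tg", "vpc-0123", "awsvpc")["TargetType"])  # ip
```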

Step 2. Create Load balancer

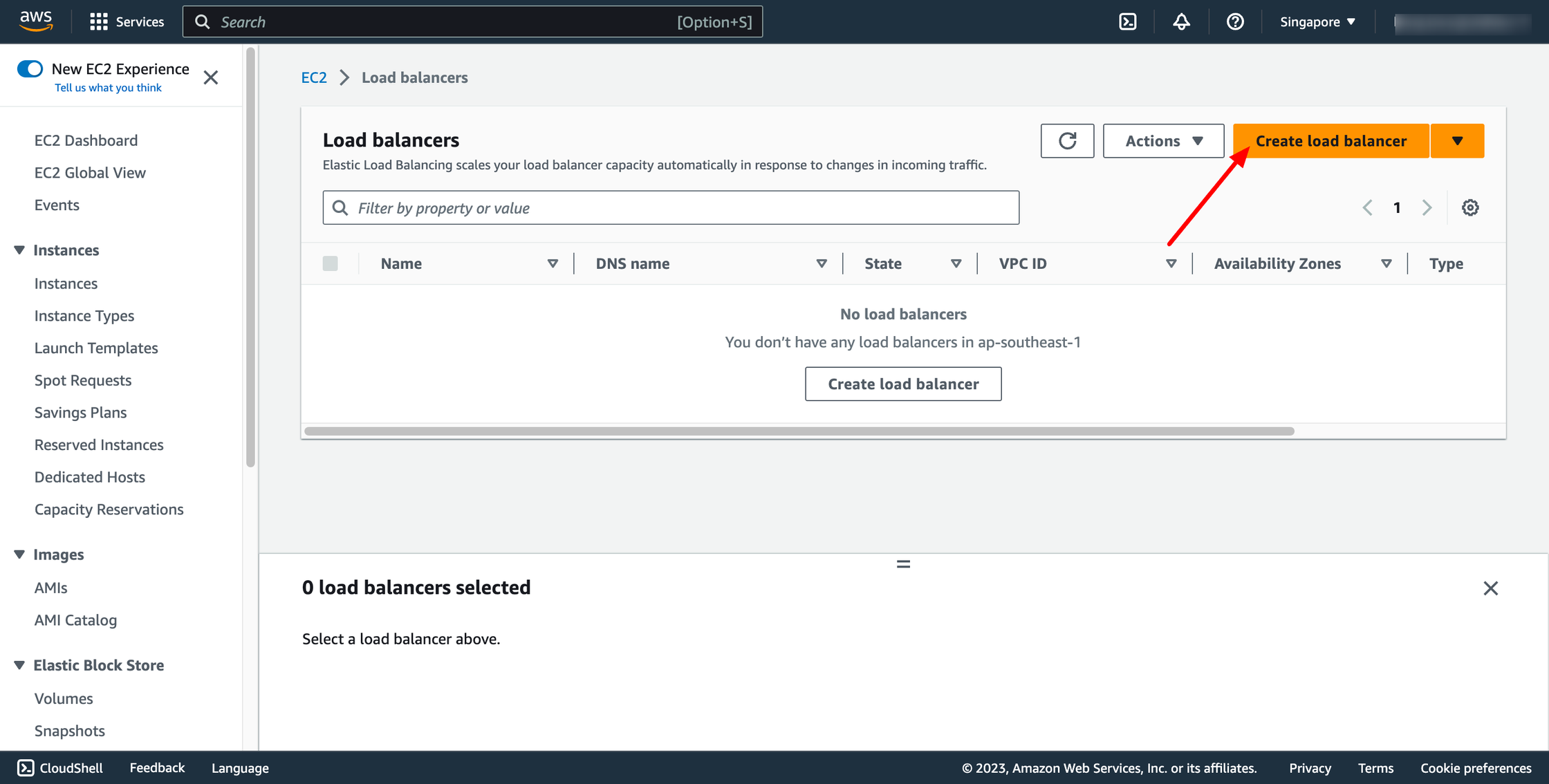

Navigate to Load Balancers → click Create load balancer:


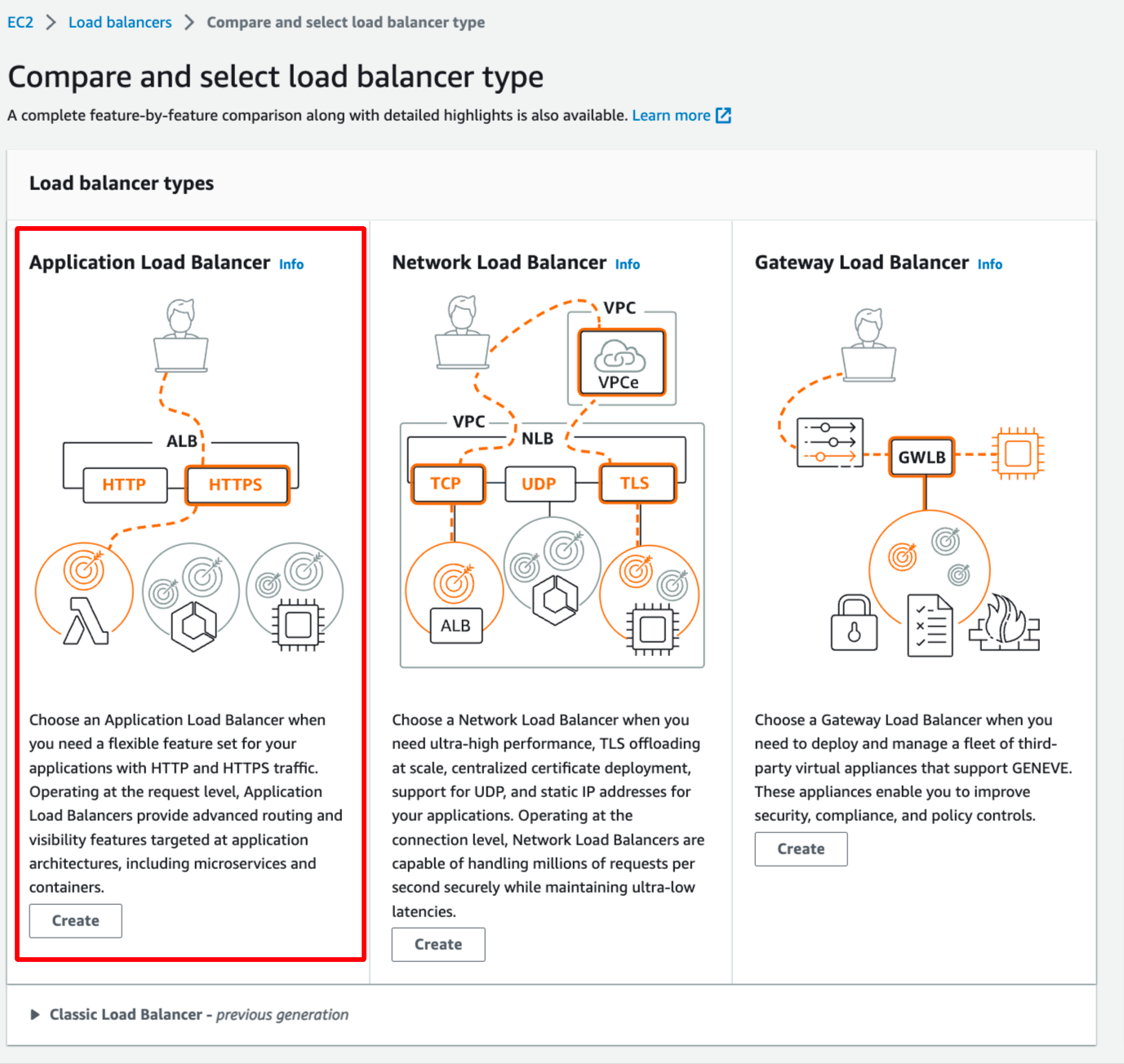

In this blog, we use an Application Load Balancer, so choose it:

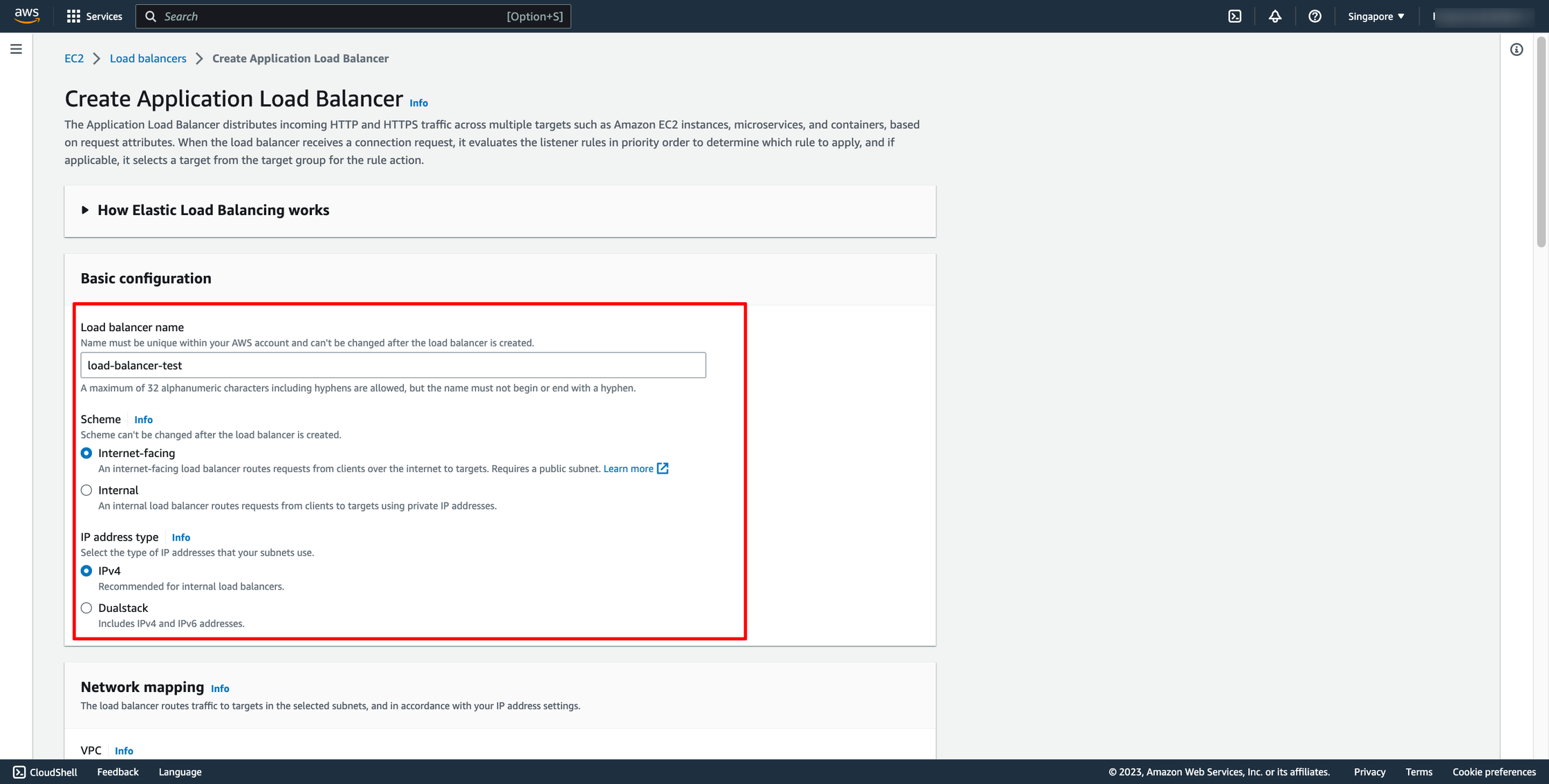

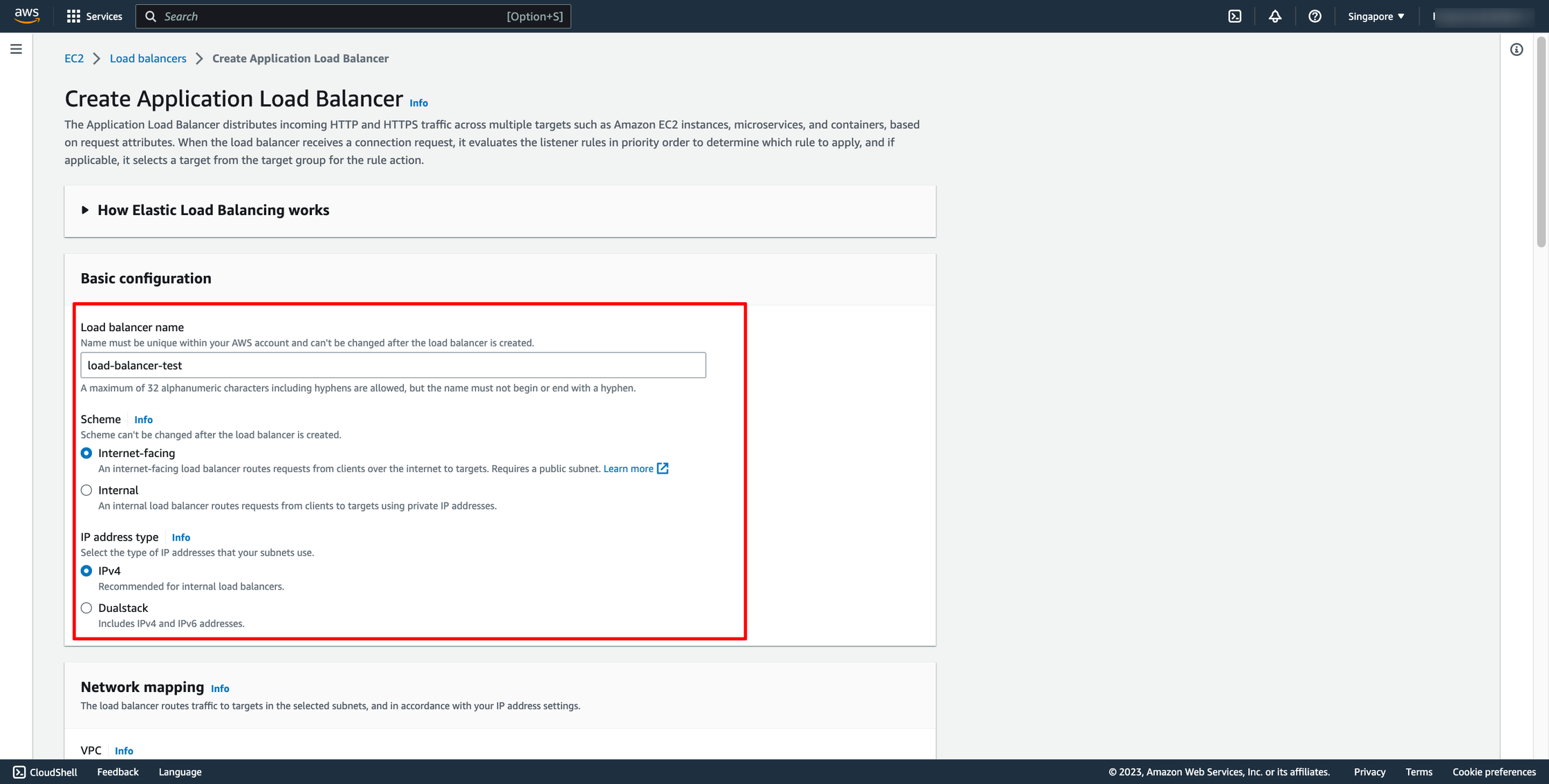

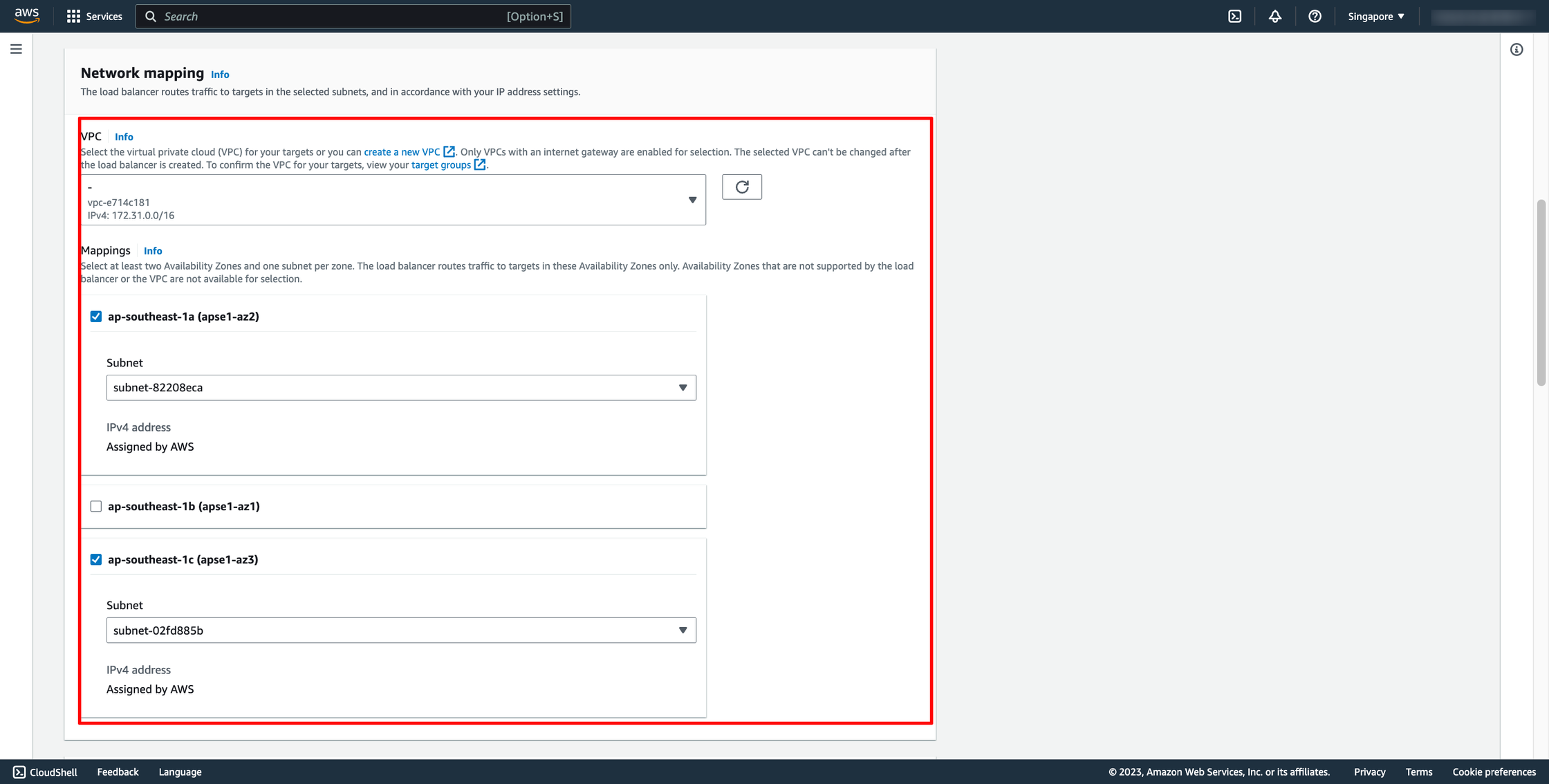

Configure basic configuration:

Configure basic configuration:

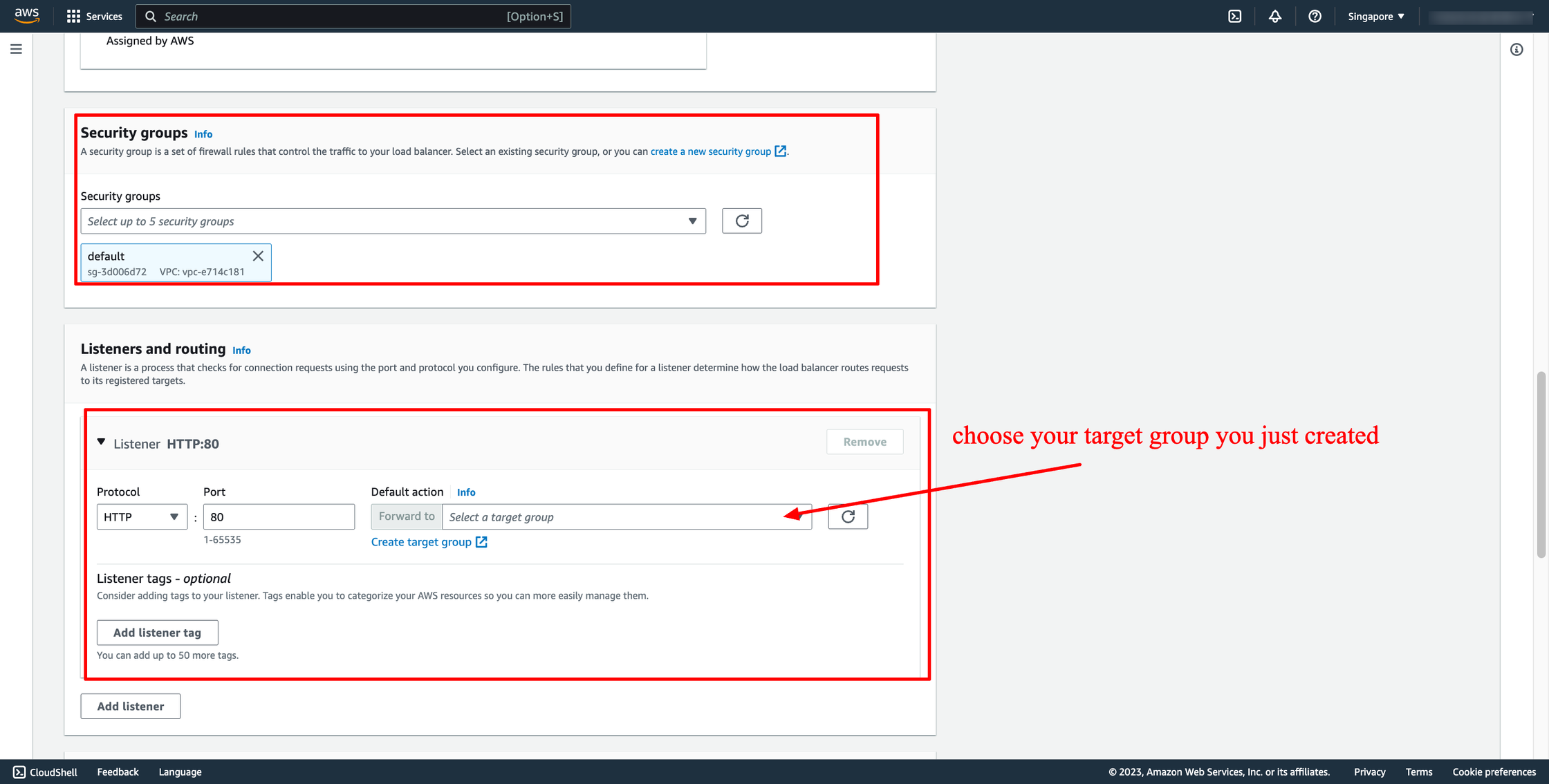

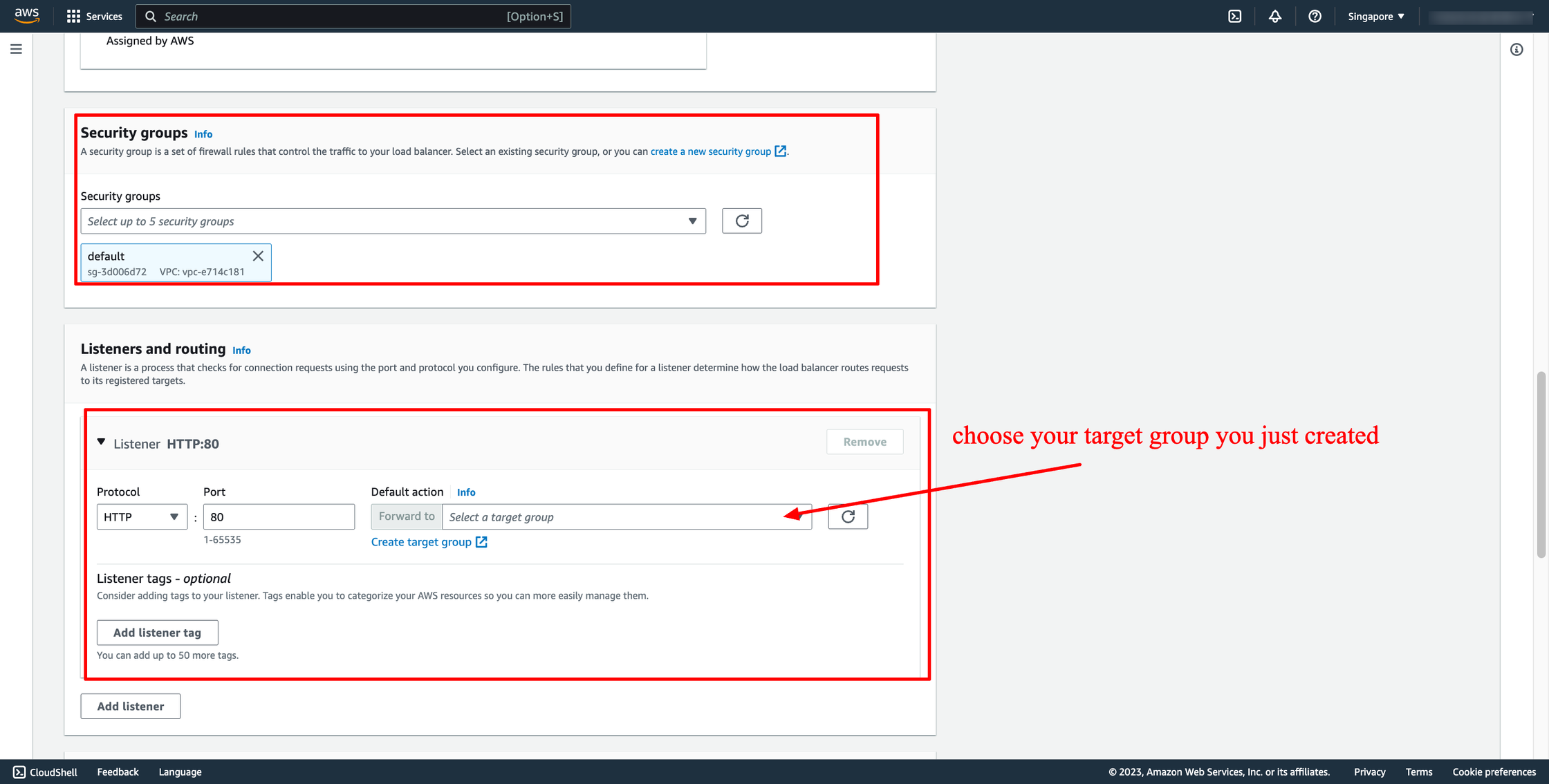

Choose Security group and Target group:

Note: Please make sure your security group has enough rules for access from Internet like port 80 and port 443.

Choose Security group and Target group:

Note: Please make sure your security group has enough rules for access from Internet like port 80 and port 443.

Then, create load balancer.

Waiting for the state of Load balancer from “Provisioning” to “Active”.

Step 3. Create ECS service with Load balancer

Assuming you already have an ECS cluster and task definition. Now, you just need to create a new service in your cluster.

Go to your ECS cluster → In the Services tab → Choose “Create”.

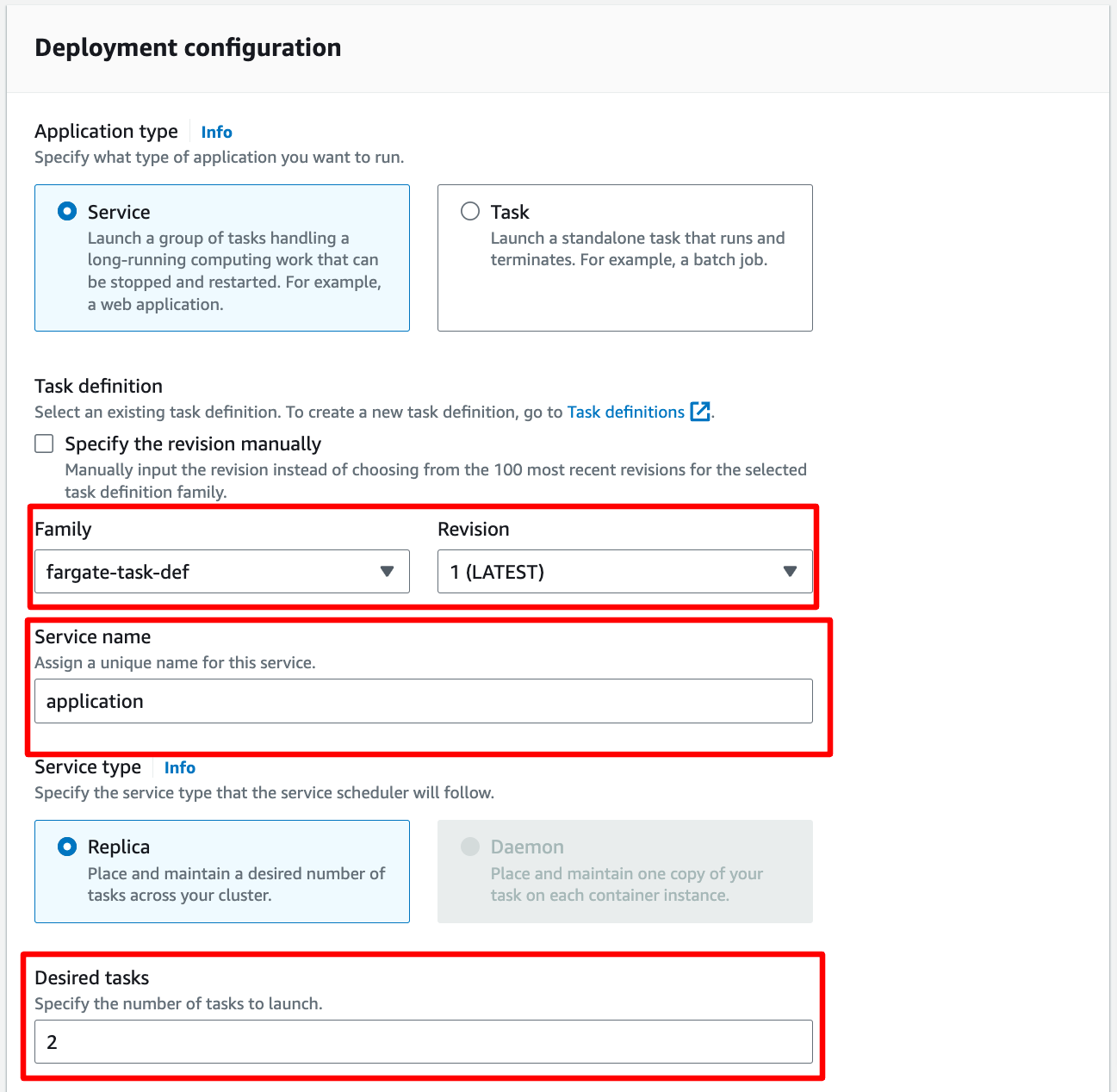

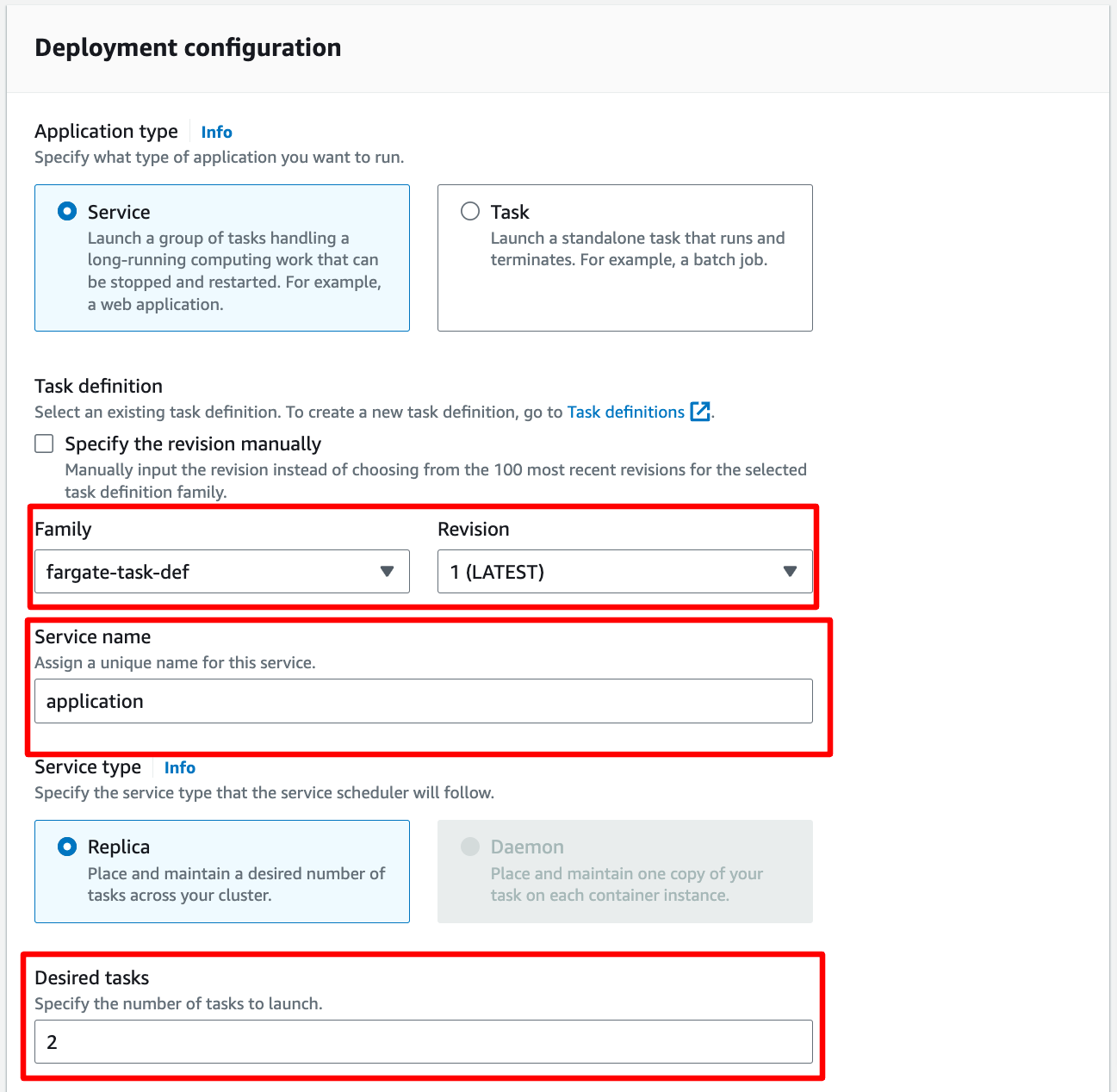

In Deployment configuration, choose your task definition, enter the service name and desired tasks. You should enter the number of tasks is greater than one because we have ALB here, we want to load balancing between many tasks.

Then, create load balancer.

Waiting for the state of Load balancer from “Provisioning” to “Active”.

Step 3. Create ECS service with Load balancer

Assuming you already have an ECS cluster and task definition. Now, you just need to create a new service in your cluster.

Go to your ECS cluster → In the Services tab → Choose “Create”.

In Deployment configuration, choose your task definition, enter the service name and desired tasks. You should enter the number of tasks is greater than one because we have ALB here, we want to load balancing between many tasks.

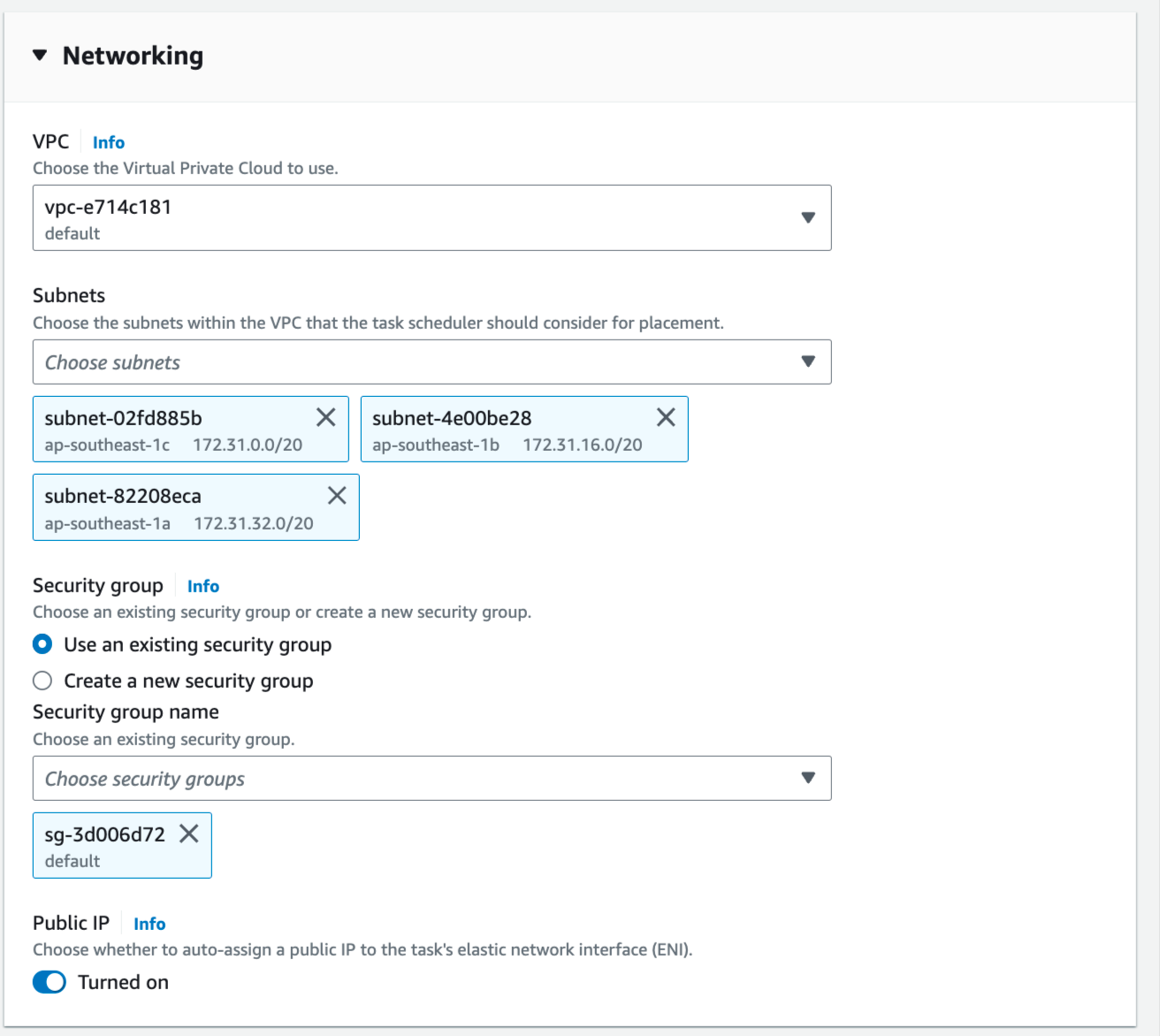

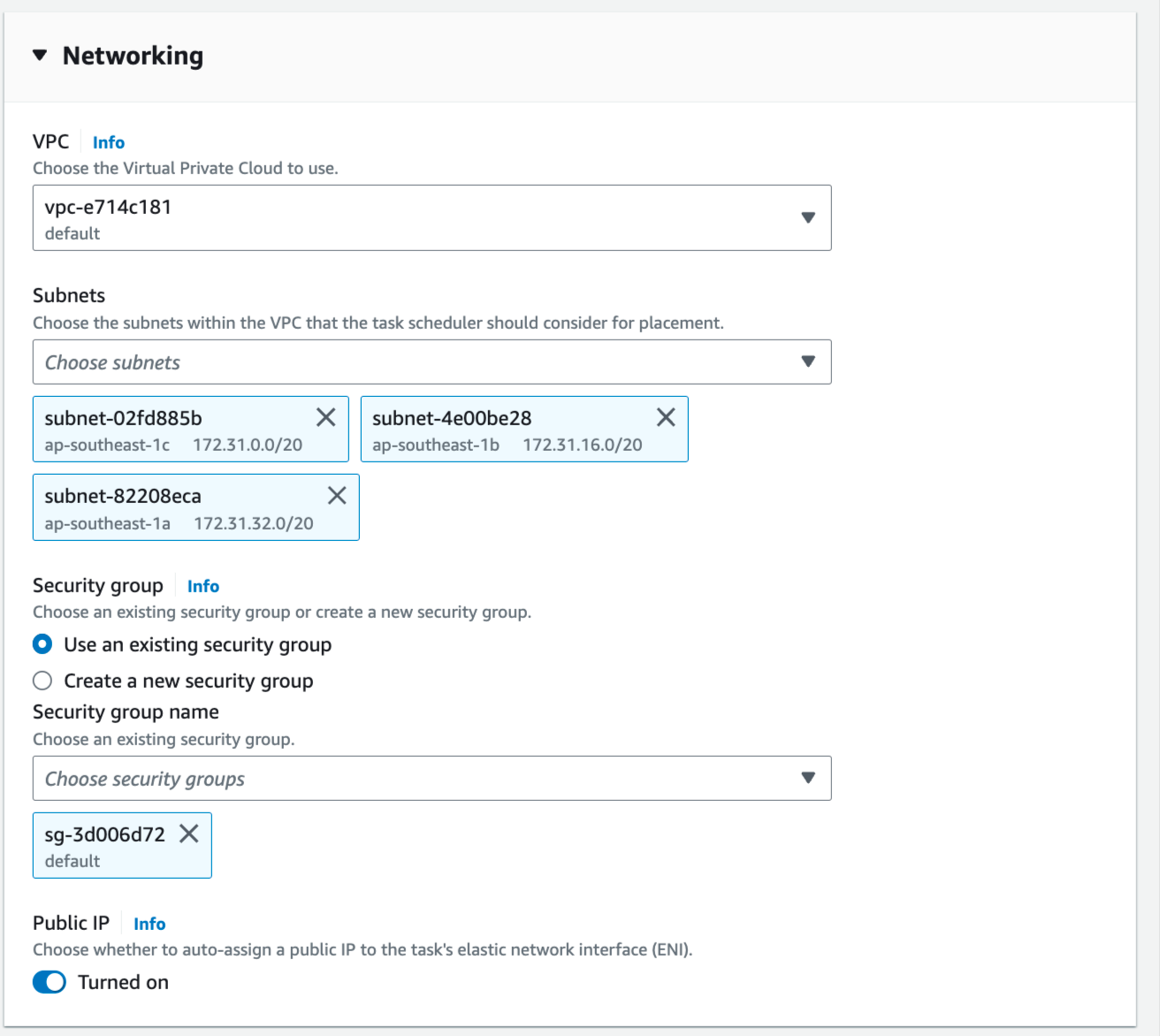

In Networking, you can leave them by default or configure with another VPC:

In Networking, you can leave them by default or configure with another VPC:

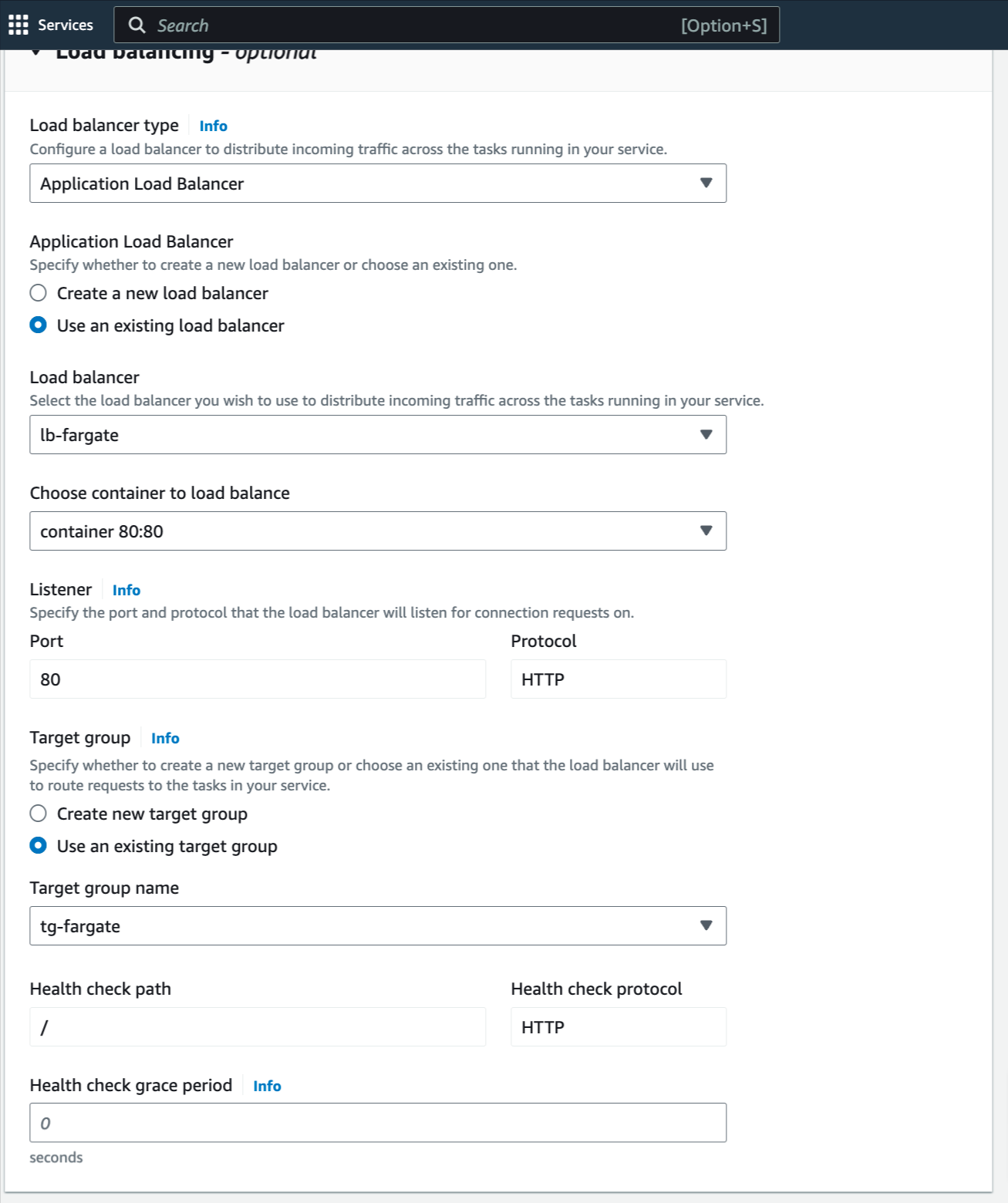

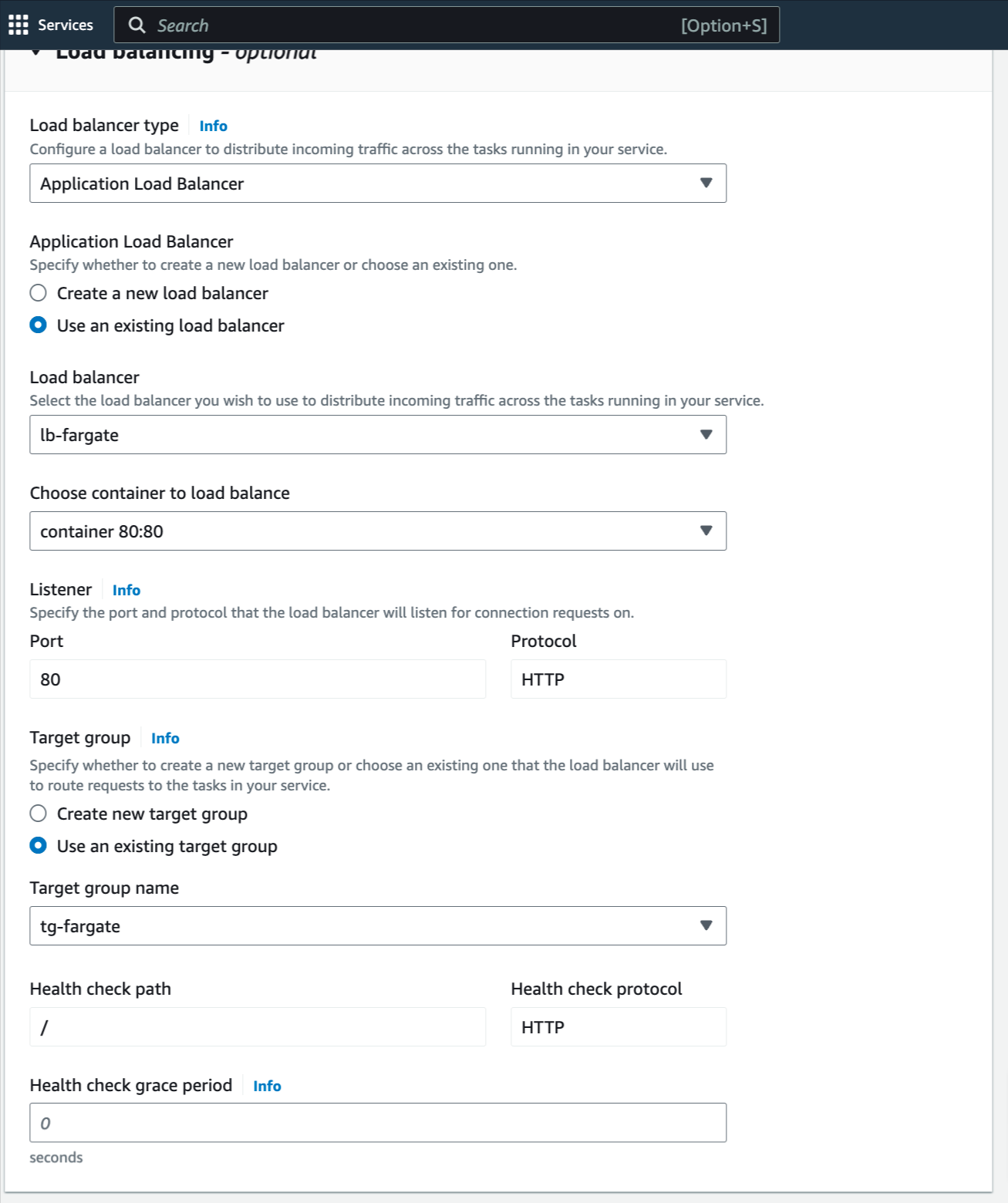

In Load Balancing, please choose the ALB you just created in the previous step, choose the existing target group also:

In Load Balancing, please choose the ALB you just created in the previous step, choose the existing target group also:

Now, the service is ready to create, click to create.

Estimated Time

With DevOps knowledge: 8-10 hours

Without DevOps knowledge: 4-5 days

Now, the service is ready to create, click to create.

Estimated Time

With DevOps knowledge: 8-10 hours

Without DevOps knowledge: 4-5 days

For Choose a target type,Instances to register targets by instance ID, IP addresses to register targets by IP address, or Lambda function to register a Lambda function as a target.

If your service's task definition uses the awsvpc network mode (which is required for the Fargate launch type), you must choose IP addresses as the target type This is because tasks that use the awsvpc network mode are associated with an elastic network interface, not an Amazon EC2 instance.

If the ECS service is EC2 launch type, please choose Instances.

If the ECS service is Fargate launch type, please choose IP addresses.

For Choose a target type,Instances to register targets by instance ID, IP addresses to register targets by IP address, or Lambda function to register a Lambda function as a target.

If your service's task definition uses the awsvpc network mode (which is required for the Fargate launch type), you must choose IP addresses as the target type This is because tasks that use the awsvpc network mode are associated with an elastic network interface, not an Amazon EC2 instance.

If the ECS service is EC2 launch type, please choose Instances.

If the ECS service is Fargate launch type, please choose IP addresses.

You can skip the target selection, we will update later. Then, create target group.

Step 2. Create Load balancer

Navigate to Load Balancers → click to Create load balancer:

You can skip the target selection, we will update later. Then, create target group.

Step 2. Create Load balancer

Navigate to Load Balancers → click to Create load balancer:

In this blog, we use Application Load Balancer, please choose it:

In this blog, we use Application Load Balancer, please choose it:

Configure basic configuration:

Configure basic configuration:

- For Scheme, choose Internet-facing or Internal. An internet-facing load balancer routes requests from clients to targets over the internet. An internal load balancer routes requests to targets using private IP addresses.

- For IP address type, choose the IP adressing for the containers subnets.

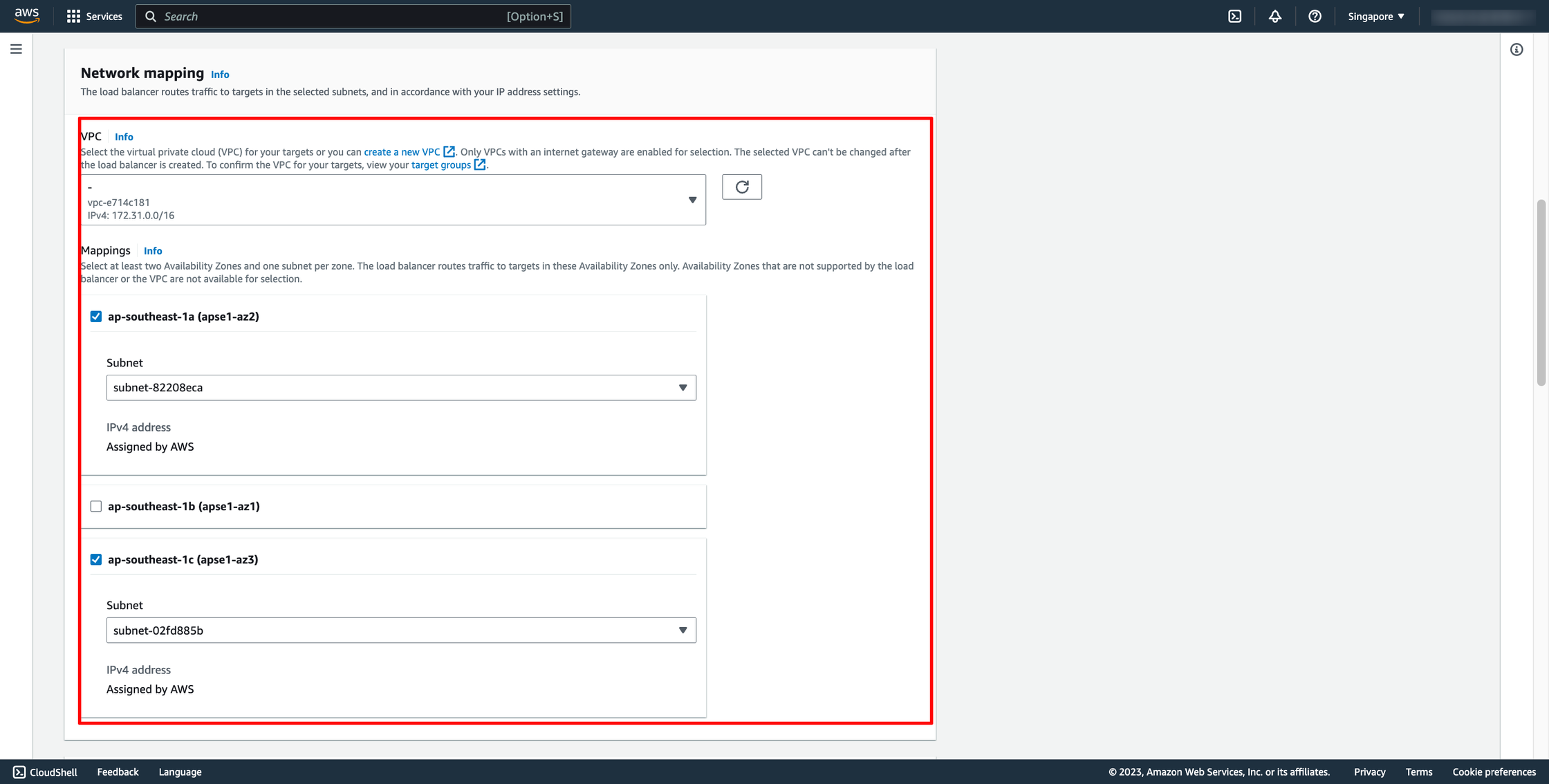

- For VPC, select the same VPC that you used for the container instances on which you intend to run your service.

- For Mappings, select the Availability Zones to use for your load balancer. If there is one subnet for that Availability Zone, it is selected. If there is more than one subnet for that Availability Zone, select one of the subnets. You can select only one subnet per Availability Zone. Your load balancer subnet configuration must include all Availability Zones that your container instances reside in.

Choose Security group and Target group:

Note: Please make sure your security group has enough rules for access from Internet like port 80 and port 443.

Choose Security group and Target group:

Note: Please make sure your security group has enough rules for access from Internet like port 80 and port 443.

Then, create load balancer.

Waiting for the state of Load balancer from “Provisioning” to “Active”.

Step 3. Create ECS service with Load balancer

Assuming you already have an ECS cluster and task definition. Now, you just need to create a new service in your cluster.

Go to your ECS cluster → In the Services tab → Choose “Create”.

In Deployment configuration, choose your task definition, enter the service name and desired tasks. You should enter the number of tasks is greater than one because we have ALB here, we want to load balancing between many tasks.

Then, create load balancer.

Waiting for the state of Load balancer from “Provisioning” to “Active”.

Step 3. Create ECS service with Load balancer

Assuming you already have an ECS cluster and task definition. Now, you just need to create a new service in your cluster.

Go to your ECS cluster → In the Services tab → Choose “Create”.

In Deployment configuration, choose your task definition, enter the service name and desired tasks. You should enter the number of tasks is greater than one because we have ALB here, we want to load balancing between many tasks.

In Networking, you can leave them by default or configure with another VPC:

In Networking, you can leave them by default or configure with another VPC:

In Load Balancing, please choose the ALB you just created in the previous step, choose the existing target group also:

In Load Balancing, please choose the ALB you just created in the previous step, choose the existing target group also:

Now, the service is ready to create, click to create.

Estimated Time

With DevOps knowledge: 8-10 hours

Without DevOps knowledge: 4-5 days

Now, the service is ready to create, click to create.

Estimated Time

With DevOps knowledge: 8-10 hours

Without DevOps knowledge: 4-5 days

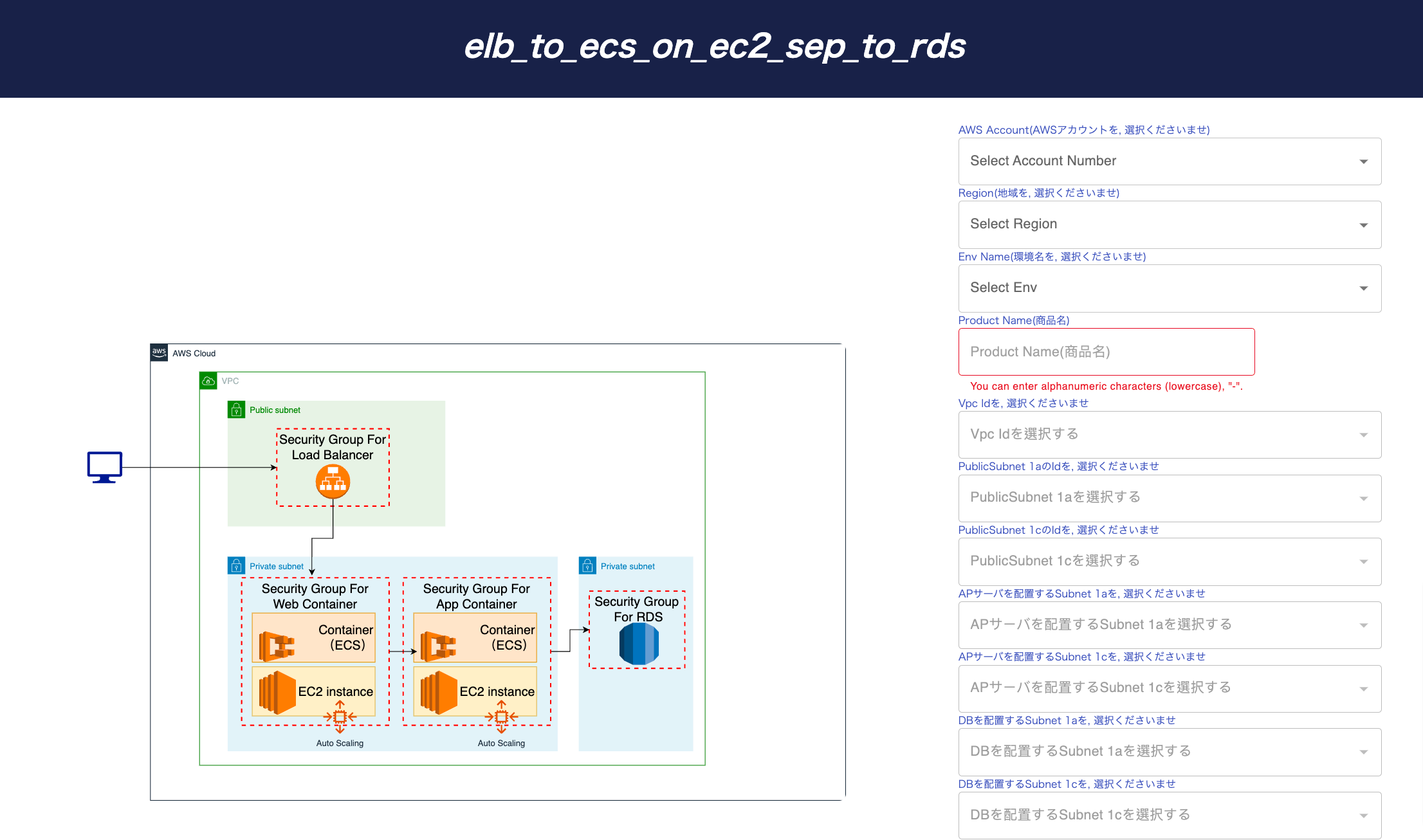

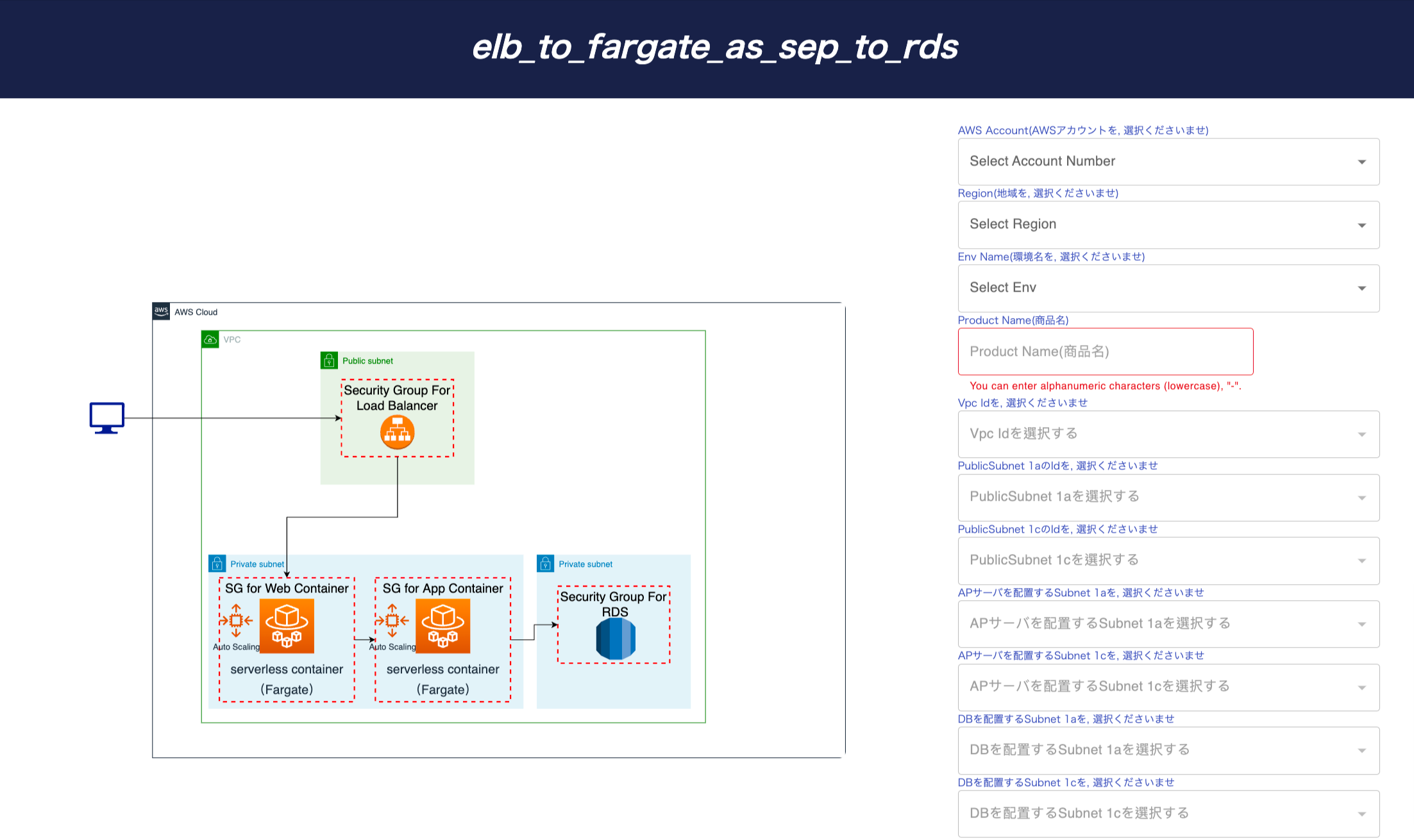

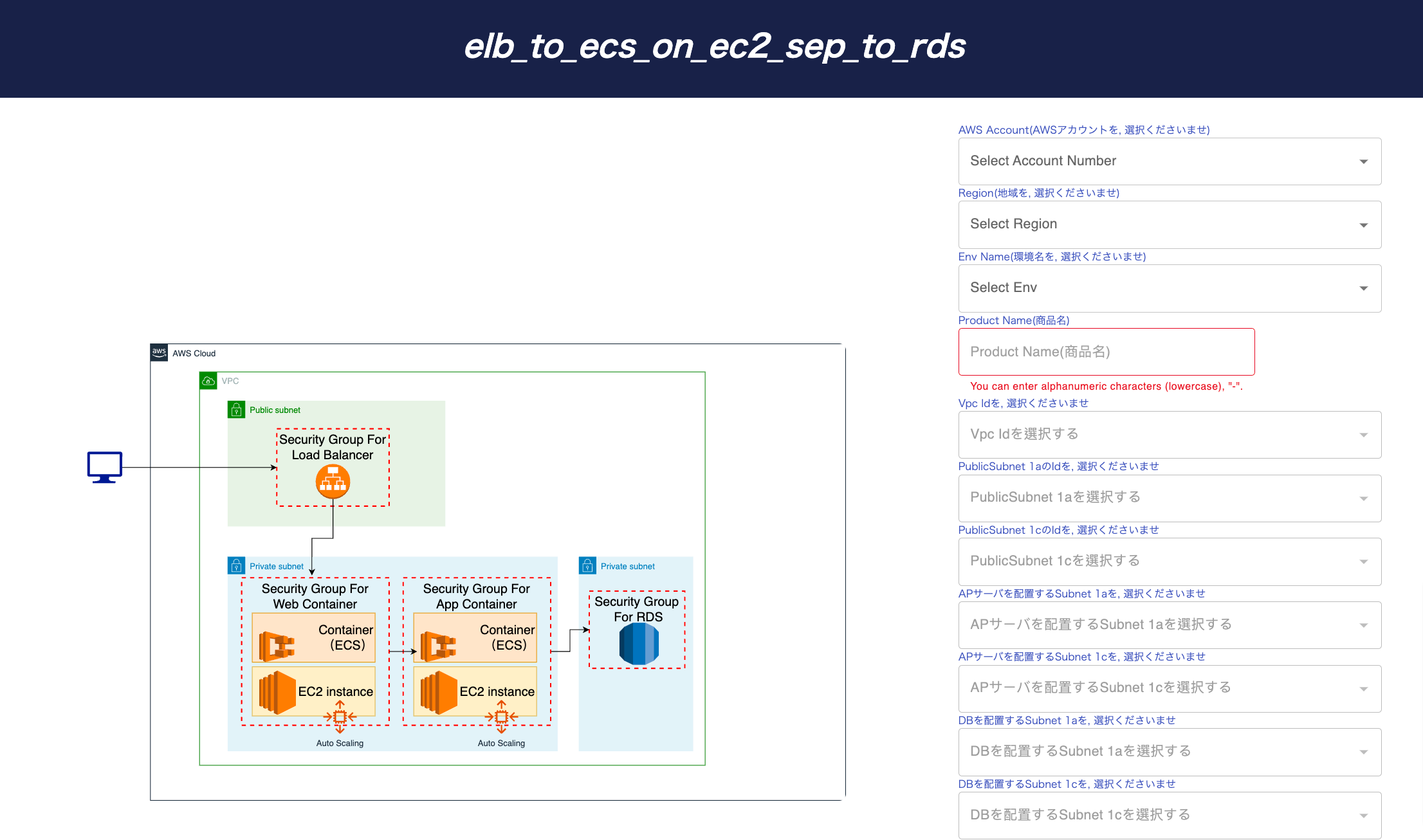

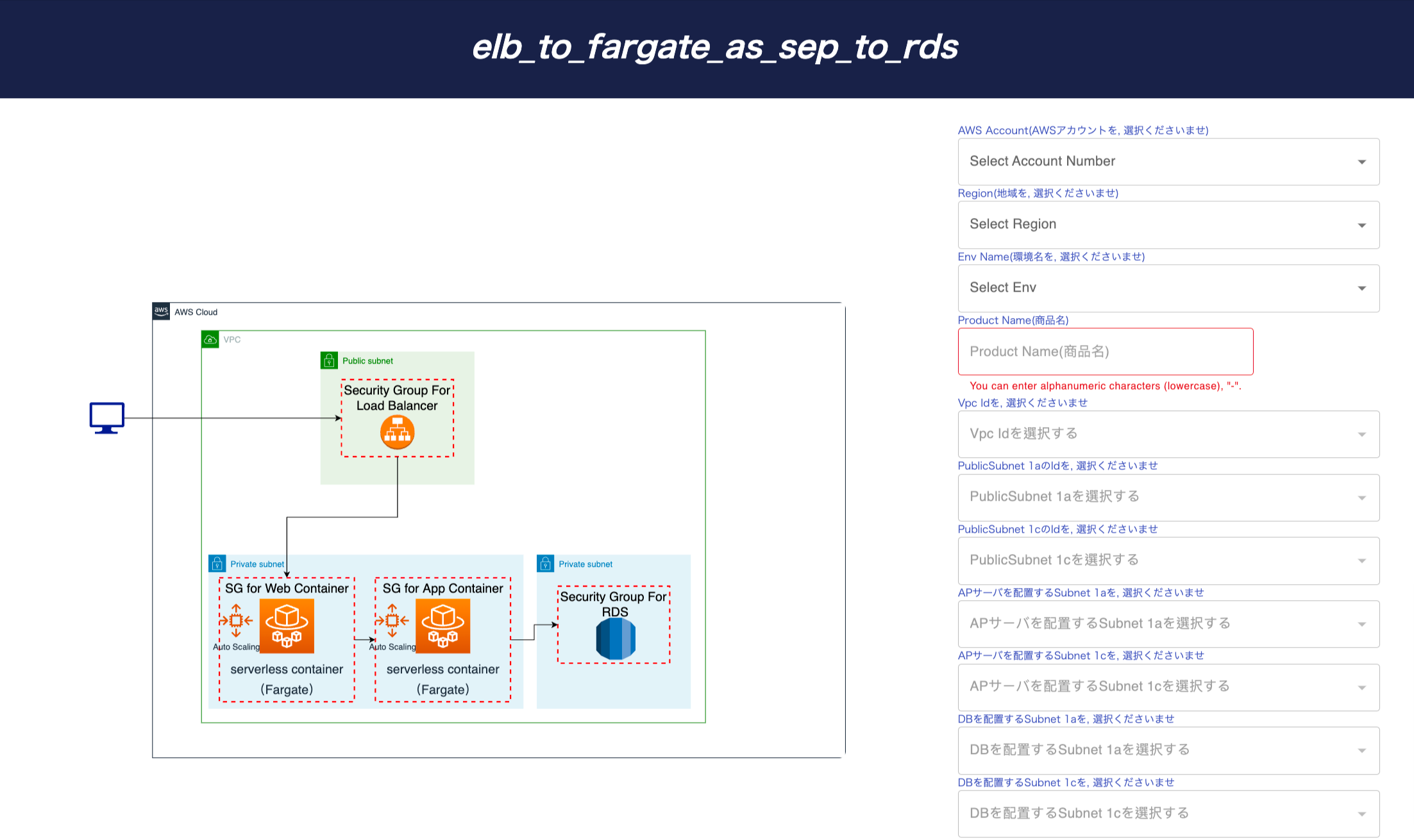
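For readers who prefer scripting, Steps 2 and 3 roughly correspond to three API calls. The sketch below only builds keyword arguments in the shape of boto3's `elbv2.create_load_balancer()`, `elbv2.create_listener()`, and `ecs.create_service()`. Every name, ID, and ARN is a hypothetical placeholder. The sketch assumes a Fargate service in private subnets whose task definition has a container named `web` listening on port 80.

```python
def build_load_balancer(name, subnet_ids, security_group_ids, internal=False):
    """Keyword arguments shaped for elbv2.create_load_balancer(**params)."""
    # ALBs require subnets in at least two Availability Zones; this check only
    # counts subnets and cannot verify which zones they belong to.
    if len(subnet_ids) < 2:
        raise ValueError("an ALB needs subnets in at least two Availability Zones")
    return {
        "Name": name,
        "Type": "application",
        "Scheme": "internal" if internal else "internet-facing",
        "IpAddressType": "ipv4",
        "Subnets": list(subnet_ids),
        "SecurityGroups": list(security_group_ids),
    }


def build_http_listener(load_balancer_arn, target_group_arn):
    """Keyword arguments shaped for elbv2.create_listener(**params)."""
    return {
        "LoadBalancerArn": load_balancer_arn,
        "Protocol": "HTTP",
        "Port": 80,
        "DefaultActions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }


def build_service(cluster, name, task_definition, target_group_arn,
                  subnet_ids, security_group_ids, desired_count=2):
    """Keyword arguments shaped for ecs.create_service(**params)."""
    return {
        "cluster": cluster,
        "serviceName": name,
        "taskDefinition": task_definition,
        "launchType": "FARGATE",
        "desiredCount": desired_count,  # more than one task so the ALB can balance
        "loadBalancers": [{
            "targetGroupArn": target_group_arn,
            "containerName": "web",  # must match the container in the task definition
            "containerPort": 80,
        }],
        "networkConfiguration": {"awsvpcConfiguration": {
            "subnets": list(subnet_ids),
            "securityGroups": list(security_group_ids),
            "assignPublicIp": "DISABLED",  # private subnets need a NAT path to pull images
        }},
        "healthCheckGracePeriodSeconds": 60,
    }
```

With boto3 installed and credentials configured, each dictionary could be passed as `client.create_load_balancer(**params)` and so on, reading the load balancer and target group ARNs from each response before building the next call.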

6. Creating AWS load balancing for ECS with PrismScaler

PrismScaler provides an intuitive model and a concise form: you just fill in the necessary information and press Create, and PrismScaler automatically builds a simple web application/system that runs on containers on AWS.

Detailed Configuration on AWS:


- One public load balancer

- A private Fargate container acting as the web server

- A private Fargate container acting as the AP (application) server

- One private RDS instance

- IAM roles, security groups, and an ECS cluster associated with the above

This configuration suits cases such as:

- You need to separate the web server and the AP server, for example for security reasons.

- Access may increase rapidly, as with a shopping app.

- You need to store data such as user information, and the screens shown and the processing performed change depending on the user.

- You want to run the web server and AP server on serverless containers.

7. What is the difference between creating EC2-type and Fargate-type services?

- Underlying Infrastructure:

  - EC2 Launch Type:

    - In the EC2 launch type, you are responsible for provisioning and managing the underlying Amazon Elastic Compute Cloud (EC2) instances.

    - You have full control over the EC2 instances, including their instance types, operating systems, security groups, and network configurations.

    - This launch type is suitable when you want more control over the infrastructure, need to run multiple containers on the same EC2 instance, or have specific hardware requirements.

  - Fargate Launch Type:

    - With the Fargate launch type, AWS fully manages the underlying infrastructure for you.

    - You don't have direct access to the servers running your containers; AWS abstracts this layer.

    - Fargate is designed for a serverless experience where you focus solely on your containers without worrying about servers. It's ideal for microservices architectures and simplifies container deployment.

- Resource Allocation:

  - EC2 Launch Type:

    - The total CPU and memory available to your containers are bounded by the EC2 instance types you choose.

    - Tasks placed on the same instance share its capacity; the CPU and memory set in your task definitions determine how many tasks fit on each instance.

  - Fargate Launch Type:

    - In Fargate, you specify the CPU and memory for each individual task (a task can contain one or more containers), choosing from a set of supported combinations.

    - This per-task allocation ensures better isolation between tasks and makes it easier to match resources to your application's requirements.

- Scaling:

  - EC2 Launch Type:

    - You can use Amazon EC2 Auto Scaling to scale your EC2 instances based on demand.

    - You configure the scaling policies and rules for the EC2 instances yourself, in addition to scaling the number of tasks.

  - Fargate Launch Type:

    - Fargate abstracts the scaling of the underlying infrastructure.

    - You define scaling policies at the service level, allowing ECS to automatically scale the number of tasks based on demand.

- Pricing:

  - EC2 Launch Type:

    - Pricing includes costs for the EC2 instances, along with any additional AWS resources you use.

    - Costs depend on the chosen instance types, Region, and usage.

  - Fargate Launch Type:

    - Pricing is based on the vCPU and memory resources allocated to your tasks, with no separate charges for EC2 instances.

    - Fargate offers a more predictable and granular pricing model.

- Isolation:

  - EC2 Launch Type:

    - Containers on the same EC2 instance share the underlying hardware resources.

    - You can use ECS task placement strategies and constraints to control which instances your tasks are placed on.

  - Fargate Launch Type:

    - Each Fargate task runs in its own isolation boundary and does not share the kernel, CPU, or memory with other tasks.

    - This isolation strengthens security and reduces resource contention issues.
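Because Fargate allocates resources per task, only certain CPU/memory pairs are accepted. The sketch below hard-codes the Linux combinations as listed in the ECS documentation (CPU units, where 1024 = 1 vCPU, mapped to allowed memory in MiB). Treat the table as an assumption that may have changed, and verify it against current AWS docs before relying on it.

```python
# Assumed copy of the documented Fargate (Linux) task sizes: CPU units -> MiB options.
FARGATE_MEMORY_OPTIONS = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
    8192: list(range(16384, 61440 + 1, 4096)),
    16384: list(range(32768, 122880 + 1, 8192)),
}


def is_valid_fargate_size(cpu_units: int, memory_mib: int) -> bool:
    """True if the CPU/memory pair appears in the table above."""
    return memory_mib in FARGATE_MEMORY_OPTIONS.get(cpu_units, [])


def smallest_fit(min_cpu_units: int, min_memory_mib: int):
    """Smallest CPU size, then smallest memory, meeting both minimums (or None)."""
    for cpu in sorted(FARGATE_MEMORY_OPTIONS):
        if cpu < min_cpu_units:
            continue
        for mem in FARGATE_MEMORY_OPTIONS[cpu]:
            if mem >= min_memory_mib:
                return cpu, mem
    return None


print(smallest_fit(256, 3000))  # (512, 3072)
```

A task that needs 3,000 MiB cannot use 0.25 vCPU, because that size tops out at 2,048 MiB, so the helper steps up to 0.5 vCPU.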

8. How to scale containers in ECS with auto scaling

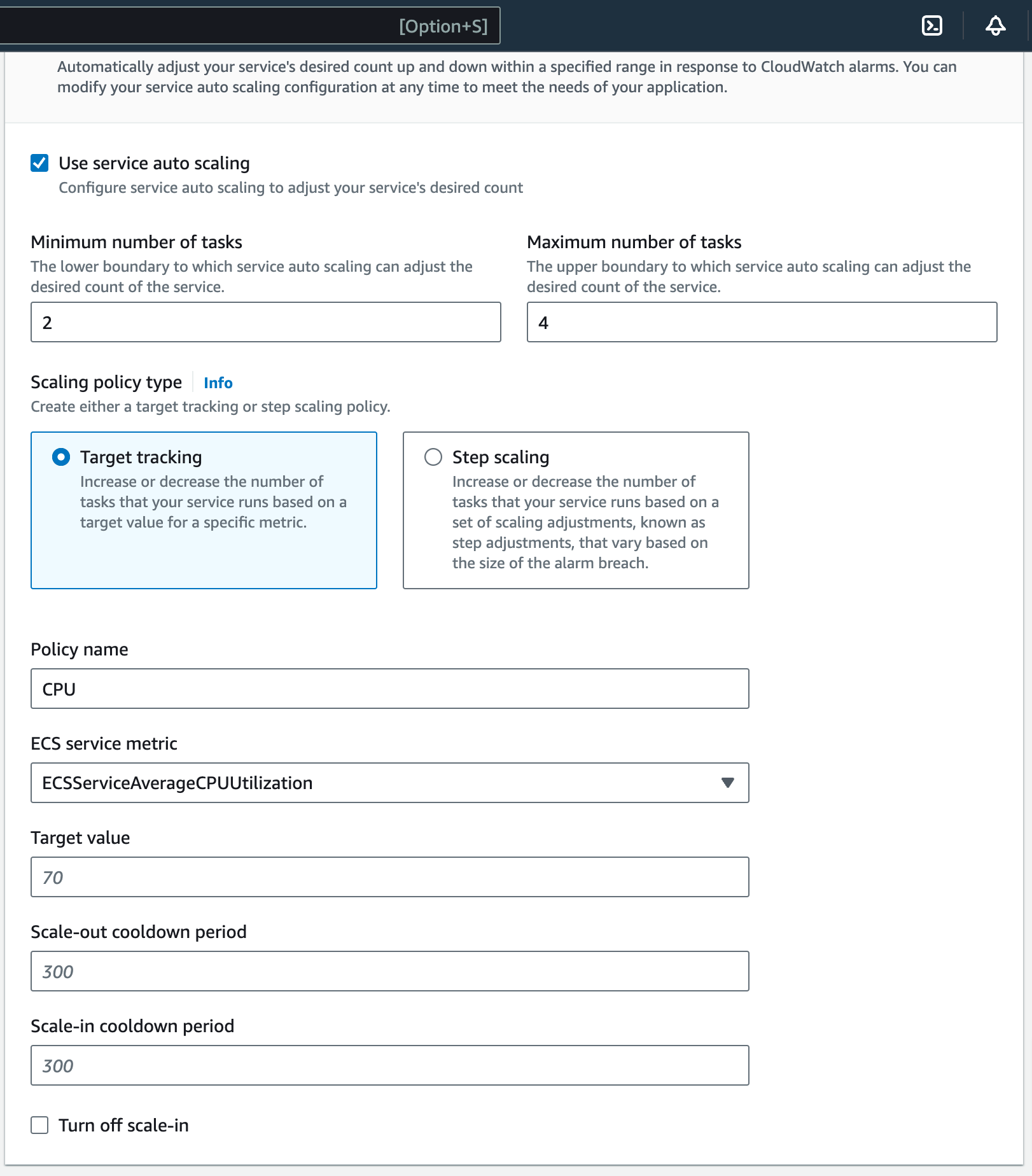

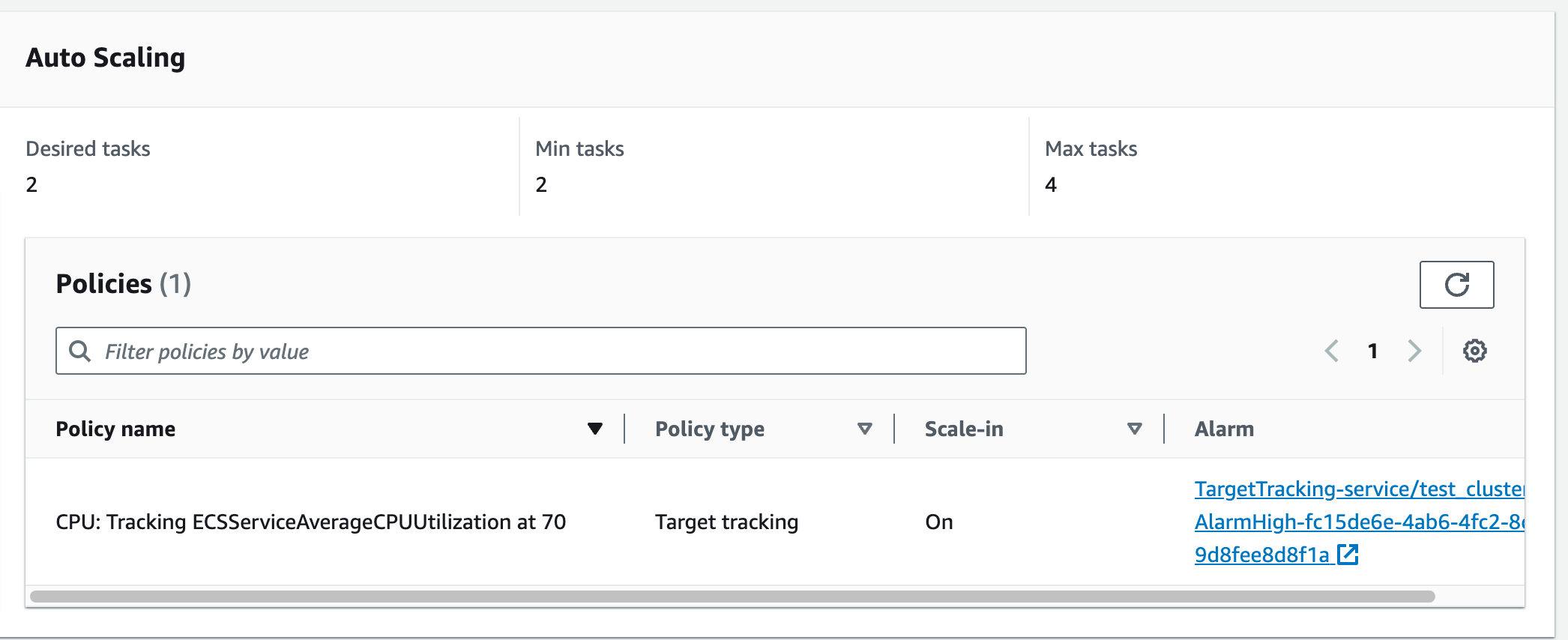

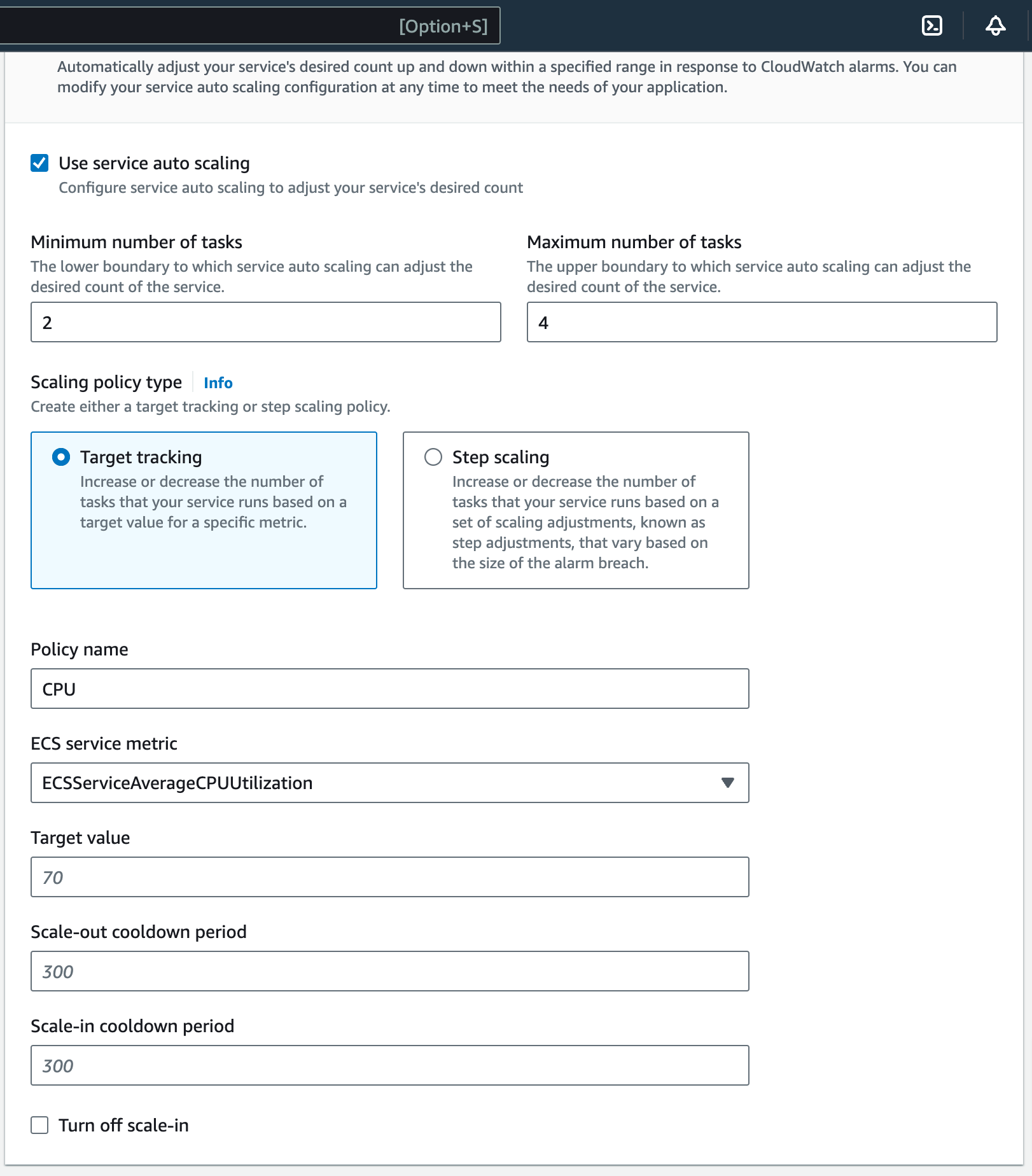

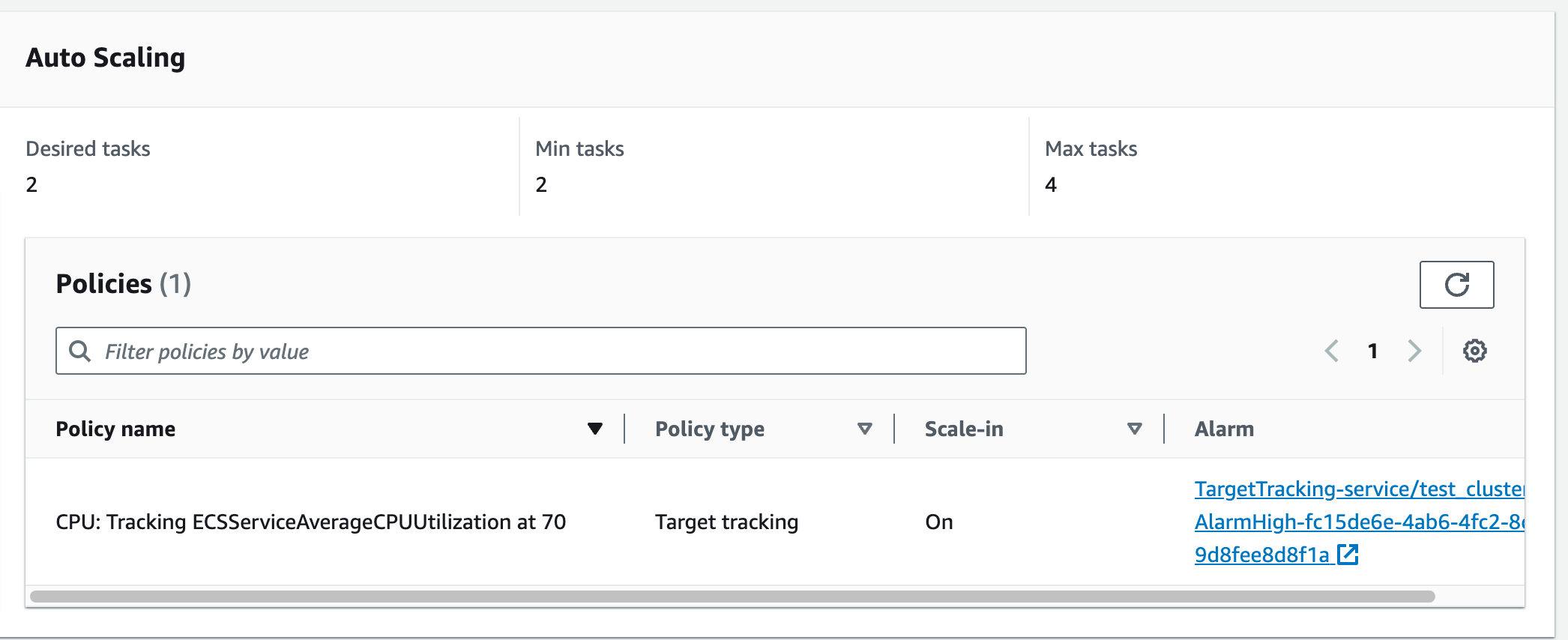

Scaling containers in Amazon Elastic Container Service (ECS) with auto scaling means automatically adjusting the number of running tasks based on scaling policies and rules you define. ECS does this through Service Auto Scaling, which is built on Application Auto Scaling.
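To build intuition for how a metric-based policy changes the task count, the sketch below uses a deliberately simplified proportional model: new count = ceil(current × metric / target), clamped to the configured minimum and maximum. This is an illustrative assumption, not AWS's exact target tracking algorithm. The real algorithm also applies cooldowns and scales in more conservatively than it scales out.

```python
import math


def proportional_desired_count(current_tasks: int, metric_value: float,
                               target_value: float, min_tasks: int, max_tasks: int) -> int:
    """Simplified target-tracking model (not AWS's exact algorithm)."""
    if current_tasks <= 0 or target_value <= 0:
        raise ValueError("current_tasks and target_value must be positive")
    # Scale the task count by how far the metric is from its target.
    desired = math.ceil(current_tasks * metric_value / target_value)
    # Never go outside the service's configured minimum and maximum.
    return max(min_tasks, min(max_tasks, desired))


# 4 tasks averaging 90% CPU against a 60% target -> grow to 6 tasks.
print(proportional_desired_count(4, 90, 60, 1, 10))  # 6
```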

To enable auto scaling for your ECS service, follow these steps:

- ECS Service Auto Scaling:

  - Open the ECS service in the AWS Management Console.

  - Click on the "Auto Scaling" tab.

  - Create a scaling policy based on your desired metric, such as average CPU utilization, average memory utilization, ALB request count per target, or a custom CloudWatch metric.

  - Choose the scaling behavior: a target tracking policy keeps the metric close to a target value, while a step scaling policy adds or removes tasks when a CloudWatch alarm threshold is crossed.

  - Configure the minimum and maximum number of tasks to run in your service.
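The console steps above map onto two Application Auto Scaling calls. This sketch builds keyword arguments in the shape of boto3's `register_scalable_target()` and `put_scaling_policy()` for a CPU-based target tracking policy. The cluster name, service name, target value, and cooldowns are hypothetical choices.

```python
def build_scalable_target(cluster: str, service: str, min_tasks: int, max_tasks: int) -> dict:
    """Keyword arguments for register_scalable_target(**params)."""
    if not 0 <= min_tasks <= max_tasks:
        raise ValueError("need 0 <= min_tasks <= max_tasks")
    return {
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",  # scale the task count
        "MinCapacity": min_tasks,
        "MaxCapacity": max_tasks,
    }


def build_cpu_target_tracking_policy(cluster: str, service: str,
                                     target_cpu_percent: float = 50.0) -> dict:
    """Keyword arguments for put_scaling_policy(**params)."""
    return {
        "PolicyName": f"{service}-cpu-target-tracking",
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_cpu_percent,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
            },
            "ScaleOutCooldown": 60,   # seconds
            "ScaleInCooldown": 120,   # seconds; slower scale-in avoids flapping
        },
    }


print(build_scalable_target("demo", "web", 2, 10)["ResourceId"])  # service/demo/web
```

With boto3, these could be used as `client = boto3.client("application-autoscaling")`, then `client.register_scalable_target(**build_scalable_target("demo", "web", 2, 10))` followed by `client.put_scaling_policy(**build_cpu_target_tracking_policy("demo", "web"))`. The scalable target must be registered before the policy that refers to it.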

9. Reference

The reference section lists the sources or external materials used in the article, allowing readers to explore further or verify the information provided.