1. Introduction

Hello! We are the writing team at Definer Inc.

In this article, we explain how to integrate Azure Pipelines with GCP Cloud Run.

We will walk through the actual screens and resources to explain the process in detail.

2. Purpose/Use Cases

While Azure Pipelines is primarily a CI/CD tool for Microsoft Azure, it can also be used to build CI/CD pipelines for deploying applications to Google Cloud Platform (GCP) services like Cloud Run. Here are some use cases where you might consider using Azure Pipelines to build CI/CD pipelines for deploying applications to Cloud Run:

- Multi-cloud Deployments: If your organization has a hybrid or multi-cloud strategy, where some applications are deployed on GCP and others on Azure, using Azure Pipelines allows you to have a unified CI/CD pipeline across both cloud platforms.

- Azure Pipelines Expertise: If your team is already familiar with Azure Pipelines and has existing CI/CD workflows built on it, leveraging the same tool for deploying applications to Cloud Run can streamline your processes and reduce the learning curve.

- Integration with Azure DevOps: If your organization uses Azure DevOps for project management, issue tracking, and other software development processes, using Azure Pipelines ensures tighter integration and better visibility of CI/CD workflows within your existing DevOps environment.

- Consistent CI/CD Experience: If you prefer the user experience and capabilities of Azure Pipelines, and it meets your CI/CD requirements, using it for Cloud Run deployments allows you to maintain a consistent CI/CD experience across different cloud providers.

- Cross-Platform Compatibility: Azure Pipelines supports a wide range of build agents, including Linux, macOS, and Windows, enabling you to build and deploy applications to Cloud Run from different development environments.

3. Azure Pipelines Introduction

Azure Pipelines is a cloud-based continuous integration and continuous delivery (CI/CD) platform provided by Microsoft as part of Azure DevOps. It enables teams to automate the building, testing, and deployment of applications across various platforms and cloud providers. With Azure Pipelines, you can define flexible and customizable pipelines to deliver software faster and with higher quality.

Key features of Azure Pipelines include:

- Build and Test Automation: Azure Pipelines supports building and testing applications in a wide range of languages and platforms, including .NET, Java, Node.js, Python, and more. It offers a variety of built-in tasks and integrations with popular testing frameworks.

- Continuous Integration: Azure Pipelines enables you to automatically build and test your code whenever changes are pushed to a repository, providing early feedback and reducing integration issues.

- Continuous Delivery: You can define release pipelines to automate the deployment of applications to various environments, including Azure, on-premises servers, or other cloud providers. This allows for seamless and repeatable application deployments.

- Flexible Pipeline Configurations: Azure Pipelines provides a declarative YAML-based syntax to define pipelines, allowing you to version control and manage your pipeline configurations alongside your application code.

- Extensive Integration Ecosystem: Azure Pipelines integrates with a wide range of development tools, including Azure DevOps, GitHub, Bitbucket, Jira, and more. It offers a marketplace with hundreds of extensions to extend its functionality and integrate with various services and technologies.

- Multi-Cloud and Hybrid Support: While Azure Pipelines is primarily designed for Microsoft Azure, it also supports building and deploying applications to other cloud platforms, such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and on-premises environments.

- Security and Compliance: Azure Pipelines includes built-in security measures, including secure code handling, secret management, and compliance with industry standards like ISO 27001, SOC 1, and SOC 2.

4. Step 1: Setup Connection

Let's get started with the implementation.

First, as preparation, we will set up Container Registry and Azure Pipelines.

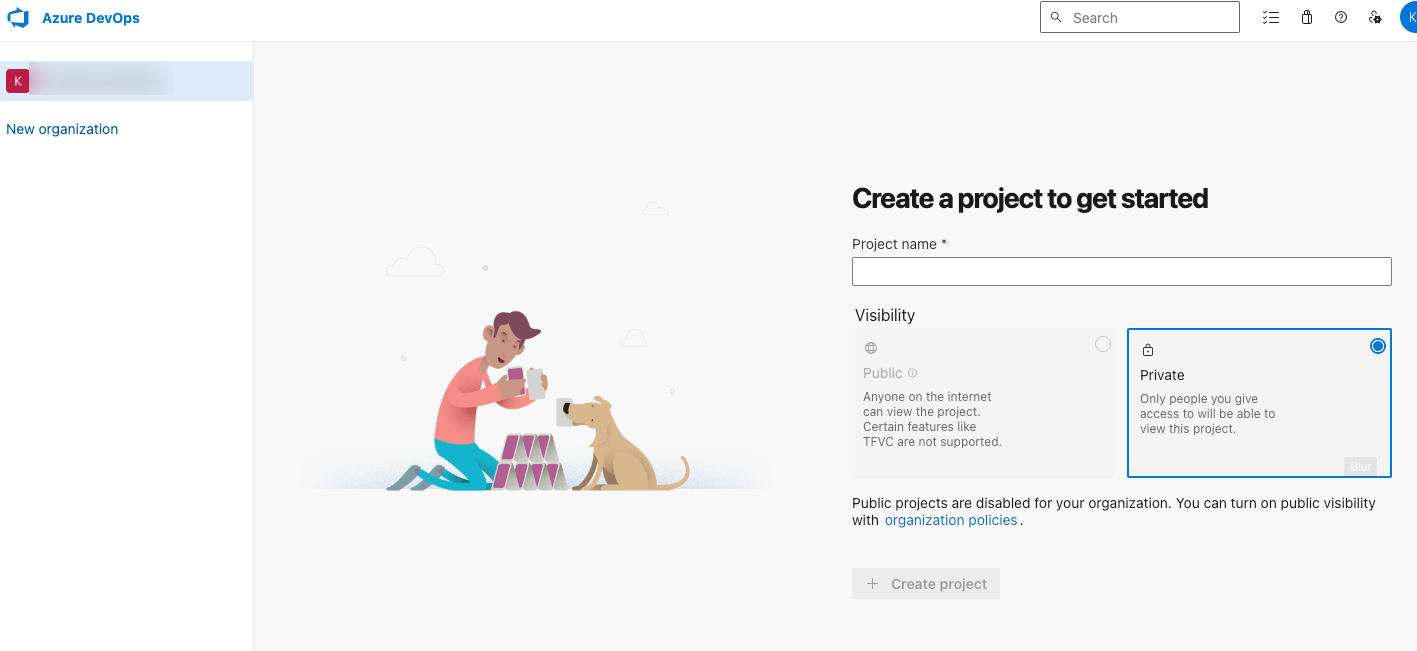

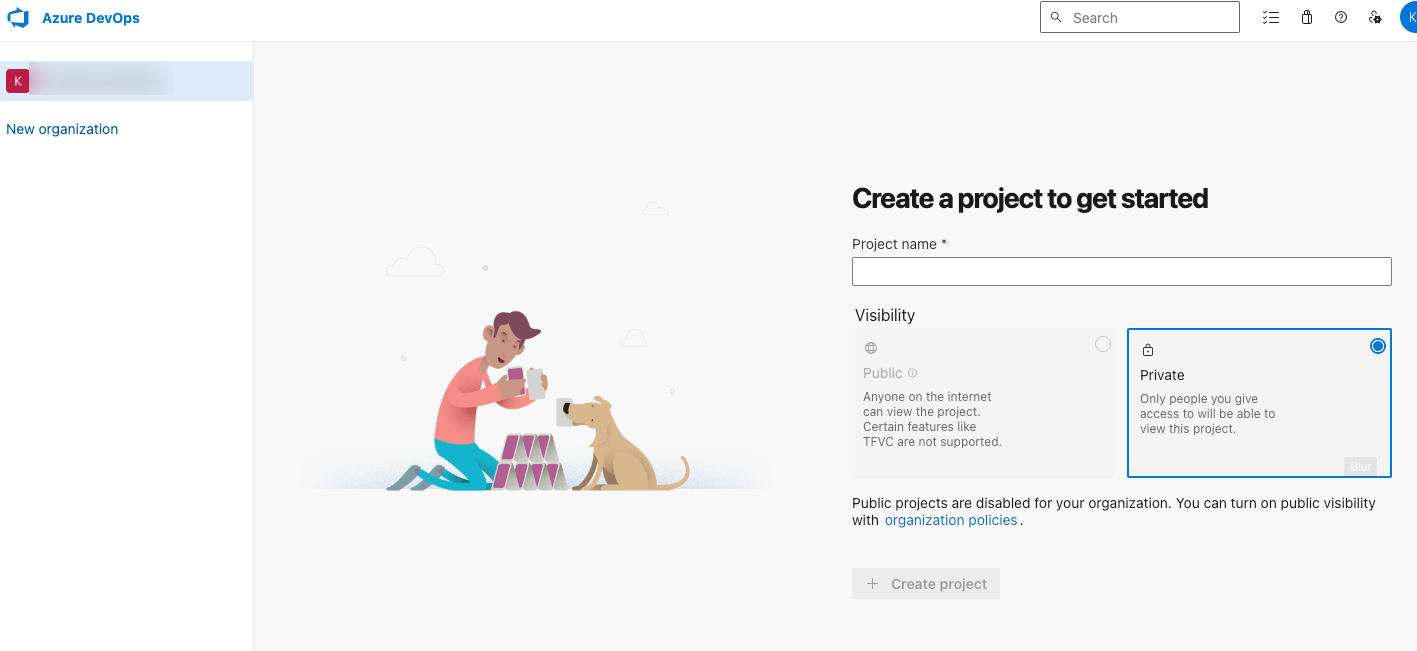

① Azure DevOps project setup

Access the Azure DevOps console and create a new private project.

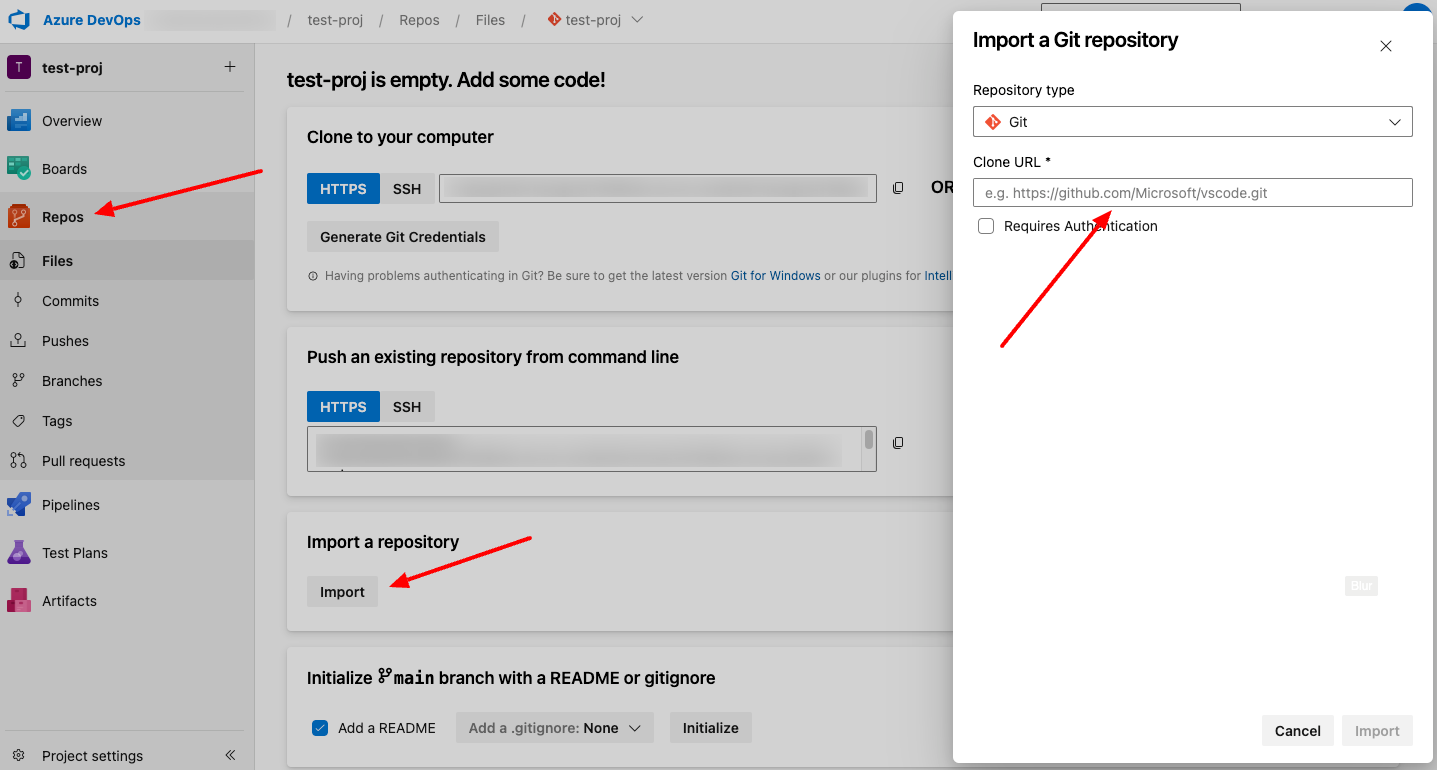

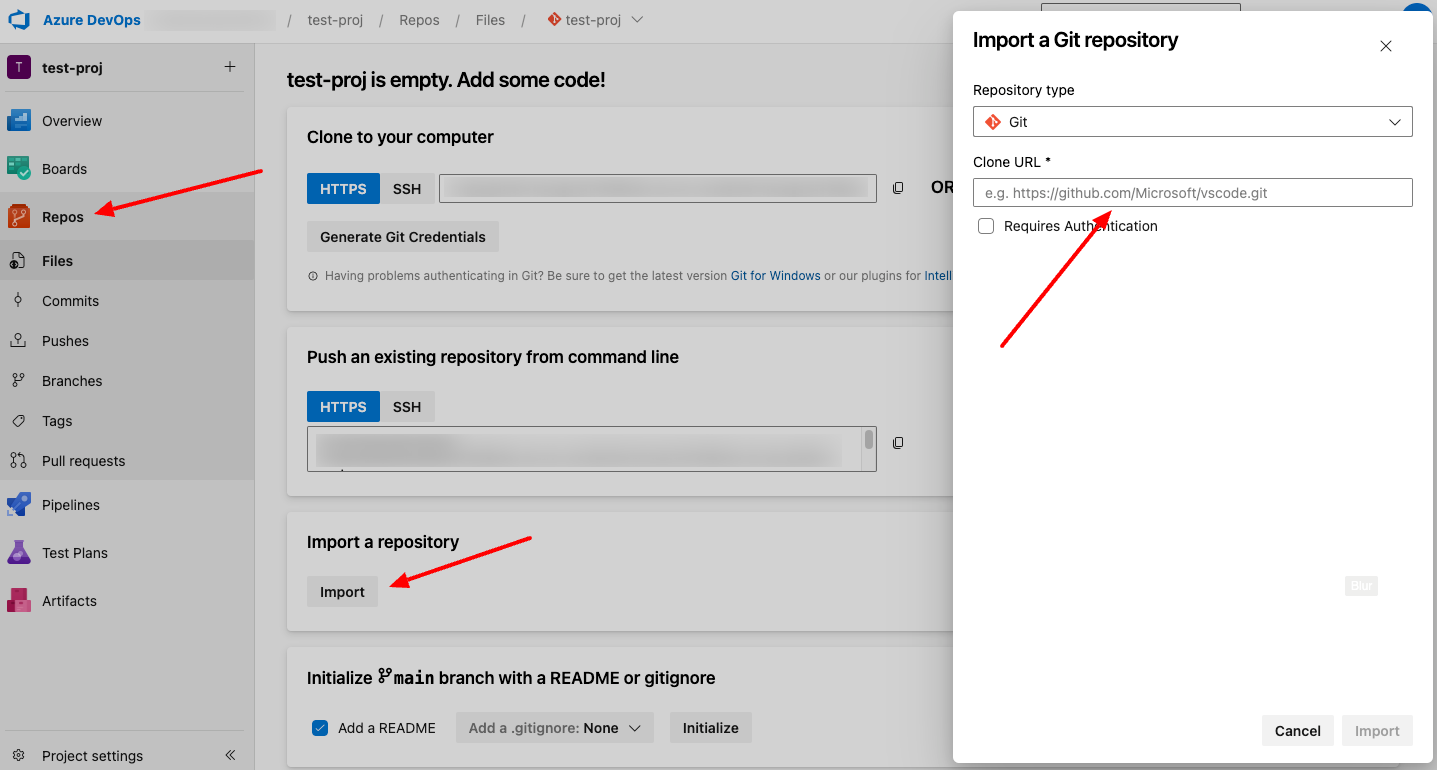

Select "Repo" in the right tab and clone the following repository from GitHub.

② Create a service account for Azure Pipelines in GCP

Log in to your GCP account and create a service account with the following commands.

Keep the generated key file, as it will be used later.

    # Assumes PROJECT_ID is set to your GCP project ID.

    # Create a GCP service account
    gcloud iam service-accounts create azure-pipelines-publisher \
      --display-name="for_azure_pipelines" \
      --project=${PROJECT_ID}

    # Grant storage permissions to the service account
    # (the email must use the same account name that was created above)
    gcloud projects add-iam-policy-binding ${PROJECT_ID} \
      --member serviceAccount:azure-pipelines-publisher@${PROJECT_ID}.iam.gserviceaccount.com \
      --role roles/storage.admin \
      --project=${PROJECT_ID}

    # Grant Cloud Run deployment permissions to the service account
    gcloud projects add-iam-policy-binding ${PROJECT_ID} \
      --member serviceAccount:azure-pipelines-publisher@${PROJECT_ID}.iam.gserviceaccount.com \
      --role roles/run.admin \
      --project=${PROJECT_ID}

    # Issue a key file for the service account
    gcloud iam service-accounts keys create azure-pipelines.json \
      --iam-account azure-pipelines-publisher@${PROJECT_ID}.iam.gserviceaccount.com \
      --project=${PROJECT_ID}
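The account name given when the service account is created has to match the address used for the role bindings and the key, because the email is derived from the account name and the project ID. A minimal sketch of that derivation follows; the helper name `sa_email` and the sample project ID are hypothetical and used only for illustration.

```shell
#!/bin/sh
# Hypothetical helper: derive a service account email from its name and project ID.
# Google Cloud service account emails follow NAME@PROJECT_ID.iam.gserviceaccount.com.
sa_email() {
  printf '%s@%s.iam.gserviceaccount.com\n' "$1" "$2"
}

# Example values (assumptions, replace with your own).
SA_NAME="azure-pipelines-publisher"
PROJECT_ID="my-sample-project"

# Compute the email once and reuse it for every --member / --iam-account flag.
SA_EMAIL="$(sa_email "$SA_NAME" "$PROJECT_ID")"
echo "member: serviceAccount:${SA_EMAIL}"
```

Computing the address once and reusing it avoids the account name drifting between commands.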

③ Connect Azure Pipelines to Container Registry

Now, we will connect Azure Pipelines to the GCP Container Registry.

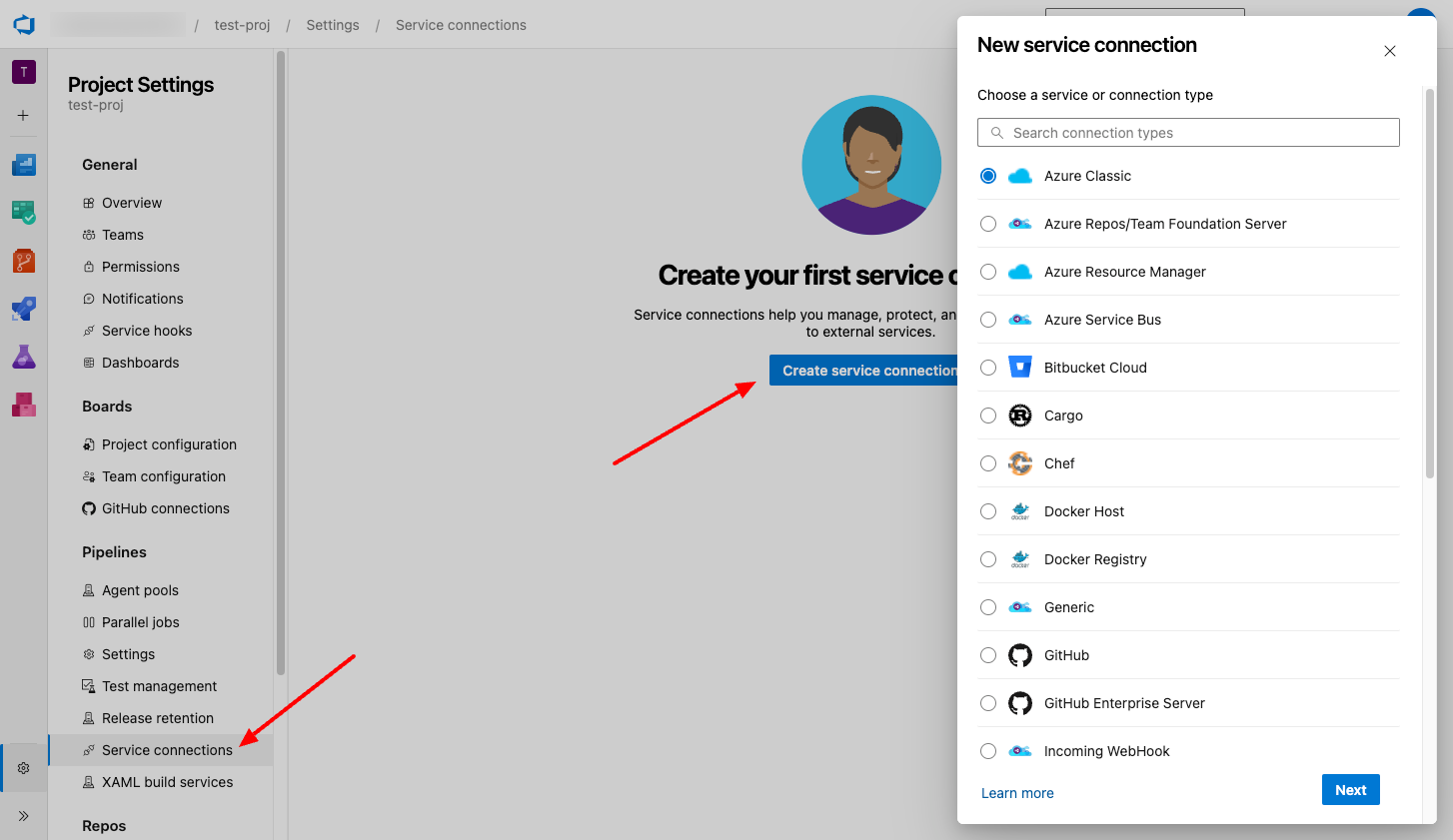

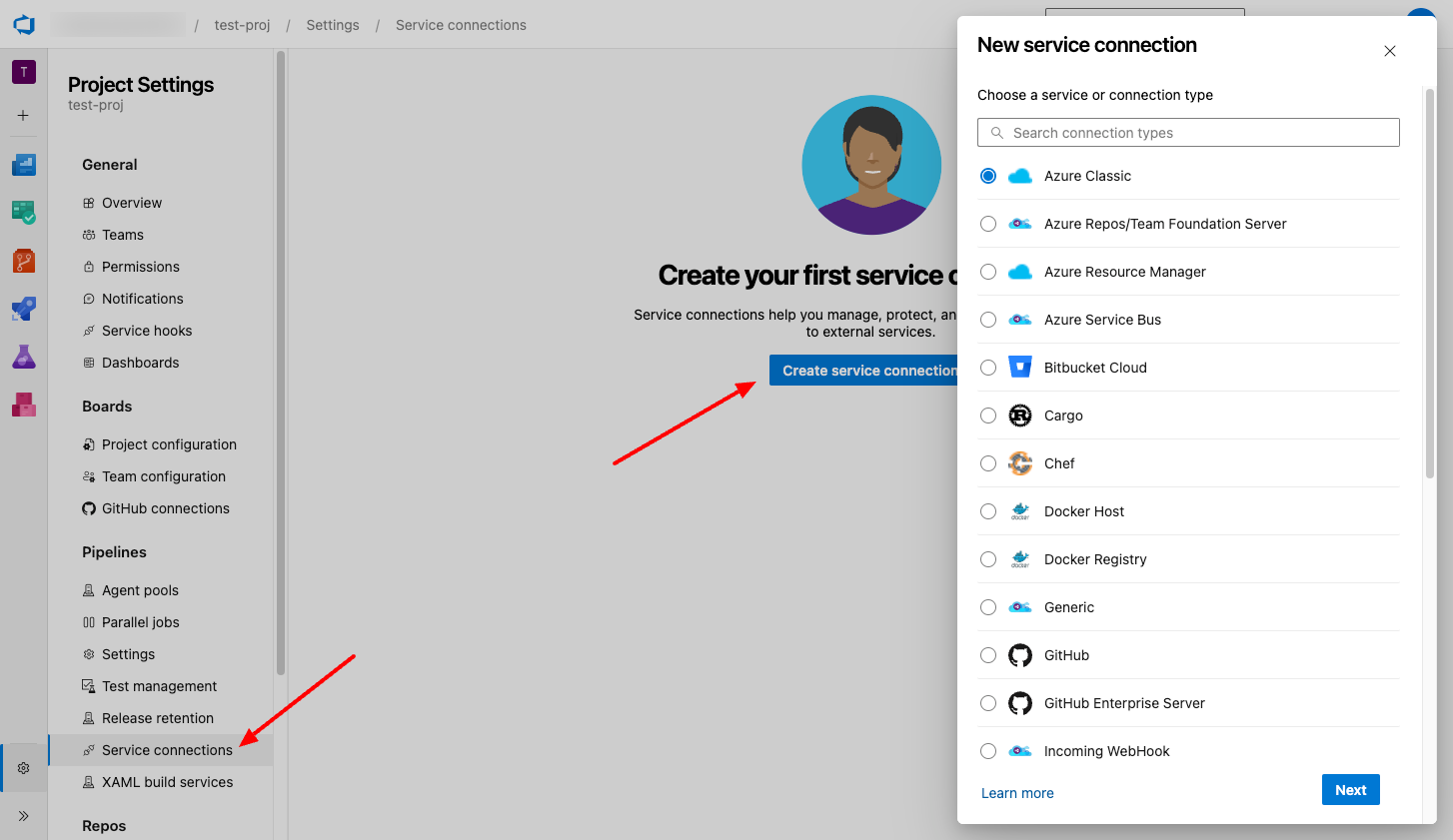

In Azure DevOps, select "Project Settings" > "Pipelines" > "Service Connections"

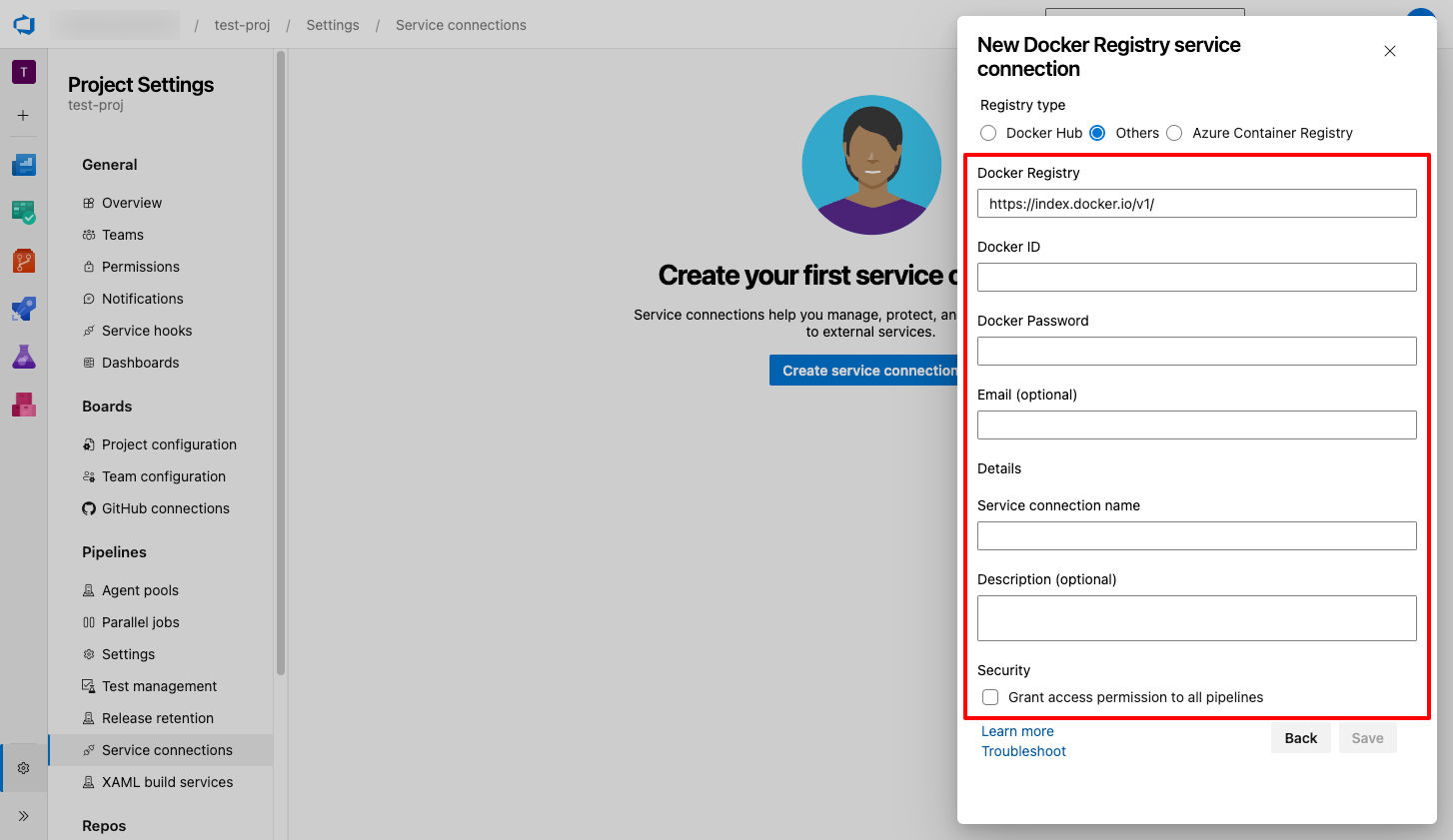

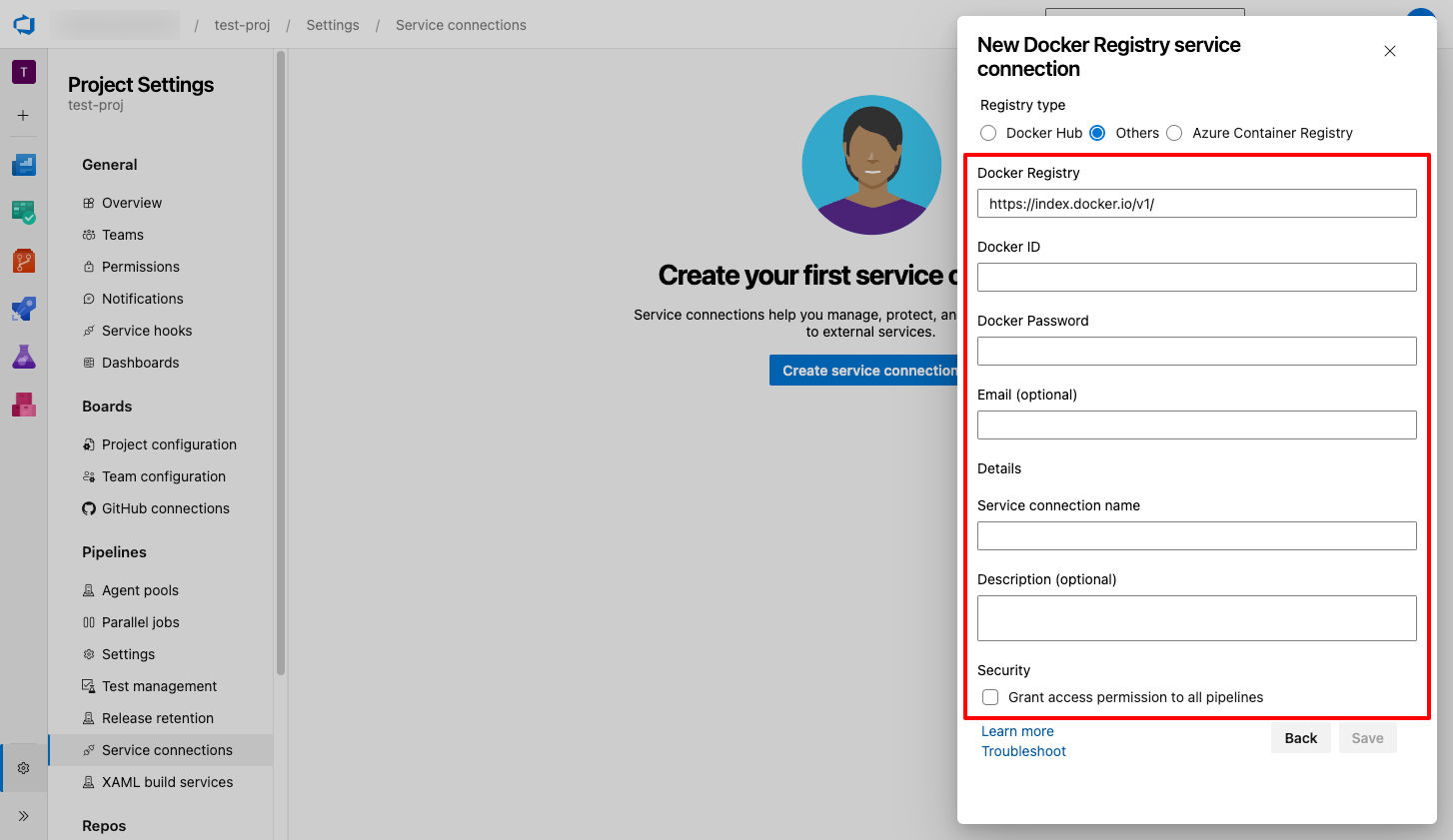

Click "Create Service Connections" and select "Docker Registry". Enter the GCP registry URL in the "Docker Registry" field and paste the service account key you created earlier into the "Password" field.

Registry type: Others

Docker Registry: https://gcr.io/PROJECT_ID, replacing PROJECT_ID with the ID of your project (for example, https://gcr.io/azure-pipelines-test-project-12345).

Docker ID: _json_key

Password: Paste the content of the service account key file.

Service connection name: gcr-tutorial (the name referenced by "containerRegistry" in the pipeline YAML in Step 2).
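For reference, the service connection corresponds roughly to a `docker login` against Container Registry using a JSON key. The sketch below assumes the key file from the earlier step is named `azure-pipelines.json`; the helper names `registry_url` and `gcr_login` are hypothetical. `gcr_login` is only defined here, not executed, since it needs Docker and a real key.

```shell
#!/bin/sh
# Hypothetical helper: build the Docker Registry URL for a GCP project.
registry_url() {
  printf 'https://gcr.io/%s\n' "$1"
}

# Defined but not called here: log in to Container Registry with a
# service account key file. "_json_key" is the fixed user name that
# Container Registry expects for JSON key authentication.
gcr_login() {
  key_file="$1"
  docker login -u _json_key --password-stdin https://gcr.io < "$key_file"
}

# Example project ID taken from the text above.
registry_url "azure-pipelines-test-project-12345"
```

Running `gcr_login azure-pipelines.json` locally can be a quick way to check that the key works before entering it in Azure DevOps.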

5. Step 2: Deployment Implementation

Next, we will implement the deployment part.

① Applying the configuration file

In the root of the repository, create a file named azure-pipelines.yml, then copy and paste the following YAML into it.

Also set the Google Cloud service account key as a pipeline variable; the deploy script later in this step reads it as "ServiceAccountKey".

    resources:
    - repo: self
      fetchDepth: 1
    queue:
      name: Hosted Ubuntu 1604
    trigger:
    - master
    variables:
      TargetFramework: 'netcoreapp3.1'
      BuildConfiguration: 'Release'
      # Replace ${PROD_PROJECT_ID} with your GCP project ID.
      # Docker repository names must be lowercase.
      DockerImageName: '${PROD_PROJECT_ID}/clouddemo'
    steps:
    # Publish the .NET Core application
    - task: DotNetCoreCLI@2
      displayName: Publish
      inputs:
        projects: 'applications/clouddemo/netcore/CloudDemo.MvcCore.sln'
        publishWebProjects: false
        command: publish
        arguments: '--configuration $(BuildConfiguration) --framework=$(TargetFramework)'
        zipAfterPublish: false
        modifyOutputPath: false
    # Publish the staging directory as a build artifact
    - task: PublishBuildArtifacts@1
      displayName: 'Publish Artifact'
      inputs:
        PathtoPublish: '$(build.artifactstagingdirectory)'
    # Log in using the "gcr-tutorial" service connection
    - task: Docker@2
      displayName: 'Login to Container Registry'
      inputs:
        command: login
        containerRegistry: 'gcr-tutorial'
    # Build the image and push it to Container Registry
    - task: Docker@2
      displayName: 'Build and push image'
      inputs:
        Dockerfile: 'applications/clouddemo/netcore/Dockerfile'
        command: buildAndPush
        repository: '$(DockerImageName)'

Explanation of the Azure Pipelines YAML file:

- resources: Specifies the repository to be used for the pipeline, with a fetch depth of 1, meaning only the most recent commit is fetched (a shallow clone).

- queue: Specifies the agent pool for the pipeline, in this case "Hosted Ubuntu 1604", an Ubuntu-based build agent hosted by Azure Pipelines. Note that "queue" is legacy syntax; current pipelines typically use "pool" with a "vmImage" such as "ubuntu-latest".

- trigger: Runs the pipeline whenever changes are pushed to the "master" branch.

- variables: Defines variables to be used in the pipeline: the target framework, the build configuration, and the Docker image name.

- steps: Represents the series of tasks that make up the pipeline. Each task is executed sequentially:

  + DotNetCoreCLI: This task publishes the .NET Core application. It specifies the solution file, build configuration, and target framework. The published output is neither zipped nor moved to a modified output path.

  + PublishBuildArtifacts: This task publishes the contents of the "$(build.artifactstagingdirectory)" directory as a build artifact.

  + Docker (login): This task logs in to the container registry through the service connection named "gcr-tutorial".

  + Docker (buildAndPush): This task builds and pushes a Docker image. It uses the specified Dockerfile and the image repository name defined in the "DockerImageName" variable.
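To make the naming concrete, the following sketch assembles the kind of image reference that results from combining the registry host with the "DockerImageName" value. The helper `image_ref`, the sample project ID, and the sample tag are hypothetical. Docker repository names must be lowercase, so the helper lowercases the repository part.

```shell
#!/bin/sh
# Hypothetical helper: compose gcr.io/PROJECT/NAME:TAG with a lowercase
# repository, because Docker repository names may not contain uppercase letters.
image_ref() {
  project="$1"
  name="$2"
  tag="$3"
  repo="$(printf '%s/%s' "$project" "$name" | tr '[:upper:]' '[:lower:]')"
  printf 'gcr.io/%s:%s\n' "$repo" "$tag"
}

# Example values (assumptions for illustration only).
image_ref "my-sample-project" "CloudDemo" "42"
# prints: gcr.io/my-sample-project/clouddemo:42
```

The same reference is what the deploy step later needs as its image path.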

② Create a deployment definition for Azure Pipelines

In Azure Pipelines, click "Releases" and create a release pipeline.

Once the deploy script is configured, the pipeline will be executed.

When the pipeline runs, the new code is deployed to Cloud Run.
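The deploy script in this step decodes a variable named `ServiceAccountKey` with `base64 -d`, so the key file needs to be stored base64-encoded on a single line. A minimal sketch of preparing and checking that value follows; it uses a dummy JSON file in a temporary directory in place of the real `azure-pipelines.json`, and the helper name `encode_key` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: base64-encode a key file as a single line,
# suitable for pasting into a pipeline variable.
encode_key() {
  base64 < "$1" | tr -d '\n'
}

# Use a dummy key file so the sketch runs without real credentials.
WORK_DIR="$(mktemp -d)"
KEY_FILE="${WORK_DIR}/dummy-key.json"
printf '{"type": "service_account", "project_id": "my-sample-project"}\n' > "$KEY_FILE"

ENCODED="$(encode_key "$KEY_FILE")"

# Round trip: decoding must give back the original file content.
DECODED="$(printf '%s' "$ENCODED" | base64 -d)"
ORIGINAL="$(cat "$KEY_FILE")"
[ "$DECODED" = "$ORIGINAL" ] && echo "round trip ok"

# Clean up the temporary directory.
rm -rf "$WORK_DIR"
```

With the real key, `encode_key azure-pipelines.json` would produce the value to store in the variable.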

Deploy script:

    # Authenticate with the service account key
    # (ServiceAccountKey is a pipeline variable holding the base64-encoded key file)
    gcloud auth activate-service-account \
      --quiet \
      --key-file <(echo $(ServiceAccountKey) | base64 -d) &&

    # Deploy to Cloud Run (replace the ${...} placeholders with your own values)
    gcloud run deploy ${CLOUD_RUN_NAME} \
      --quiet \
      --service-account=${SERVICE_ACCOUNT_NAME} \
      --allow-unauthenticated \
      --image=${IMAGE_PATH} \
      --platform=managed \
      --region=${REGION} \
      --project=${PROJECT_ID}

6. Cited/Referenced Articles

7. About the proprietary solution "PrismScaler"

・PrismScaler is a web service that enables the construction of multi-cloud infrastructures such as AWS, Azure, and GCP in just three steps, without requiring development and operation.

・The solution is designed for a wide range of usage scenarios such as cloud infrastructure construction/cloud migration, cloud maintenance and operation, and cost optimization, and can easily realize more than several hundred high-quality general-purpose cloud infrastructures by appropriately combining IaaS and PaaS.

8. Contact us

This article provides useful introductory information free of charge. For consultation and inquiries, please contact "Definer Inc".

9. Regarding Definer

・Definer Inc. provides one-stop solutions from upstream to downstream of IT.

・We are committed to providing integrated support for advanced IT technologies such as AI and cloud IT infrastructure, from consulting to requirement definition, design development, implementation, maintenance, and operation.

・PrismScaler provides high-quality, rapid "auto-configuration," "auto-monitoring," "problem detection," and "configuration visualization" for multi-cloud/IT infrastructure such as AWS, Azure, and GCP.