1. Introduction

Hello! We are the writing team at Definer Inc.

Implementing automatic deployment (Continuous Deployment, CD) to Amazon Elastic Container Service (ECS) with AWS CodePipeline automates the process of deploying containerized applications to ECS. CodePipeline is a fully managed continuous delivery service provided by Amazon Web Services (AWS). By combining CodePipeline with ECS, you can set up an automated deployment pipeline that picks up changes from a source repository, can build container images, and deploys them to ECS clusters, making application updates fast and reliable.

In this article, we explain how to implement automatic deployment (CD) to ECS using AWS CodePipeline.

We walk through the actual console screens and resources in detail.

2. Purpose/Use Cases

The purpose of implementing automatic deployment on ECS with CodePipeline is to streamline and automate the release and deployment of containerized applications. Here are some key purposes and benefits of using this approach:

(1) Continuous Delivery

(2) Version Control and Source Management

(3) Build and Test Automation

(4) Deployment Validation

(5) Infrastructure-as-Code

(6) Rollback and Recovery

(7) Scalability and Flexibility

3. What is CI/CD?

First, let's review CI/CD.

CI/CD stands for Continuous Integration / Continuous Delivery. "CD" is also used for Continuous Deployment, as in this article.

CI/CD is not a specific technology but a software development practice that automates building, testing, and deployment.

By detecting bugs early and automating manual tasks, CI/CD improves development efficiency.

What is CI (Continuous Integration)?

Here, integration refers to building (creating an executable artifact from source code) and testing.

Automated builds and tests continuously check the behavior and quality of the program, so problems can be found and fixed quickly.

What is CD (Continuous Delivery)?

Here, delivery means releasing the application, that is, making it available to users.

Automating the release shortens the time between a code change and its availability to users.

What we will implement here is a Continuous Delivery (CD) pipeline for ECS.

Why implement automatic CD with AWS CodePipeline?

Implementing automatic Continuous Deployment (CD) with AWS CodePipeline offers several significant benefits for software development and deployment workflows.

Automatic CD streamlines the deployment process, reducing manual effort and enabling faster, more efficient releases. It makes releases consistent and repeatable and reduces the risk of human error. If a new deployment causes problems, you can roll back to a previously working version.

The integration of testing tools in CodePipeline allows for continuous feedback and early issue detection. It can handle deployments at scale, making it suitable for large-scale applications and microservices architectures. Security checks and compliance validation can be integrated at each stage, ensuring application security and adherence to regulatory requirements.

CodePipeline keeps track of code versions and changes, providing traceability for auditing and troubleshooting. Automatic CD enhances developer productivity, allowing them to focus on building new features. It is flexible and extensible, allowing integration with other AWS services and third-party tools.

Overall, automatic Continuous Deployment with CodePipeline empowers development teams to embrace Agile and DevOps principles, delivering frequent and incremental updates to end-users with confidence.

4. Preparation for automated deployment with CodePipeline

The first step in building an automated deployment mechanism with CodePipeline is to prepare an S3 bucket, which will hold the input file that CodePipeline reads when deploying to ECS.

The ECS and ECR resources created in "Creating Microservices with Amazon ECS" are reused here.

(1) Creating an S3 bucket: To create an S3 bucket using the AWS CLI, follow these steps:

- Open a terminal with the AWS CLI installed and configured, such as AWS CloudShell.

- Run the following command to create an S3 bucket:

aws s3api create-bucket --bucket <bucket-name> --region <region> --create-bucket-configuration LocationConstraint=<region>

Replace <bucket-name> with the desired name for your S3 bucket (bucket names must be globally unique), and <region> with the region where you want to create the bucket. For the Tokyo region, use ap-northeast-1 for both --region and LocationConstraint. In us-east-1, omit the --create-bucket-configuration option.
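Because S3 rejects an explicit LocationConstraint for us-east-1 but requires one in other regions, a script that creates the bucket has to branch on the region. The following is a minimal sketch, assuming a bash shell with the AWS CLI configured; the function name create_artifact_bucket is hypothetical, not part of the original walkthrough.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an S3 bucket in any region.
# S3 rejects LocationConstraint=us-east-1, so the configuration block
# is only passed for other regions (e.g. ap-northeast-1).
create_artifact_bucket() {
  local bucket="$1"
  local region="$2"

  if [ -z "$bucket" ] || [ -z "$region" ]; then
    echo "usage: create_artifact_bucket <bucket-name> <region>" >&2
    return 2
  fi

  if [ "$region" = "us-east-1" ]; then
    # us-east-1 is the default location; no configuration block allowed.
    aws s3api create-bucket --bucket "$bucket" --region "$region"
  else
    aws s3api create-bucket \
      --bucket "$bucket" \
      --region "$region" \
      --create-bucket-configuration "LocationConstraint=$region"
  fi
}

# Example (placeholder bucket name; names must be globally unique):
#   create_artifact_bucket my-pipeline-source-bucket ap-northeast-1
```

Keeping the region check in one function lets the same script run unchanged in Tokyo and in us-east-1.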

(2) Enabling S3 versioning: To enable versioning for your S3 bucket, follow these steps:

- Run the following command to enable versioning for the bucket:

aws s3api put-bucket-versioning --bucket <bucket-name> --versioning-configuration Status=Enabled

Replace <bucket-name> with the name of your S3 bucket.

Enabling versioning lets S3 store and track multiple versions of an object over time. CodePipeline requires versioning to be enabled on a bucket used by an S3 source action.
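To confirm that the setting took effect, the versioning status can be read back. This is a sketch assuming bash and a configured AWS CLI; enable_bucket_versioning is a hypothetical helper name. It runs the same put-bucket-versioning command as in step (2) and then checks that get-bucket-versioning reports Enabled.

```shell
#!/usr/bin/env bash
# Hypothetical helper: enable versioning on a bucket, then confirm that
# S3 reports the status as "Enabled".
enable_bucket_versioning() {
  local bucket="$1"

  aws s3api put-bucket-versioning \
    --bucket "$bucket" \
    --versioning-configuration Status=Enabled || return 1

  # get-bucket-versioning returns {"Status": "Enabled"} once versioning is on;
  # --query/--output text reduce that to the bare status string
  # ("None" if versioning was never configured).
  local status
  status="$(aws s3api get-bucket-versioning \
    --bucket "$bucket" --query Status --output text)" || return 1

  if [ "$status" != "Enabled" ]; then
    echo "versioning on $bucket is '$status', expected 'Enabled'" >&2
    return 1
  fi
  echo "versioning enabled on $bucket"
}

# Example (placeholder bucket name):
#   enable_bucket_versioning my-pipeline-source-bucket
```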

(3) Creating the imagedefinitions.json file: Create a file named imagedefinitions.json, which the CodePipeline ECS deploy action reads to determine which image to deploy. The file is a JSON array in which each entry pairs a container name with the image URI (including its tag) to deploy.

- Use a text editor to create a new file named imagedefinitions.json.

- Add one entry for each container to update. Each "name" must match a container name in the ECS task definition, and "imageUri" must be the full image URI, including the tag. For details of the format, refer to the AWS CodePipeline documentation on the image definitions file.
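As a sketch of step (3), the file for a single container can also be generated from a script instead of a text editor. This assumes bash; write_image_definitions and the ACCOUNT_ID variable in the usage comment are hypothetical. The values are written verbatim, so they must not contain double quotes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write an imagedefinitions.json for one container.
#   $1  container name (must match the container name in the task definition)
#   $2  full image URI, including the tag
#   $3  output path (defaults to ./imagedefinitions.json)
write_image_definitions() {
  local name="$1"
  local image_uri="$2"
  local out="${3:-imagedefinitions.json}"

  if [ -z "$name" ] || [ -z "$image_uri" ]; then
    echo "usage: write_image_definitions <container-name> <image-uri> [output]" >&2
    return 2
  fi

  # Values are inserted as-is (no JSON escaping), so avoid double quotes.
  printf '[\n  {\n    "name": "%s",\n    "imageUri": "%s"\n  }\n]\n' \
    "$name" "$image_uri" > "$out"
}

# Example producing a file like the one used in this article:
#   write_image_definitions test-nginx \
#     "${ACCOUNT_ID}.dkr.ecr.ap-northeast-1.amazonaws.com/test-repository:latest"
```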

(4) Uploading imagedefinitions.json to S3: To upload the imagedefinitions.json file to your S3 bucket, follow these steps:

- Zip the imagedefinitions.json file into a ZIP archive named imagedefinitions.json.zip.

- Upload the ZIP archive to the S3 bucket so that its object key is imagedefinitions.json.zip. This key is entered in the pipeline's S3 source action later.

## Command to create S3

[cloudshell-user@ip-10-0-xx-xx ~]$ aws s3api create-bucket \
--bucket ${S3 bucket name} \
--create-bucket-configuration LocationConstraint=ap-northeast-1

{
    "Location": "http://${S3 bucket name}.s3.amazonaws.com/"
}

## Command to enable S3 versioning

[cloudshell-user@ip-10-0-xx-xx ~]$ aws s3api put-bucket-versioning --bucket ${S3 bucket name} --versioning-configuration Status=Enabled

## imagedefinitions.json file

[
  {
    "name": "test-nginx",
    "imageUri": "${AWS Account Number}.dkr.ecr.ap-northeast-1.amazonaws.com/test-repository:latest"
  }
]

5. Automated Deployment Implementation with CodePipeline

Next, we create the pipeline itself, which is the main goal of this article.

① Basic settings for CodePipeline

Open the CodePipeline console and choose "Pipelines" -> "Create pipeline".

Under "Advanced settings" -> "Artifact store", select "Custom location" and click "Next".

② Source Stage Settings

Select "ECR" as the source provider, then select the repository (test-repository in this example) and the image tag (latest).

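③ Build stage settings

No build is needed in this example, so click "Skip build stage".

Configure the deploy stage with ECS as the deploy provider, selecting the cluster and service to update, then click "Next" and create the pipeline.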

④ Add Source Stage

After the pipeline has been created, click "Edit".

In the "Source" stage, choose "Add action", set the action provider to "S3", and select the S3 bucket you created earlier.

Enter "imagedefinitions.json.zip" as the S3 object key and click "Finish".
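The S3 source action only works if an object with exactly this key exists in the bucket. Below is a minimal sketch of the zip-and-upload step from the preparation section, assuming bash with the zip utility and a configured AWS CLI; upload_image_definitions is a hypothetical name. It stores imagedefinitions.json at the archive root, uploads the archive as imagedefinitions.json.zip, and prints the object's version ID, which should be a real ID rather than "null" when versioning is enabled.

```shell
#!/usr/bin/env bash
# Hypothetical helper: package imagedefinitions.json and upload it under the
# object key configured in the pipeline's S3 source action.
#   $1  bucket name
#   $2  path to imagedefinitions.json (defaults to ./imagedefinitions.json)
upload_image_definitions() {
  local bucket="$1"
  local src="${2:-imagedefinitions.json}"
  local key="imagedefinitions.json.zip"

  local workdir
  workdir="$(mktemp -d)" || return 1

  # -j drops directory names, so imagedefinitions.json sits at the archive root.
  zip -j "$workdir/$key" "$src" >/dev/null || { rm -rf "$workdir"; return 1; }

  aws s3 cp "$workdir/$key" "s3://$bucket/$key" || { rm -rf "$workdir"; return 1; }
  rm -rf "$workdir"

  # Confirm the object exists and print its version ID.
  aws s3api head-object --bucket "$bucket" --key "$key" \
    --query VersionId --output text
}

# Example (placeholder bucket name):
#   upload_image_definitions my-pipeline-source-bucket ./imagedefinitions.json
```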

⑤ Change the deployment stage

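On the edit screen, click the edit button of the ECS deploy action in the "Deploy" stage, and under "Input artifacts", select the output artifact of the S3 source action.

With this change, the ECS deploy action reads imagedefinitions.json from the S3 artifact and updates the ECS service with the image it specifies. The ECR source action still starts the pipeline whenever an image is pushed with the selected tag.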
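After saving the edit, the wiring can also be checked from the CLI. This is a sketch assuming bash and a configured AWS CLI; check_deploy_input is hypothetical, and it assumes the pipeline contains exactly one S3 action and one ECS action. It reads the pipeline definition with get-pipeline and uses JMESPath queries to compare the S3 action's output artifact name with the ECS action's input artifact name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm that the ECS deploy action consumes the output
# artifact of the S3 source action. Prints the artifact name on success.
#   $1  pipeline name
check_deploy_input() {
  local pipeline="$1"
  local s3_output ecs_input

  # Flatten all actions across stages, keep the action with the given
  # provider, then take the first output (or input) artifact name.
  s3_output="$(aws codepipeline get-pipeline --name "$pipeline" \
    --query "pipeline.stages[].actions[] | [?actionTypeId.provider=='S3'].outputArtifacts[].name | [0]" \
    --output text)" || return 1
  ecs_input="$(aws codepipeline get-pipeline --name "$pipeline" \
    --query "pipeline.stages[].actions[] | [?actionTypeId.provider=='ECS'].inputArtifacts[].name | [0]" \
    --output text)" || return 1

  if [ "$s3_output" != "$ecs_input" ]; then
    echo "ECS deploy reads '$ecs_input', but the S3 source outputs '$s3_output'" >&2
    return 1
  fi
  echo "$ecs_input"
}

# Example (placeholder pipeline name):
#   check_deploy_input my-ecs-pipeline
```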

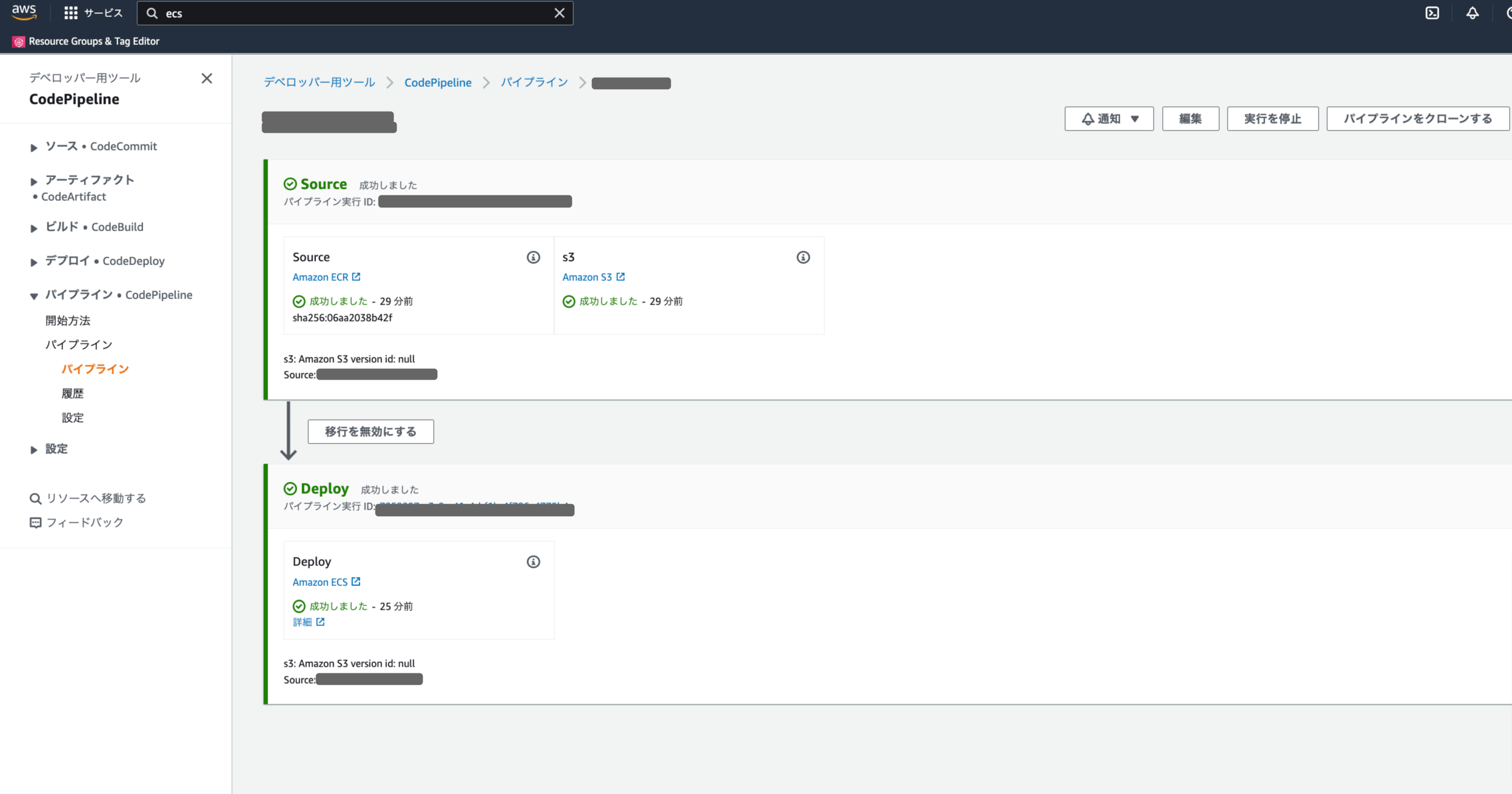

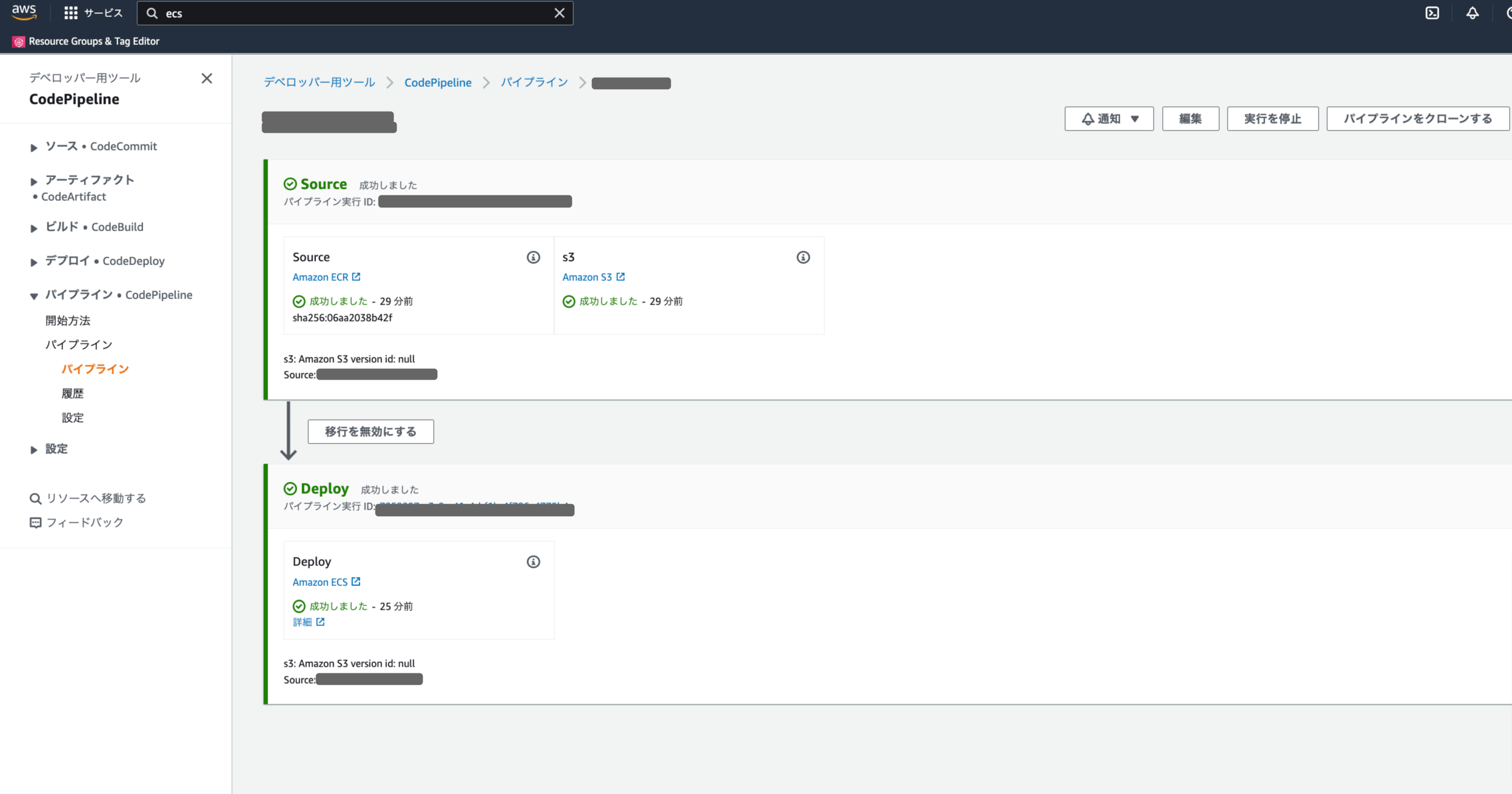

I pushed a new container image to ECR and confirmed that the ECS service was updated automatically!
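The same check can be reproduced from a terminal. This is a sketch assuming bash, Docker, and a configured AWS CLI; ecr_registry, push_image, and pipeline_status are hypothetical helpers, and the image is assumed to have been built locally as <repository>:<tag> (for example test-repository:latest). Pushing with the tag the ECR source action watches should start the pipeline, and pipeline_status then lists each stage with the status of its latest execution.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for pushing a new image and checking the pipeline.

# Registry host for an account and region.
#   $1 account ID, $2 region
ecr_registry() {
  echo "$1.dkr.ecr.$2.amazonaws.com"
}

# Push a locally built image <repo>:<tag> to ECR under the same name and tag.
#   $1 account ID, $2 region, $3 repository, $4 tag
push_image() {
  local account="$1" region="$2" repo="$3" tag="$4"
  local registry
  registry="$(ecr_registry "$account" "$region")"

  # Authenticate Docker to the private registry with a temporary token.
  aws ecr get-login-password --region "$region" |
    docker login --username AWS --password-stdin "$registry" || return 1

  docker tag "$repo:$tag" "$registry/$repo:$tag" || return 1
  docker push "$registry/$repo:$tag"
}

# Print each stage of the pipeline with the status of its latest execution.
#   $1 pipeline name
pipeline_status() {
  aws codepipeline get-pipeline-state --name "$1" \
    --query 'stageStates[].[stageName, latestExecution.status]' \
    --output text
}

# Example (placeholder values):
#   push_image "$ACCOUNT_ID" ap-northeast-1 test-repository latest
#   pipeline_status my-ecs-pipeline
```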

6. Cited/Referenced Articles

7. About the proprietary solution "PrismScaler"

・PrismScaler is a web service that enables the construction of multi-cloud infrastructures such as AWS, Azure, and GCP in just three steps, without requiring development and operation.

・The solution is designed for a wide range of usage scenarios such as cloud infrastructure construction/cloud migration, cloud maintenance and operation, and cost optimization, and can easily realize more than several hundred high-quality general-purpose cloud infrastructures by appropriately combining IaaS and PaaS.

8. Contact us

This article provides useful introductory information free of charge. For consultation and inquiries, please contact "Definer Inc".

9. Regarding Definer

・Definer Inc. provides one-stop solutions from upstream to downstream of IT.

・We are committed to providing integrated support for advanced IT technologies such as AI and cloud IT infrastructure, from consulting to requirement definition/design development/implementation, and maintenance and operation.

・PrismScaler provides high-quality, rapid "auto-configuration," "auto-monitoring," "problem detection," and "configuration visualization" for multi-cloud/IT infrastructure such as AWS, Azure, and GCP.